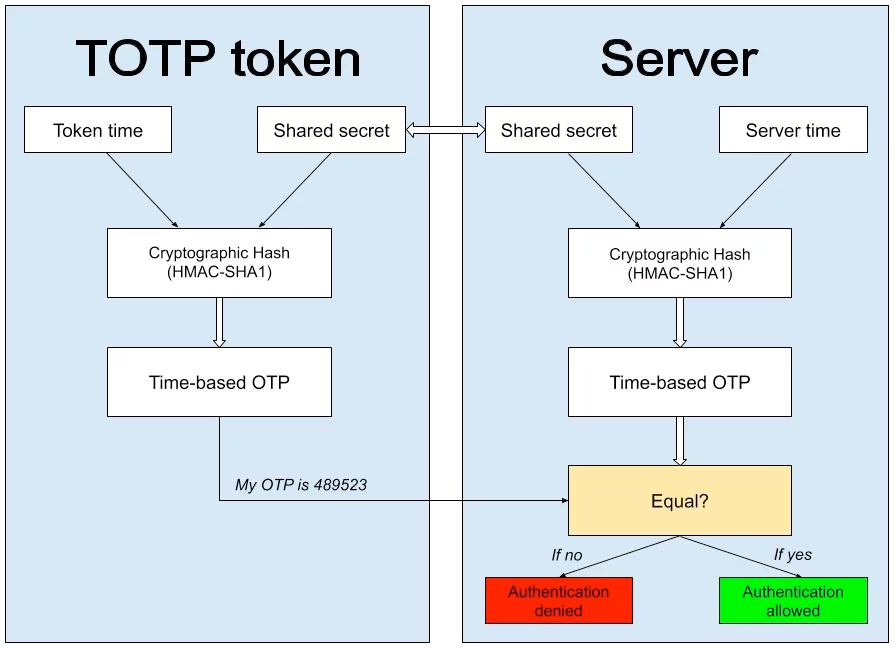

My first formal IT helpdesk role was basically "resetting stuff". I would get a ticket, an email or a phone call and would take the troubleshooting as far as I could go. Reset the password, check the network connection, confirm the clock time was right, ensure the issue persisted past a reboot, check the logs and see if I could find the failure event. Then I would package the entire thing up as a ticket and escalate it up the chain.

It was effectively on-the-job training. We were all trying to get better at troubleshooting to get a shot at one of the coveted SysAdmin jobs. Moving up from broken laptops and desktops to broken servers was about as big as 22-year-old me dreamed.

Sometimes people would (rightfully) observe that they were spending a lot of time interacting with us, while the more senior IT people were working quietly behind us and could probably fix the issue immediately. We would explain that, while that was true, our time was less valuable than theirs. Our role was to eliminate the most common causes of failure and then give them the best possible information to pick up the issue and keep investigating.

There are people who understand waiting in a line and there are people who make a career around skipping lines. These VIPs encountered this flow in their various engineering organizations and decided that a shorter line between their genius and the cogs making the product was actually the "secret sauce" they needed.

Thus, Slack was born, a tool pitched to the rank and file as a nicer chat tool and to the leadership as an all-seeing eye that allowed them to plug directly into the nervous system of the business and get instant answers from the exact right person regardless of where they were or what they were doing.

My job as a professional Slacker

At first Slack-style chat seemed great. Email was slow and the signal-to-noise ratio was off, while other chat systems I had used before at work either didn't preserve state, so whatever conversation happened while you were offline didn't get pushed to you, or they didn't scale up to large conversations well. Both XMPP and IRC had the same issue: if you were there when the conversation was happening you had context, but otherwise there was no message history for you.

There were attempts to resolve this (https://xmpp.org/extensions/xep-0313.html) but support among clients was all over the place. The clients just weren't very good and were constantly going through cycles of intense development only to be abandoned. It felt like when an old hippie would tell you about Woodstock. "You had to be there, man".

Slack brought channels, and channels brought a level of almost voyeurism into what other teams were doing. I knew exactly what everyone was doing all the time, down to where the marketing team liked to go for lunch. Responsiveness became the new corporate religion and I was a true believer. I would stop walking in the hallway to respond to a DM or answer a question I knew the answer to, ignoring the sighs of frustration as people walked around my hoodie-clad roadblock of a body.

Sounds great, what's the issue?

So what's the catch? Well, I first noticed it on the train. My daily commute home through the snowy Chicago twilight used to be a sacred ritual of mental decompression. A time to sift through the day's triumphs and (more often) the screw-ups. What needed fixing tomorrow? What problem had I pushed off maybe one day too long?

But as I got further and further into Slack, I realized I was coming home utterly drained yet strangely...hollow. I hadn't done any actual work that day.

My days had become a never-ending performance of "work". I was constantly talking about the work, planning the work, discussing the requirements of the work, and then in a truly Sisyphean twist, linking new people to old conversations where we had already discussed the work to get them up to speed on our conversation. All the while diligently monitoring my channels, a digital sentry ensuring no question went unanswered, no emoji not +1'd. That was it, that was the entire job.

Show up, spend eight hours orchestrating the idea of work, and then go home feeling like I'd tried to make a sandcastle on the beach and then gotten upset when the tide did what it always does. I wasn't making anything, and I certainly wasn't helping our users or selling the product. I was project managing, but poorly, like a toddler with a spreadsheet.

And for the senior engineers? Forget about it. Why bother formulating a coherent question for a team channel when you could just DM the poor bastard who wrote the damn code in the first place? Sure, they could push back occasionally, feigning busyness or pointing to some obscure corporate policy about proper channel etiquette. But let's be real. If the person asking was important enough (read: had a title that could sign off on their next project), they were answering. Immediately.

So, you had your most productive people spending their days explaining why they weren't going to answer questions they already knew the answer to, unless they absolutely had to. It's the digital equivalent of stopping a concert pianist to teach you "Twinkle Twinkle Little Star" 6 times a day.

It's a training problem too

And don't even get me started on the junior folks. Slack was actively robbing them of the chance to learn. Those small, less urgent issues? That's where the real education happens. You get to poke around in the systems, see how the gears grind, understand the delicate dance of interconnectedness. But why bother troubleshooting when Jessica, the architect of the entire damn stack, could just drop the answer into a DM in 30 seconds? People quickly figured out the pecking order. Why wait four hours for a potentially wrong answer when the Oracle of Code was just a direct message away?

You think you are too good to answer questions???

Au contraire! I genuinely enjoy feeling connected to the organizational pulse. I like helping people. But that, my friends, is the digital guillotine. The nice guys (and gals) finish last in this notification-driven dystopia. The jerks? They thrive. They simply ignore the incoming tide of questions, their digital silence mistaken for deep focus. And guess what? People eventually figure out who will respond and only bother those poor souls. Humans are remarkably adept at finding the path of least resistance, even if it leads directly to someone else's burnout.

Then comes review time. The jerk, bless his oblivious heart, has been cranking out code, uninterrupted by the incessant digital demands. He has tangible projects to point to, gleaming monuments to his uninterrupted focus. The nice person, the one everyone loves, the one who spent half their day answering everyone else's questions? Their accomplishments are harder to quantify. "Well, they were really helpful in Slack..." doesn't quite have the same ring as "Shipped the entire new authentication system."

It's the same problem with being the amazing pull request reviewer. Your team appreciates you, your code quality goes up, you’re contributing meaningfully. But how do you put a number on "prevented three critical bugs from going into production"? You can't. So, you get a pat on the back and maybe a gift certificate to a mediocre pizza place.

Slackifying Increases

Time marches on, and suddenly, email is the digital equivalent of that dusty corner in your attic where you throw things you don't know what to do with. It's a wasteland of automated notifications from systems nobody cares about. But Slack? There’s no rhyme or reason to it. Can I message you after hours with the implicit understanding you'll ignore it until morning? Should I schedule the message for later, like some passive-aggressive digital time bomb?

And the threads! Oh, the glorious, nested chaos of threads. Should I respond in a thread to keep the main channel clean? Or should I keep it top-level so that if there's a misunderstanding, the whole damn team can pile on and offer their unsolicited opinions? What about DMs? Is there a secret protocol there? Or is it just a free-for-all of late-night "u up?" style queries about production outages?

It felt like every meeting had a pre-meeting in Slack to discuss the agenda, followed by an actual meeting on some other platform to rehash the same points, and then a post-meeting discussion in a private channel to dissect the meeting itself. And inevitably, someone who missed the memo would then ask about the meeting in the public channel, triggering a meta-post-meeting discussion about the pre-meeting, the meeting, and the initial post-meeting discussion.

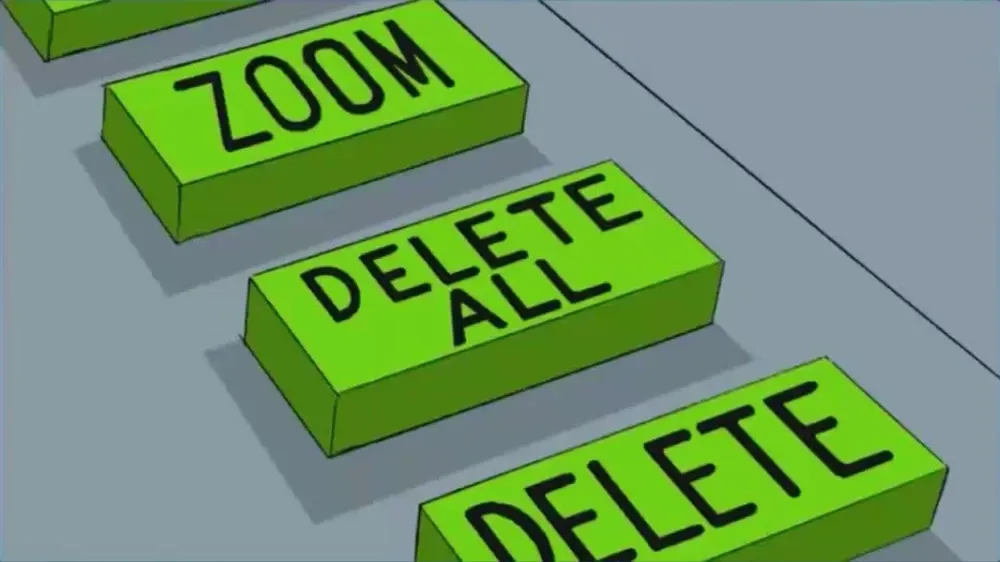

The only way I could actually get any work done was to actively ignore messages. But then, of course, I was completely out of the loop. The expectation became this impossible ideal of perfect knowledge, of being constantly aware of every initiative across the entire company. It was like trying to play a game show and write a paper at the same time. To be seen as "on it", I needed to hit the buzzer and answer the question, but come review time none of those points mattered and the scoring was made up.

I was constantly forced to choose: stay informed or actually do something. If I chose the latter, I risked building the wrong thing or working with outdated information because some crucial decision had been made in a Slack channel I hadn't dared to open for fear of being sucked into the notification vortex. It started to feel like those brief moments when you come up for air after being underwater for too long. I'd go dark on Slack for a few weeks, actually accomplish something, and then spend the next week frantically trying to catch up on the digital deluge I'd missed.

Attention has a cost

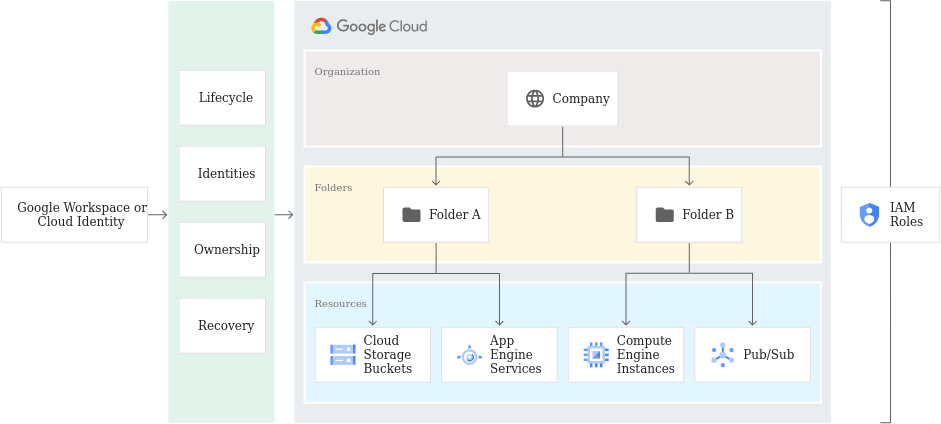

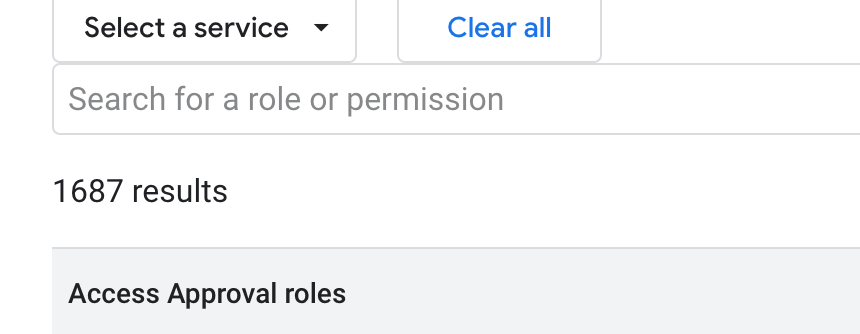

One of the hardest lessons for anyone to learn is the profound value of human attention. Slack is a fantastic tool for those who organize and monitor work. It lets you bypass the pesky hierarchy, see who's online, and ensure your urgent request doesn't languish in some digital abyss. As an executive, you can even cut out middle management and go straight to the poor souls actually doing the work. It's digital micromanagement on steroids.

But if you're leading a team that's supposed to be building something, I'd argue that Slack and its ilk are a complete and utter disaster. Your team's precious cognitive resources are constantly being bled dry by a relentless stream of random distractions from every corner of the company. There are no real controls over who can interrupt you or how often. It's the digital equivalent of having your office door ripped off its hinges and replaced with glass like a zoo. Visitors can come and peer in on what your team is up to.

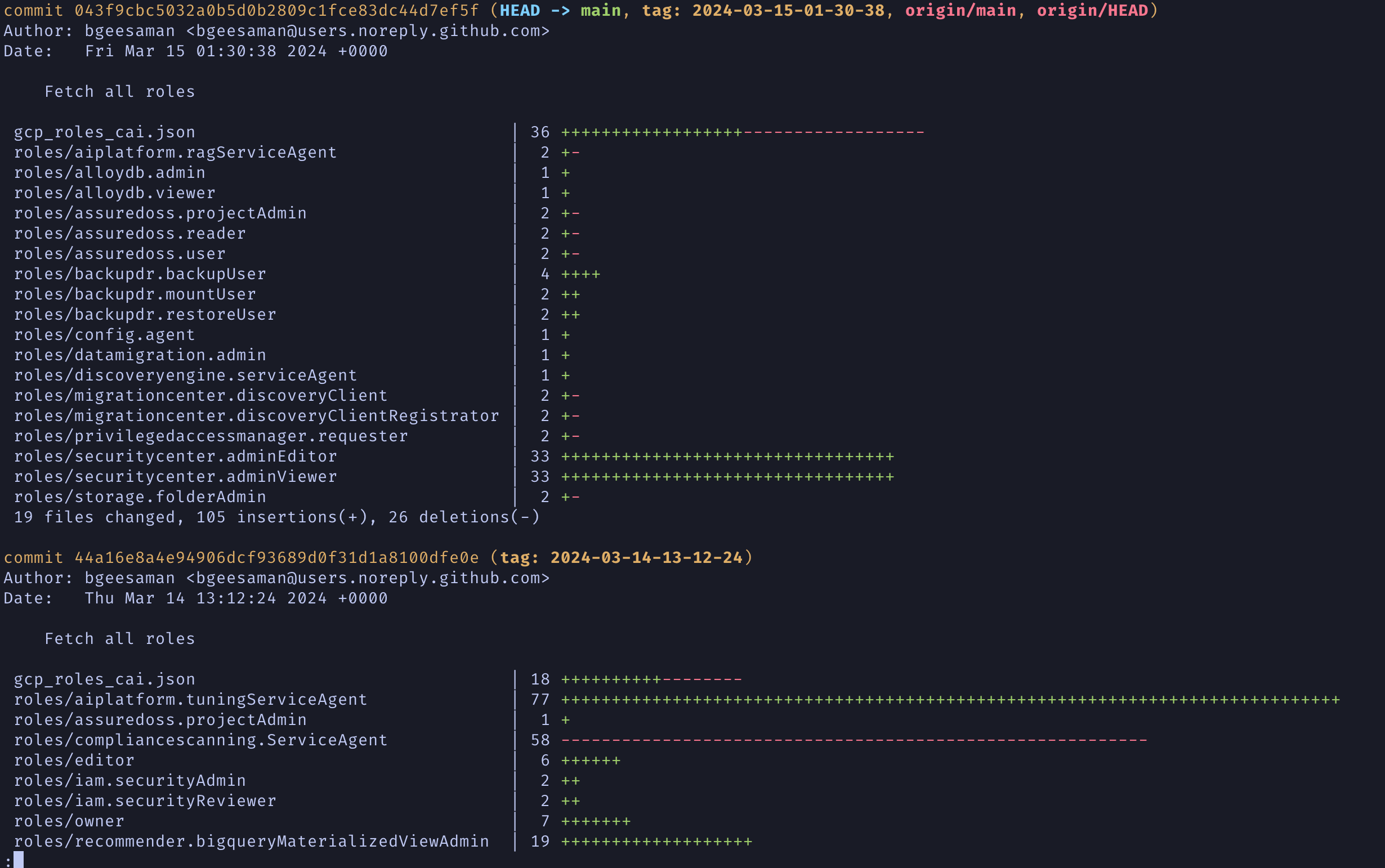

Turns out, the lack of history in tools like XMPP and IRC wasn't a bug, it was a feature. If something important needed to be preserved, you had to consciously move it to a more permanent medium. These tools facilitated casual conversation without fostering the expectation of constant, searchable digital omniscience.

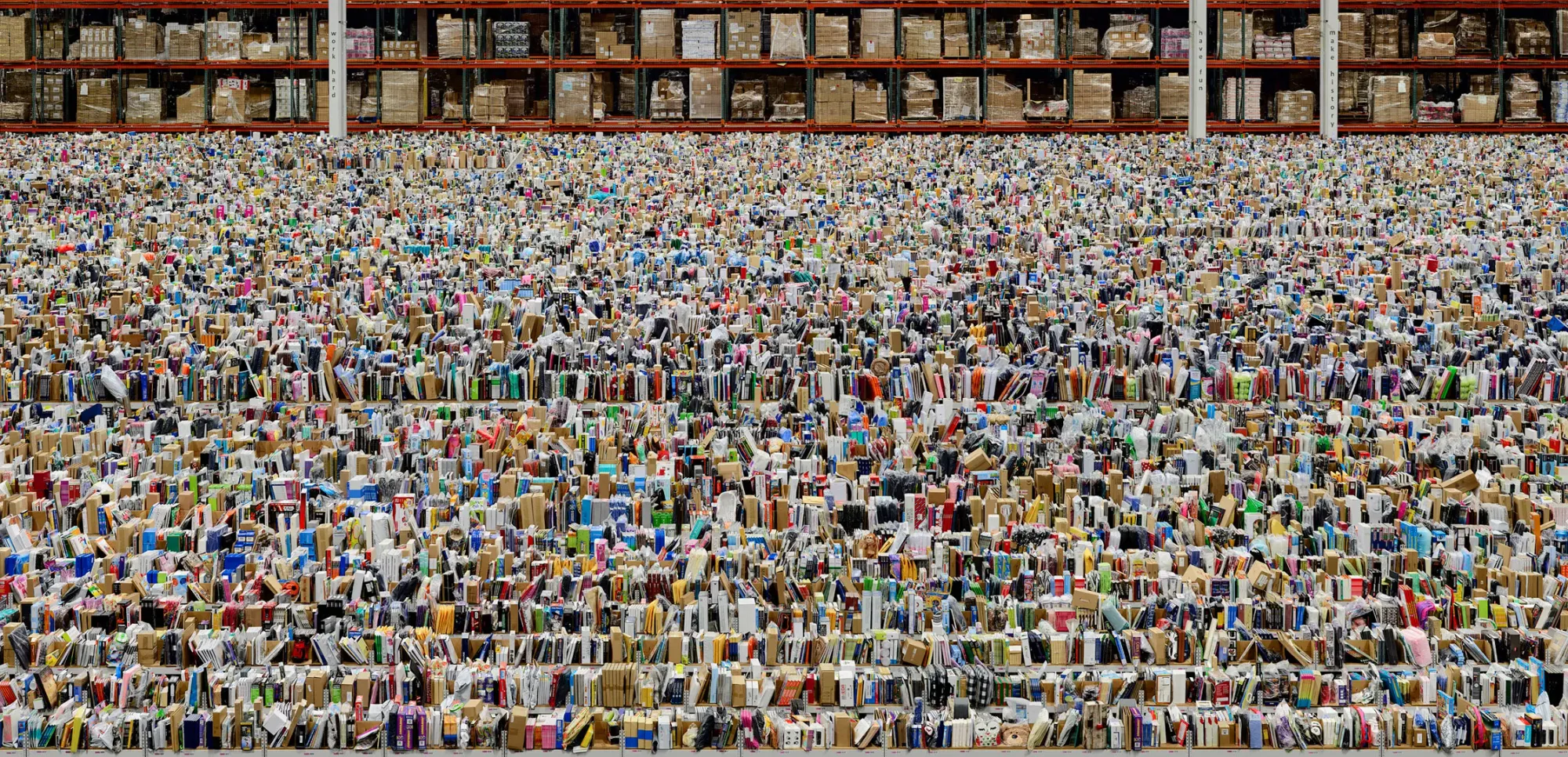

Go look at the Slack for any large open-source project. It's pure, unadulterated noise. A cacophony of voices shouting into the void. Developers are forced to tune out, otherwise it's all they'd do all day. Users have a terrible experience because it's just a random stream of consciousness, people asking questions to other people who are also just asking questions. It's like replacing a structured technical support system with a giant conference call where everyone is on hold and told to figure it out amongst themselves.

My dream

So, what do I even want here? I know, I know, it's a fool's errand. We're all drowning in Slack clones now. You can't stop this productivity-killing juggernaut. It's like trying to un-ring a bell, or perhaps more accurately, trying to silence a thousand incessantly pinging notifications.

But I disagree. I still think it's not too late to have a serious conversation about how many hours a day it's actually useful for someone to spend on Slack. What do you, as a team, even want out of a chat client? For many teams, especially smaller ones, it makes far more sense to focus your efforts where there's a real payoff. Pick one tool, one central place for conversations, and then just…turn off the rest. Everyone will be happier, even if the tool you pick has limitations, because humans actually thrive within reasonable constraints. Unlimited choice, as it turns out, is just another form of digital torture.

Try to get away with the most basic, barebones thing you can for as long as you can. I knew a (surprisingly productive) team that did most of their conversation on an honest-to-god phpBB internal forum. Another just lived and died in GitHub with Issues. Just because it's a tool a lot of people talk about doesn't make it a good tool, and just because it's old doesn't make it useless.

As for me? I'll be here, with my Slack and Teams and Discord open trying to see if anything has happened in any of the places I'm responsible for seeing if something has happened. I will consume gigs of RAM on what, even ten years ago, would have been an impossibly powerful computer to watch basically random forum posts stream in live.