I was talking to a friend recently, a non-technical employee at a large company, who was complaining about his mouse making strange noises. Curious, I asked what he had been doing. "Oh, well, I need to fill in these websites every quarter with information I get off an Excel sheet. It takes about 30 hours to do and I need to click around a lot to do it." I was a bit baffled and asked him to elaborate. He explained that there was an internal business process where he received an Excel spreadsheet full of tags, and he had to go into an internal website and make sure the tags in the company's internal app matched the spreadsheet.

"Surely you all have a development team or someone who can help automate that?" I asked, a little shocked. "No, well, we asked, but they just don't have the spare capacity right now, so we're gonna keep doing it this year." He went on to explain that this wasn't even the only one of these he had to do; in fact, there were many web pages where he needed to manually update information, seemingly only possible through his browser. I thought of the thousands of people at this one company alone who are spending tens of thousands of hours copying and pasting from spreadsheets.

When I was a kid and first introduced to computers, this was exactly the sort of tedium they were supposed to help with. Part of the sales pitch for a computer in every workplace was that once you had done the work of getting the data in and setting everything up, you would save an incredible amount of time. But this didn't seem any better than him pulling paper files and sorting them by hand. What happened? Weren't we supposed to have fixed this already?

My First Automation

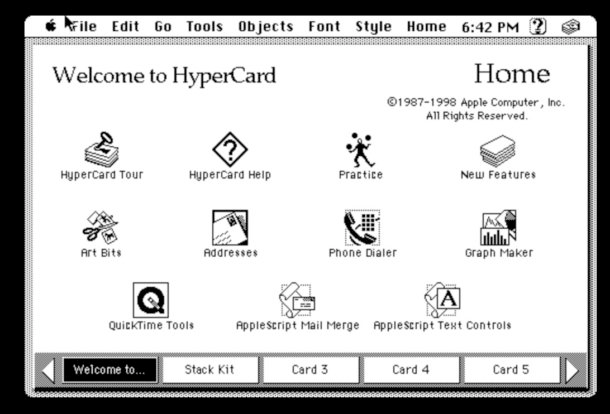

My first introduction to the concept of programming was a technology called HyperCard. HyperCard was a very basic GUI scripting program that allowed you to create stacks of black-and-white cards. Apple gave you a set of GUI controls that you could drag and drop onto the cards, and you linked everything together with HyperTalk, a programming language that still structurally looks "correct" to me.

`put the value of card field "typehere" into theValue` is an example of a HyperTalk command, but you actually had a lot of interesting options available to you. This was my first introduction to the idea of prompting the user for input, with commands like:

```
on mouseUp
  answer "This is an example of the answer command" with "Reply 1" or "Reply 2" or "Reply 3"
end mouseUp
```

One of the things I enjoyed doing a lot was trying to script art, making it seem like I had drawn something on the computer that had in fact been done through HyperTalk. Those commands looked something like this:

```
on mouseUp
  choose spray can tool
  drag from 300,100 to 400,200
  wait 1 second
  choose eraser tool
  drag from 300,100 to 400,200
  choose browse tool
end mouseUp
```

The syntax was very readable, easy to debug and very forgiving. HyperCard stacks weren't presented as complicated or difficult; they were supposed to be fun. What I remember about HyperCard, compared to the programming tools I would be exposed to later, was that it was impossible to get into an unrecoverable state. Backing out of a card while keeping the stack intact didn't require any special knowledge; a kid could figure it out with very little instruction.

For those who never got the chance to try it out: check out this great emulator here.

Automator and AppleScript

Years went by and my interest in IT continued, but for the most part it didn't involve a lot of scripting or programming. In the late 90s and early 2000s, the focus in IT was all about local GUI applications, moving power tools out of the terminal and into something that looked more like normal applications. Even things like server management and LDAP configuration mostly happened through GUI tools, with only a few PowerShell or Bash scripts used on a regular basis.

The problem with this approach is the same problem with modern web applications: it's great for a user doing something for the first time to have all this visual information and guidance on what to do, but for someone doing it for the 5000th time, there's a lot of slowness inherent in the design. The traditional path folks took in IT was to abandon GUI tooling entirely at that point if possible, leaving people lower on the totem pole to suffer through clicking through the same screens a thousand times.

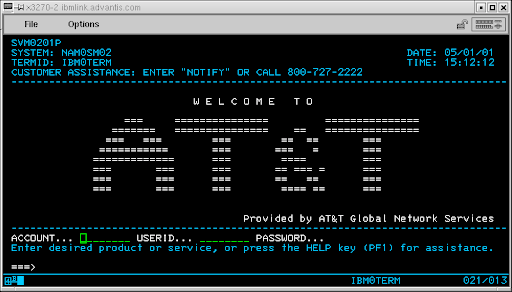

I was primarily a Mac user supporting other Mac users, which put me very much in a niche. Apple was considered all but dead at the time, and IT teams preferred to silo off the few departments they couldn't force onto Windows with a junior helpdesk person. Mostly my job was setting up the odd Mac server or debugging why Active Directory logins weren't working; nothing too shocking. However, it was here that I started to encounter a category of non-programmers writing programs to solve surprisingly important problems.

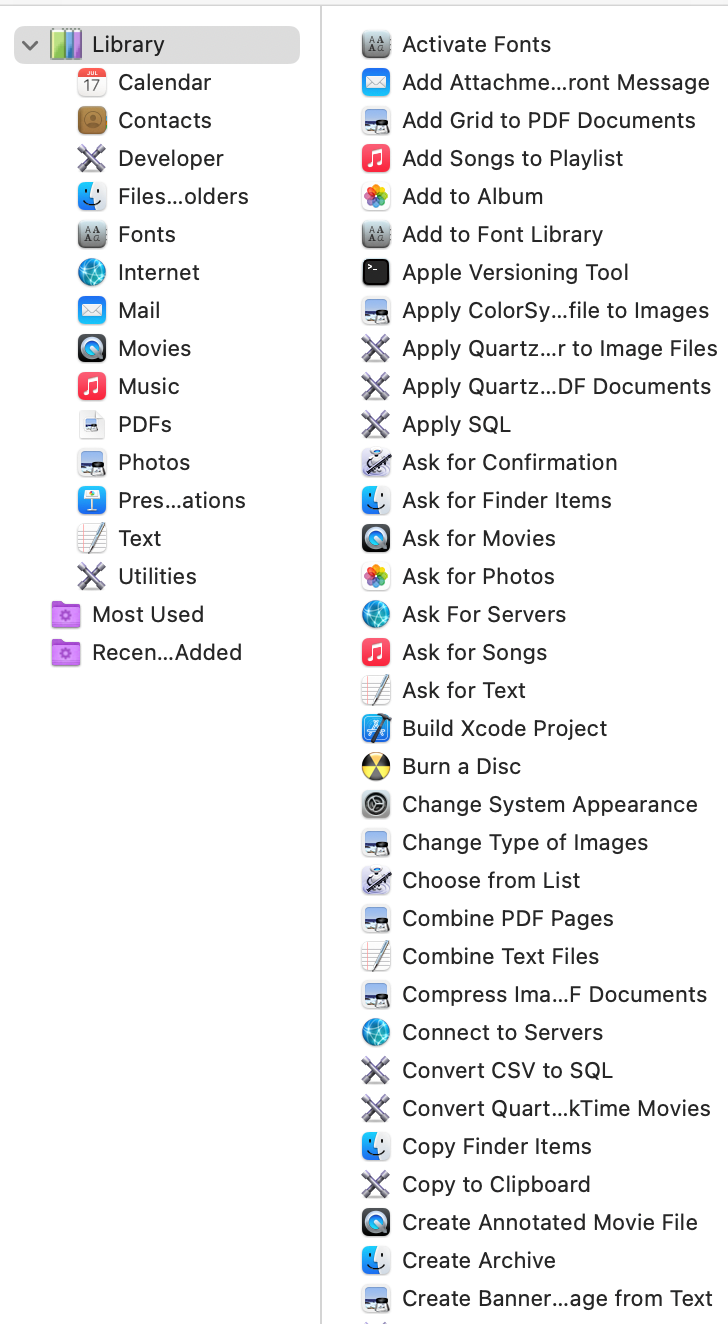

You would discover these Macs running in higher education or in design studios with a Post-it note on them saying "Don't turn off." Inevitably there would be some sort of AppleScript and/or Automator process running, resizing files or sending emails, sometimes even connecting to databases (or, most often, using Excel as a database). A common example was grading tools that would take CSVs, convert them into SQL and then load them into a database.
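The tools I ran into were AppleScript and Automator, but the core CSV-to-SQL step is easy to show in a few lines of Python. This is a minimal sketch, not a reconstruction of any of those tools: it assumes a hypothetical CSV with `student_id`, `assignment` and `score` columns, and an in-memory SQLite database stands in for whatever database a school actually used.

```python
import csv
import io
import sqlite3


def load_grades(csv_text: str, conn: sqlite3.Connection) -> int:
    """Parse a CSV of grades and insert each row into a SQLite table.

    Assumes hypothetical columns: student_id, assignment, score.
    Returns the number of rows inserted.
    """
    conn.execute(
        "CREATE TABLE IF NOT EXISTS grades ("
        "student_id TEXT, assignment TEXT, score REAL)"
    )
    reader = csv.DictReader(io.StringIO(csv_text))
    rows = [
        (row["student_id"], row["assignment"], float(row["score"]))
        for row in reader
    ]
    # Parameterized "?" placeholders let SQLite handle quoting for us.
    conn.executemany("INSERT INTO grades VALUES (?, ?, ?)", rows)
    conn.commit()
    return len(rows)


if __name__ == "__main__":
    sample = "student_id,assignment,score\ns001,quiz1,92\ns002,quiz1,85.5\n"
    conn = sqlite3.connect(":memory:")
    print(load_grades(sample, conn))  # 2
    print(conn.execute("SELECT AVG(score) FROM grades").fetchone()[0])  # 88.75
```

Using `executemany` with placeholders avoids building SQL strings out of spreadsheet values by hand, which is where homegrown versions of this kind of tool tend to break.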

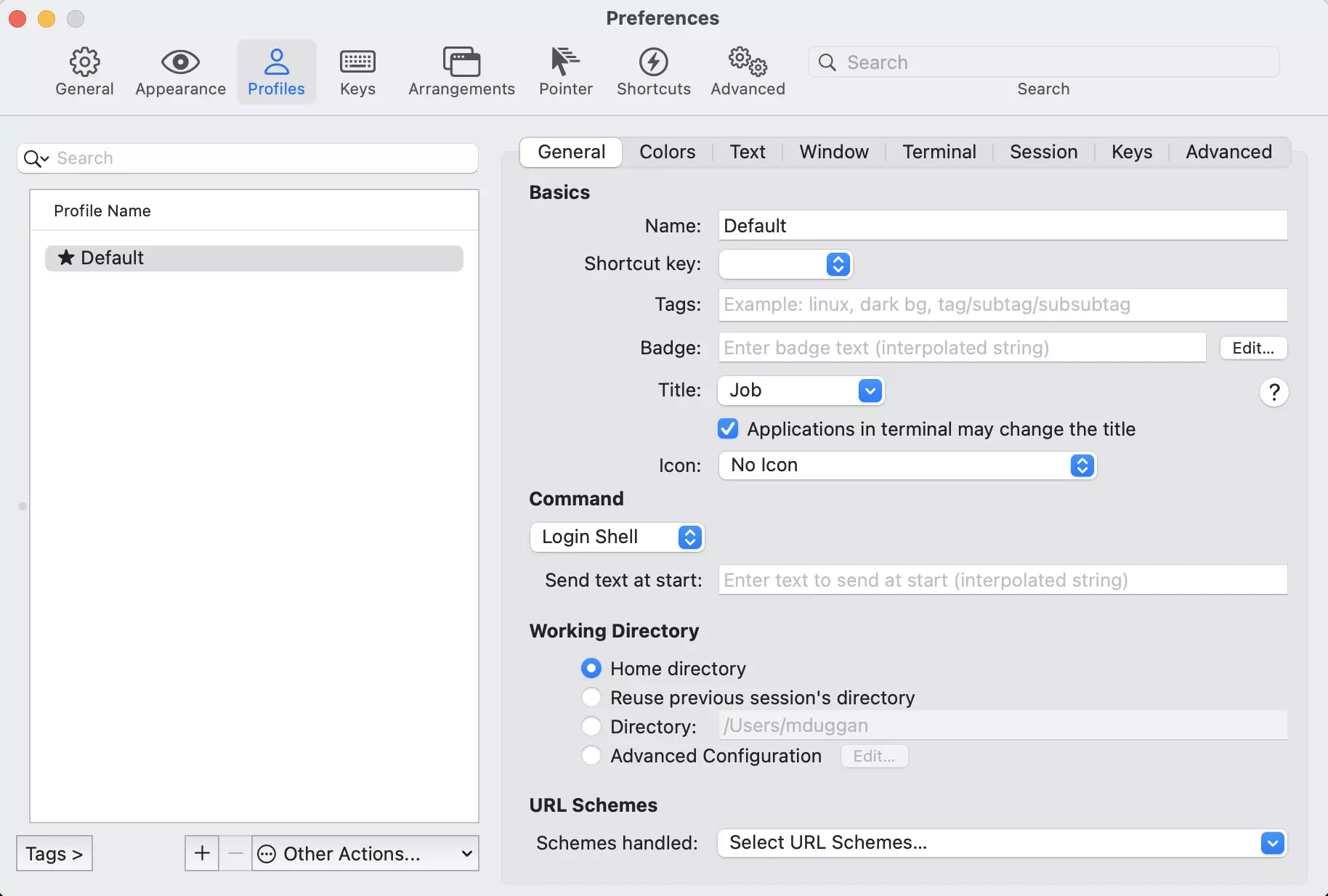

For those who haven't used Apple Automator, part of the reason I think people in Mac-heavy environments liked it for these tasks is that its workflows are difficult to mess up. You drag and drop a series of actions into a Workflow and then run the Workflow.

Over years of talking with users, I heard a similar story about how these workflows came to exist.

- Someone technical introduced the user to the existence of Automator because they started using it to automate a task.

- The less-technical user "recorded" themselves doing something they needed to do a thousand times. Automator's recording feature basically just captured what you did with the mouse and replayed it when you hit play.

- Eventually they'd get more comfortable with the interface and move away from recording toward building workflows out of actions, since those were more reliable.

- Or, if they did batch file processing, they'd use a Folder Action, which triggered something when an item was placed in a folder.

- At some point they'd run into the limit of what that could do and call out to either a Bash script or an AppleScript, depending on what the more technical person they had access to knew.
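Folder Actions are wired up by macOS itself, so there's no loop for the user to write. Purely to illustrate the "do something when a file lands in a folder" idea, here is a minimal Python sketch that swaps in a simple polling loop; `scan_for_new_files`, `watch_folder` and the `inbox` folder are hypothetical names, not anything from Automator.

```python
import os
import time
from typing import Callable, List, Optional, Set


def scan_for_new_files(folder: str, seen: Set[str]) -> List[str]:
    """Return files in `folder` not seen before, and mark them as seen."""
    current = {
        name
        for name in os.listdir(folder)
        if os.path.isfile(os.path.join(folder, name))
    }
    new = sorted(current - seen)
    seen.update(new)
    return new


def watch_folder(
    folder: str,
    action: Callable[[str], None],
    interval: float = 2.0,
    max_polls: Optional[int] = None,
) -> None:
    """Poll `folder` and call `action(path)` once for each newly added file.

    Polling stands in for the OS-level trigger a real Folder Action uses.
    Files already in the folder at startup are ignored.
    max_polls=None means keep watching forever.
    """
    seen: Set[str] = set(os.listdir(folder))
    polls = 0
    while max_polls is None or polls < max_polls:
        for name in scan_for_new_files(folder, seen):
            action(os.path.join(folder, name))
        polls += 1
        time.sleep(interval)


# Hypothetical usage: react to whatever gets dropped into ./inbox.
# watch_folder("inbox", lambda path: print("new file:", path))
```

Polling is the simplest portable approach; operating systems also offer file-change notifications, which avoid checking on a timer.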

Why do this?

For a lot of them, this was the only route to automation they were ever going to have. They weren't programmers and didn't want to become programmers, but they needed to automate some task. Automator isn't an intimidating tool; the concept of "record what my mouse does and do it again" makes sense to users. As time went on and more functionality was added to these homegrown programs, they became mission-critical, often serving as the only way these tasks got done.

From an IT perspective, that's a nightmare. I found myself in the uncomfortable position of being asked to support what were basically "hacks" around missing functionality or automation in applications. I decided early on that it was at least going to be a more interesting part of my job, so I steered into it and found myself really enjoying the process. AppleScript wasn't an amazing language to write in, but the documentation was pretty good and I loved how happy it made people.

For many of you reading, I'm sure you rolled your eyes a bit at that part: how happy it made people. I cannot stress enough that some of the most impactful programming I have ever done happened during this period. You are, quite literally, freeing people to do other things. I had teachers telling me they were staying late 5+ hours a week to do tasks we were able to automate. This form of basic programming, with the end customer right there giving you direct feedback on whether it works, is incredibly empowering for both parties.

It was also very easy to turn into a feature request. Think of all the bad internal tooling feature requests you get, with complicated explanations or workflows that make no sense when they're written down. Here you had the full thing, from beginning to end, with all the steps laid out. I noticed a much shorter pipeline from "internal request" to "shipped feature" with these amateur automations, I think in part because of the clarity that comes from already seeing how the request works end to end.

As time went on, less and less work happened in desktop applications. Platform-specific tooling like Automator and AppleScript became less relevant, as the OS hooks it needs to operate aren't present in a web page or can't be guaranteed. You can't really depend on mouse placement on a web page where the entire layout might change at any moment.

Now we're back at a place where I often see people wasting a ton of time doing exactly the sort of thing computers were supposed to prevent: endless clicking and dragging, mind-numbing repetition of selecting checkboxes and copying and pasting values into form fields. The ideas present in technology like HyperCard and AppleScript have never been more needed in normal non-technical people's lives, but there just doesn't seem to be any tooling for it in a browser world. Why?

What about RPA?

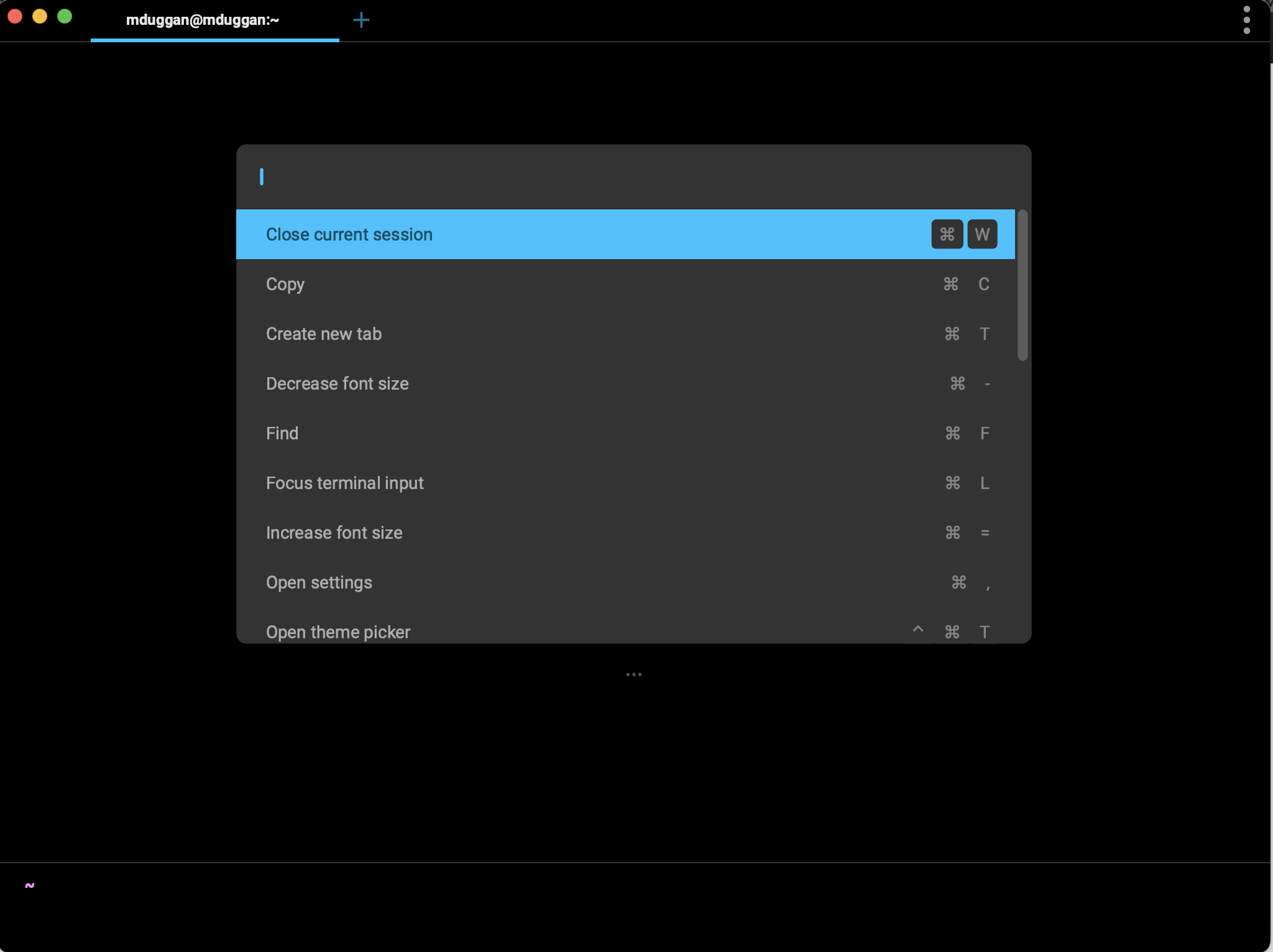

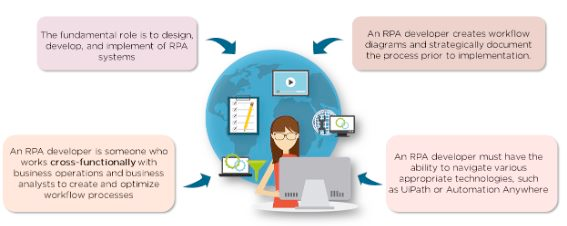

RPA, or "robotic process automation", is the attempted reintroduction of these old ideas into the web space. There are a variety of tools; the ones I've tried are UiPath and OpenRPA. Both have similar capabilities, although UiPath is certainly more packed with features. The basic workflow is similar, but there are some pretty critical problems with it that make it hard to put into a non-technical user's hands.

For those unaware, the basic workflow of an RPA tool is mostly browser-based, often with an "orchestrator" process and a "client" process. A user installs a browser plugin that records their actions and allows them to replay them. You can then layer in more automation, either using built-in plugins to call APIs or by writing actions that find elements on the page using things like CSS selectors.

Here are some of the big issues with the RPA ecosystem as it exists now:

- There isn't a path for non-technical users to take from "Record" to building resilient workflows that function unattended for long periods of time. The problem, again, is that websites are simply not stable enough targets to program against using what you can see. You need to, at the very least, understand a bit about CSS in order to "target" the thing you want to hit reliably. With desktop applications you control the updates; with websites you don't.

- The reliable way to do this stuff is often through that service's API, but then we've increased the difficulty dramatically. You now need to understand what an API is and how it works, and be authorized by your organization to have a key to access it. Getting a Google Workspace API key in a large workplace is not trivial, so this approach would unfortunately be hard to get off the ground in the first place.

- The tools don't provide good feedback to non-technical users about what happened. You need too much background information about how browsers work, how websites function, what APIs are, etc., to get even minimal value out of this tooling.

- They're also really hard to set up on your computer. Getting started and running your first automation is just not something you can do without help if you aren't technically inclined.
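The first point is easy to see in miniature. The sketch below uses Python's standard-library `html.parser` (not anything UiPath or OpenRPA do internally) to compare replaying "the second input box on the page", roughly analogous to a recorded click, against looking a field up by its `id`, which is what a CSS selector like `#tags` does. The page snippets and function names are made up for illustration.

```python
from html.parser import HTMLParser
from typing import List, Optional, Tuple


class ElementFinder(HTMLParser):
    """Record every start tag and its attributes, in document order."""

    def __init__(self) -> None:
        super().__init__()
        self.elements: List[Tuple[str, dict]] = []

    def handle_starttag(self, tag, attrs):
        self.elements.append((tag, dict(attrs)))


def parse(html: str) -> List[Tuple[str, dict]]:
    finder = ElementFinder()
    finder.feed(html)
    return finder.elements


def nth_input(html: str, n: int) -> Optional[dict]:
    """Brittle: 'the nth input on the page', like replaying a recording."""
    inputs = [attrs for tag, attrs in parse(html) if tag == "input"]
    return inputs[n] if n < len(inputs) else None


def input_by_id(html: str, element_id: str) -> Optional[dict]:
    """Sturdier: find the field by id, like the CSS selector #element_id."""
    for tag, attrs in parse(html):
        if tag == "input" and attrs.get("id") == element_id:
            return attrs
    return None


old_page = '<form><input id="name"><input id="tags"></form>'
# The same form after a redesign adds a search box at the top.
new_page = '<input id="search"><form><input id="name"><input id="tags"></form>'

print(nth_input(old_page, 1)["id"])         # tags
print(nth_input(new_page, 1)["id"])         # name (the wrong field)
print(input_by_id(new_page, "tags")["id"])  # tags
```

The `id` lookup survives the redesign only because the site happened to keep the same `id`; nothing guarantees that, which is the underlying problem.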

I understand why there has been an explosion of interest in the RPA space recently and why I see so many "RPA Developer" job openings. There's a legitimate need to fill the gap between professional programming and the manual tasks that are consuming millions of hours of people's lives, but I don't think this is the way forward. If your technology relies on existing APIs and professional tech workers to function, then it's bringing minimal value to the table over just learning Python and writing a script.

The key for a technology like this is that the person who understands the nuances of the task has to be able to debug it. If it doesn't work, if the thing doesn't write to the database or whatever, maybe you don't know exactly why that happened, but because you have some context on how the task is done manually, there is a possibility of you fixing it.

You see this all the time in non-technical workplaces: a "black box" understanding of fixes. "Oh, if you close that window, wait five minutes and open it again, it'll work" is one I remember hearing at a courthouse where I was doing contract work. Sure enough, that did fix the issue, although how they figured it out remains a mystery to me. People are not helpless in the face of technical problems, but it also isn't their full-time job to fix them.

Why not just teach everyone to program?

I hate this ideology and I always have. Human civilization progresses in part because of specialization: we as a society don't all have to learn to do everything in order to keep things functional. There's a good argument to be made that we have possibly gone too far in the other direction, that we now understand too little about how the world around us functions, but that's outside the scope of this conversation.

There is a big difference between "having a task that would benefit from automation" and "being interested in investing the hundreds of hours it takes to learn how to program". For most people, programming has nothing to do with their skills or interests and would be something they'd need to learn in their off-time. I love to program, so surely they will too? My parents both love being lawyers, but I can't think of anything I would want to do less right now.

Giving people a path from automation to "real programming", whatever that means, is great and I fully support it. But creating the expectation that the only way for people to automate tasks is to become an expert in the space is selfish thinking on the part of the people who make those services. It is easier for us if they take on the cognitive load than if we design things that are easier to use and automate.

Is there a way forward to help people automate their lives?

I think there is, but I'm not sure the modern paradigm for how we ship website designs works with it. Like website scraping, automation based on page elements and tags is not a permanent solution, and it can be difficult, if not impossible, for a user to discover that it has stopped working without adding manual checks.

There are tools like Zapier that work really well for public services. However, as more and more internal work moves to the browser, this model breaks down, since internal apps rarely have the ready-made integrations those tools depend on. What would be great is if there were a way to easily tell the automation tool "these are the hooks on the page we promise aren't going to go away", communicated through the CSS or even some sort of "promise" page.

If some stability could be added to what is basically the process of scraping a webpage, I think the tooling could catch up and at least surface to the user "here are the stable targets on this page". As things exist now, though, the industry treats APIs as the stable interface for its services and its sites as dynamic content that changes whenever it wants. While that's understandable to some extent, I think it misses the opportunity to really expand what a normal user can do with your web service.
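To make the "promise" idea concrete, here is a purely hypothetical Python sketch: the site publishes a small JSON manifest of hooks it commits to keeping, marks those elements with an attribute, and the automation tool checks the live page against the manifest before running. The `data-automation-hook` attribute, the `stable_hooks` key and `check_promises` are all invented for illustration; no existing standard is implied.

```python
import json
from html.parser import HTMLParser
from typing import Dict, List, Set


class HookCollector(HTMLParser):
    """Collect values of the hypothetical data-automation-hook attribute."""

    def __init__(self) -> None:
        super().__init__()
        self.hooks: Set[str] = set()

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name == "data-automation-hook" and value:
                self.hooks.add(value)


def check_promises(manifest_json: str, page_html: str) -> Dict[str, List[str]]:
    """Compare the hooks a site promised against what the page actually has."""
    promised = set(json.loads(manifest_json)["stable_hooks"])
    collector = HookCollector()
    collector.feed(page_html)
    return {
        "ok": sorted(promised & collector.hooks),
        "missing": sorted(promised - collector.hooks),
    }


manifest = '{"stable_hooks": ["tag-list", "save-button"]}'
# A redesign kept the tag list hook but dropped it from the Save button.
page = '<ul data-automation-hook="tag-list"></ul><button class="btn-v2">Save</button>'
print(check_promises(manifest, page))
# {'ok': ['tag-list'], 'missing': ['save-button']}
```

A report like this is the kind of feedback a non-technical user could act on: the tool can say "the Save button you rely on is gone" instead of silently clicking the wrong thing.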

It has now become very much the norm for employees to be onboarded and handed a computer where the most used application is the web browser. This has enabled incredible velocity for companies shipping new features and has really changed the way we think about software. But this has come at a cost to the actual users of that software: it has taken away their ability to reliably automate their work.

If there were a way to give people some agency in this process, I think it would pay off hugely, not only in the sheer number of hours of human life saved, but also in the quality of life of your end users. For the people who found and embraced desktop automation technology, it allowed normal people to do pretty incredible things with their computers. I would love to see a future in which normal people felt empowered to do that with their web applications.