I love reading. It is the thing on this earth that brings me the most joy. I attribute no small part of who I am and how I think to the authors I have encountered in my life. The speed at which LLMs are destroying this ecosystem is a tragedy that we're not going to understand for a generation. We keep talking about it as an optimization, like writing is a factory and books are the products that fly off the line. I think it's a tragedy that will cause people to give up on the idea of writing as a career, closing off a vital avenue for human expression and communication.

Books, like everything else, have evolved in the face of the internet. For a long time publishers were the ultimate gatekeepers and authors tried to eke out an existence by submitting to anyone who would read their stuff. Most books were commercial failures, but some became massive hits. Then eBooks came out and suddenly authors could bypass the publishers and editors of the world to get directly to readers. This was promised to unleash a wave of quality the world had never seen before.

In practice, to put it kindly, eBooks are a mixed success. Some authors benefited greatly from the situation, able to establish strong followings and keep a much higher percentage of their revenue than they would with a conventional publisher. Most released a book, nobody ever read it and that was it. However, there was also a middle tier of success, where authors could find a niche and generate a pretty reliable stream of income. Not giant numbers, but even 100 copies a month sold or borrowed under Kindle Unlimited, spread out across enough titles, can let you survive.

AI-written text is quickly filling these niches. Scammers are able to identify lucrative subsections where it might not be worth a year of a person's life to try and write a book this audience will like, but where having a machine generate a book and throw it up there is incredibly cheap. I'm seeing them more and more, these free-on-Kindle-Unlimited books with incredibly specific topics that seem tailored towards getting recommended to users in sub-genres.

There is no feeling of betrayal like thinking you are about to read something that another person slaved over, only to discover you've been tricked. They had an idea, maybe even a good idea, and instead of putting in the work and actually sitting there crafting something worth my precious hours on this Earth to read, they wasted my time with LLM drivel. Those too-formal, politically neutral, long-winded paragraphs stare back at me as the ultimate indictment of how little of a shit the person who "wrote this" cared about my experience reading it. It's like getting served a microwave dinner at a sit-down restaurant.

Maybe you don't believe me, or see the problem. Let me at least try to explain why this matters. Why the relationship between author and reader is important and requires mutual respect. Finally why this destruction is going to matter in the decades to come.

TLDR

Since I know a lot of people aren't gonna read the whole thing (which is fine), let me just bullet point my responses to the anticipated objections I address later.

- LLMs will let people who couldn't write books before do it. That isn't a perk. Part of the reason people invest so many hours into reading is because we know the author invested far more in writing. The sea of unread, maybe great books, was already huge. This is expanding the problem and breaking the relationship of trust between author and reader.

- It's not different from spellcheck or grammar check. It is, though, and you know that. Those tools made complex lookups against a large collection of rules easier; this is generating whole blobs of text. Don't be obtuse.

- They let me get my words down with less work. There is a key thing about any creative area but especially in writing that people forget. Good writing kills its darlings. If you don't care enough about a section to write it, then I don't care enough to read it. Save us both time and just cut it.

- Your blog is very verbose. I never said I was a good writer.

- The market will fix the problem. The book market relies on a vast army of unpaid volunteers to effectively sacrifice their time and wade through a sea of trash to find the gems. Throwing more books at them just means more gems get lost. Like any volunteer effort, the pool of people doesn't grow at the same rate as the problem.

- How big of a problem is it? Considering how often I'm seeing them, it feels big, but it is hard to calculate a number. It isn't just me: link

Why Does It Matter?

Allow me to veer into my personal background to provide context on why I care. I grew up in small towns across rural Ohio, places where the people who lived there either had no choice but to stay or chose to stay because of the simple lifestyle and absolute consensus on American Christian values. We said the Pledge of Allegiance aggressively, we all went to church on Sunday, gay people didn't exist and the only non-white people we saw were the migrant farm workers living in the trailers around the farms surrounding the town, who we all pretended didn't exist. As a kid it was fine. Children are neither celebrated nor hated in this culture; instead we were mostly left alone.

There is a violent edge to these places that people don't see right away. You aren't encouraged to ask a lot of questions about the world around you. We were constantly flooded with religious messaging: at school, home, church, church camp, weekly classes at night or Bible studies, and in movies and television that were specifically encouraged because they had a religious element. Anything outside of this realm was met with a chilly reaction from most adults, if not outright threats of violence. My parents didn't hit me, but I was very much in the minority of my group. More than once we turned the sound up on a video game or TV to drown out the sobs of a child being struck with a hand or belt while we were at a friend's house.

Small town opinion turns on a dime and around 4th grade it turned on me. Everyone knows your status because there aren't a lot of people so I couldn't just go hang out in a new neighborhood. Suddenly I had a lot of alone time, which I filled with reading. These books didn't just fill time, they made me invisible. I had something to do during lunch, recess, whenever. Soon I had consumed everything within the children's section of the library I was interested in reading and graduated to the adult section.

Adult Section

I was fortunate enough not to grow up today, where this loneliness and anger might have found an online community. They would reinforce my feelings, confirming that I was in the right and everyone else was in the wrong. If they rejected me, I would have wandered until I found another group. The power of the internet is the ability to self-select for your level of depravity.

Instead, wandering the poorly lit stacks of the only library in town, I came across a book that child me couldn't walk past. A heavy tome that seemed to contain exactly the sort of cursed knowledge that had been kept from me my entire life. The Book of the Dead.

The version I read was an old hardcover, tucked away in a corner with a title that was too good to pass up. A book about other religions, old religions? From a Muslim country? I knew I couldn't take it home. If anyone saw me with this it would raise a lot of questions I couldn't answer. Instead I struggled through it sitting at the long wooden tables after school and on the weekends, trying to make sense of what was happening.

The text (for those that are curious: https://www.ucl.ac.uk/museums-static/digitalegypt/literature/religious/bdbynumber.html) is dense and hard to read. It took me forever to get through it, and I missed a lot of the meaning. I would spend days sitting there writing in my little composition notebook, looking up words and trying to parse hard-to-read sentences. The Book of the Dead is about two hundred "spells" (maybe "chants" would be a better way to describe them) that basically take someone through the process of death. From preservation to the afterlife and finally to judgement, the soul was escorted through the process and each part was touched upon.

The part that blew my mind was the Hymn to Osiris:

"(1) Hail to thee, Osiris, lord of eternity, king of the gods, thou who hast many names, thou disposer of created things, thou who hast hidden forms in the temples, thou sacred one, thou KA who dwellest in Tattu, thou mighty (2) one in Sekhem, thou lord to whom invocations are made in Anti, thou who art over the offerings in Annu, thou lord who makest inquisition in two-fold right and truth, thou hidden soul, the lord of Qerert, thou who disposest affairs in the city of the White Wall, thou soul of Ra, thou very body of Ra who restest in (3) Suten-henen, thou to whom adorations are made in the region of Nart, thou who makest the soul to rise, thou lord of the Great House in Khemennu, thou mighty of terror in Shas-hetep, thou lord of eternity, thou chief of Abtu, thou who sittest upon thy throne in Ta-tchesert, thou whose name is established in the mouths of (4) men, thou unformed matter of the world, thou god Tum, thou who providest with food the ka's who are with the company of the gods, thou perfect khu among khu's, thou provider of the waters of Nu, thou giver of the wind, thou producer of the wind of the evening from thy nostrils for the satisfaction of thy heart. Thou makest (5) plants to grow at thy desire, thou givest birth to . . . . . . . ; to thee are obedient the stars in the heights, and thou openest the mighty gates. Thou art the lord to whom hymns of praise are sung in the southern heaven, and unto thee are adorations paid in the northern heaven. The never setting stars (6) are before thy face, and they are thy thrones, even as also are those that never rest. An offering cometh to thee by the command of Seb. The company of the gods adoreth thee, the stars of the tuat bow to the earth in adoration before thee, [all] domains pay homage to thee, and the ends of the earth offer entreaty and supplication. When those who are among the holy ones (7) see thee they tremble at thee, and the whole world giveth praise unto thee when it meeteth thy majesty. 
Thou art a glorious sahu among the sahu's, upon thee hath dignity been conferred, thy dominion is eternal, O thou beautiful Form of the company of the gods; thou gracious one who art beloved by him that (8) seeth thee. Thou settest thy fear in all the world, and through love for thee all proclaim thy name before that of all other gods. Unto thee are offerings made by all mankind, O thou lord to whom commemorations are made, both in heaven and in earth. Many are the shouts of joy that rise to thee at the Uak[*] festival, and cries of delight ascend to thee from the (9) whole world with one voice. Thou art the chief and prince of thy brethren, thou art the prince of the company of the gods, thou stablishest right and truth everywhere, thou placest thy son upon thy throne, thou art the object of praise of thy father Seb, and of the love of thy mother Nut. Thou art exceeding mighty, thou overthrowest those who oppose thee, thou art mighty of hand, and thou slaughterest thine (10) enemy. Thou settest thy fear in thy foe, thou removest his boundaries, thy heart is fixed, and thy feet are watchful. Thou art the heir of Seb and the sovereign of all the earth;

To a child raised in a heavily Christian environment, this isn't just close to biblical writing, it's the same. The whole world praises and worships him with a father and mother and woe to his foes who challenge him? I had assumed all of this was unique to Christianity. I knew there had been other religions but I didn't know they were saying the exact same things.

As important as the text is the surrounding context the academic sources put the text in. An expert walks me through how translations work, the source of the material, how our understanding has changed over time. As a kid drawn in by a cool title, I'm learning a lot about how to intake information. I'm learning real history has citations, explanations, debates, ambiguity. Real academic writing has a style, which when I stumble across the metaphysical Egyptian magic nonsense makes it easy to spot.

The reason this book mattered is the expert human commentary. The words themselves with some basic context wouldn't have meant anything. It's by understanding the amount of work that went into this translation, what it means, what it also could mean, that the importance sets in. That's the human element which creates all the value. You aren't reading old words, you are being taken on a guided tour by someone who has lived with this text for a long time and knows it up and down.

I quickly expanded, growing from this historical text to a wide range of topics. I soon found there was someone there to meet me at every stage of life. When I was lonely or angry as a teenager I found those authors and stories that spoke to that, that put those feelings into a context and a bigger picture. This isn't a new experience, people have felt this way going back to the very beginning. So much of the value isn't just the words, it's the sense of a relationship between me and the author. When you encounter this in fiction or in historical text, you come to understand that, as overwhelming as it feels in that second, it is part of being a human being. This person experienced it and lived, you will too.

You also get to experience emotions that you may never experience in your own life. A Passage to India was a book I enjoyed a lot as a teen, even though it is the story of two British women touring around India and clashing with the colonial realities of British history. I knew nothing about British culture or the 1920s; all of this was as alien to me as anything else. It's fiction, but with so much historical backing you still feel like you are seeing something different, something new.

That's a powerful part of why books work. Even if you the author are just imagining those scenarios, real life bleeds in. You can make text that reads like A Farewell to Arms, but you would miss the point if you did. It's more interesting and more powerful because it's Hemingway basically recounting his wartime experience through his characters (obviously pumping up the manliness as he goes). It is when writers draw on their personal lives that it hits hardest.

Instead of finding a community that reinforced how alone and sad I was in that moment, I found evidence it didn't matter. People had survived far worse and ultimately turned out to be fine. You can't read about the complex relationship of fear and respect Egyptians had with the Nile, where too little water was death and too much was also death, then endlessly fixate on your own problems. Humanity is capable of adaptation and the promise is, so are you.

Why AI Threatens Books

As readers get older and they spend a few decades going through books, they discover authors they like and more importantly styles they like. However you also like to see some experimentation in the craft, maybe with some rough edges. To me it's like people who listen to concert recordings instead of the studio album. Maybe it's a little rougher but there is also genius there from time to time. eBooks quickly became where you found the indie gems that would later get snapped up by publishers.

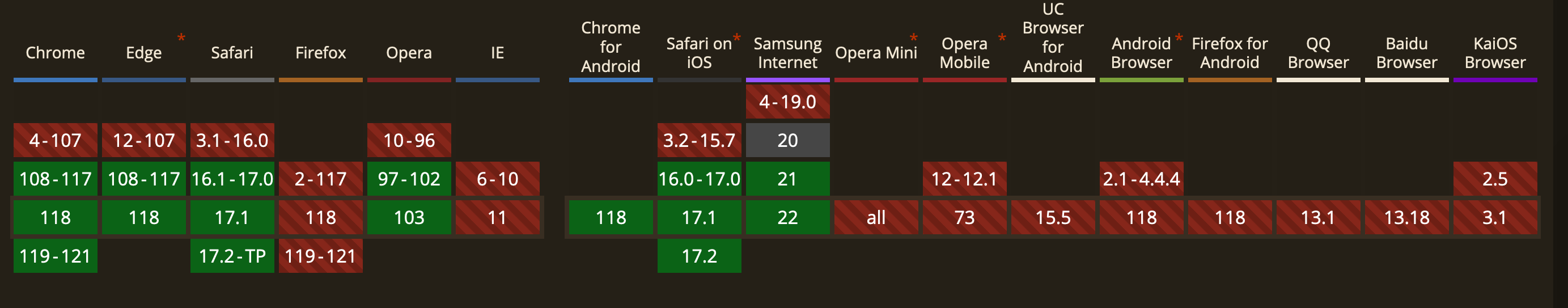

The key difference between physical books and eBooks is that bookstores and libraries are curated. They'll stock the shelves with things they like and things that will sell. Indie bookstores tend to veer a little more towards things they like, but in general it's not hard to tell the difference between the stack of books the staff loves and the ones they think the general population will buy. However, each one had to get read by a person. That is the key difference between music or film and books.

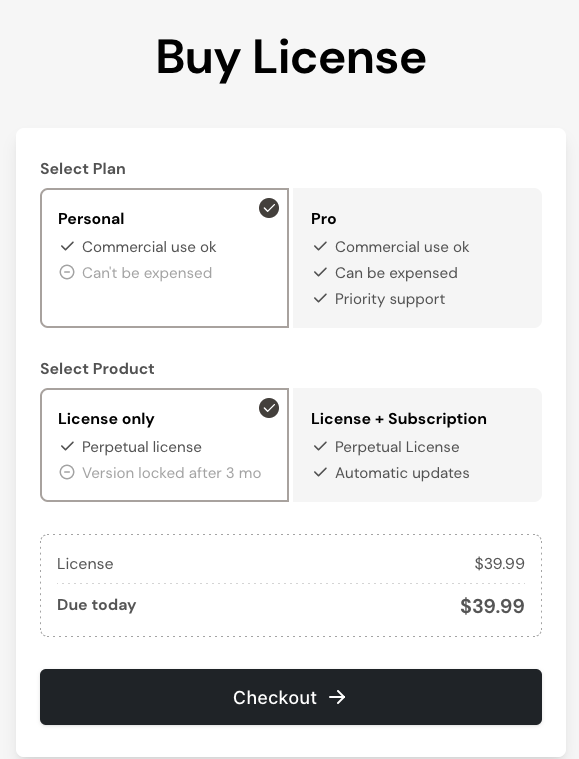

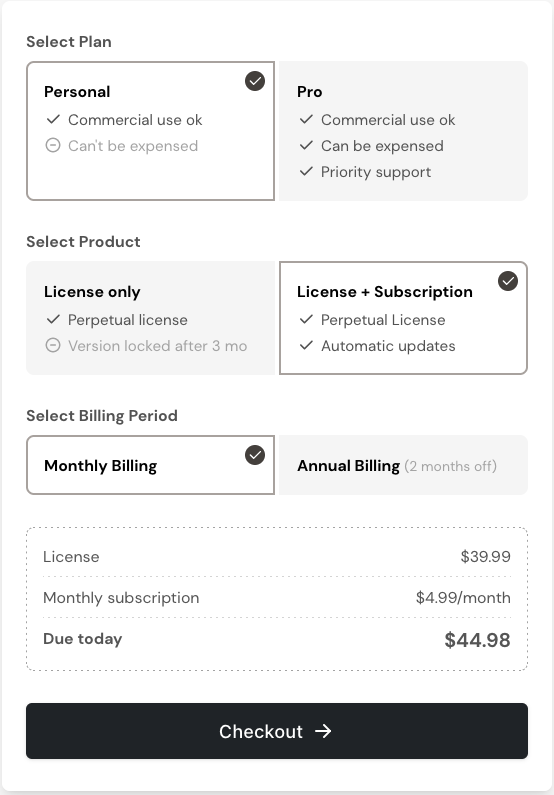

A music reviewer needs to invest between 30 and 60 minutes to listen to an album. A movie reviewer needs somewhere between 1 and 3 hours. An owner of a bookstore in Chicago broke down his experience pretty well:

Average person: 4 books a year if they read at all

Readers (people who consider it a hobby): 30-50 books a year

Super readers: 80 books

80 books is not a lot of books. Adult novels clock in at about 90,000 words, 200-300 words per minute reading speed, 7-8 hours to get through a book. To combat this discrepancy websites like Goodreads were popularized because frankly you cannot invest 8 hours of your life in shitty eBooks very often. At the very least your investment should hopefully scare off others considering doing the same (or at least they can make an informed choice).
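For anyone who wants to check that math, here is a quick back-of-the-envelope sketch in Python. The 90,000-word length, the 200-300 words per minute range and the 80 books a year all come from the numbers above; the function name is made up purely for illustration. At the slow end of the range a novel works out to about 7.5 hours, at the fast end about 5.

```python
def hours_per_book(words: int, words_per_minute: int) -> float:
    """Hours needed to read `words` words at a steady reading speed."""
    return words / words_per_minute / 60


NOVEL_WORDS = 90_000   # typical adult novel, per the estimate above
SUPER_READER_BOOKS = 80  # books per year for a "super reader"

slow = hours_per_book(NOVEL_WORDS, 200)  # slow end of the range
fast = hours_per_book(NOVEL_WORDS, 300)  # fast end of the range

print(f"Hours per book: {fast:.1f} to {slow:.1f}")
print(f"Hours per year for 80 books: "
      f"{fast * SUPER_READER_BOOKS:.0f} to {slow * SUPER_READER_BOOKS:.0f}")
```

Even the fastest reader in that range is giving up hundreds of hours a year, which is why every bad book costs so much.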

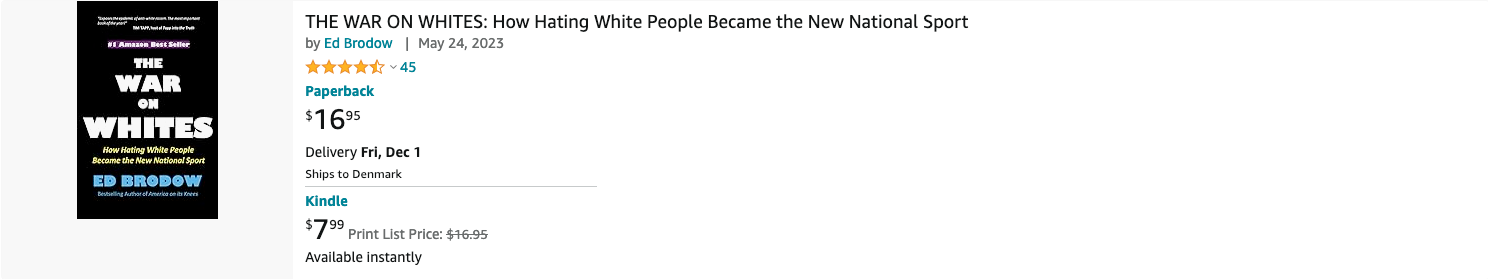

The ebook market also started to not be somewhere you wanted to wade in randomly due to the spike in New Age nonsense writing and openly racist or sexist titles. This book below was found by searching the term "war" and going to the second page. As a kid I would have had to send a money order to the KKK to get my hands on a book like this, but now it's in my virtual bookstore next to everything else. Since Amazon, despite their wealth and power, has no interest in policing their content, you are forced to solve the problem through community effort.

The reason why AI books are so devastating to this ecosystem should be obvious, but let's lay it out. It breaks the emotional connection between reader and writer and creates a sense of paranoia. Is this real or fake? In order to find out, someone else needs to invest a full work day into reading it. Then you need to join a community with enough trusted reviewers willing to donate their time for free to tell you whether the book is good or bad. Finally you need to hope that you are a member of the right book reading community to discover the review.

So if we were barely surviving the flood of eBooks and missing tons and tons of good books, the last thing we needed was someone to crank up the volume of books shooting out into the marketplace. The chances that one of the sacred reviewers even finds a new author's book decrease, so the community won't find it and the author will see that they have no audience and will either stop writing or will ensure they don't write another book like the first book. The feedback loop, which was already breaking under the load, completely collapses.

Now that AI books exist, the probability that I will ever blind purchase another eBook on Amazon from an unknown author drops to zero. Now more than ever I entirely rely on the reviews of others. Before I might have wandered through the virtual stacks, but no more. I'm not alone in this assessment. Friends and family have reported the same feeling, even if they haven't themselves been burned by an AI book they knew about.

AI books solve a problem that didn't exist, which is this presumption by tech people that what we needed was more people writing books. Instead, like so many technical solutions to problems that the architects never took any time to understand, the result doesn't help smaller players. It places all the power back into publishers and the small cadre of super reviewers since they're willing to invest the time to check for at least some low benchmark of quality.

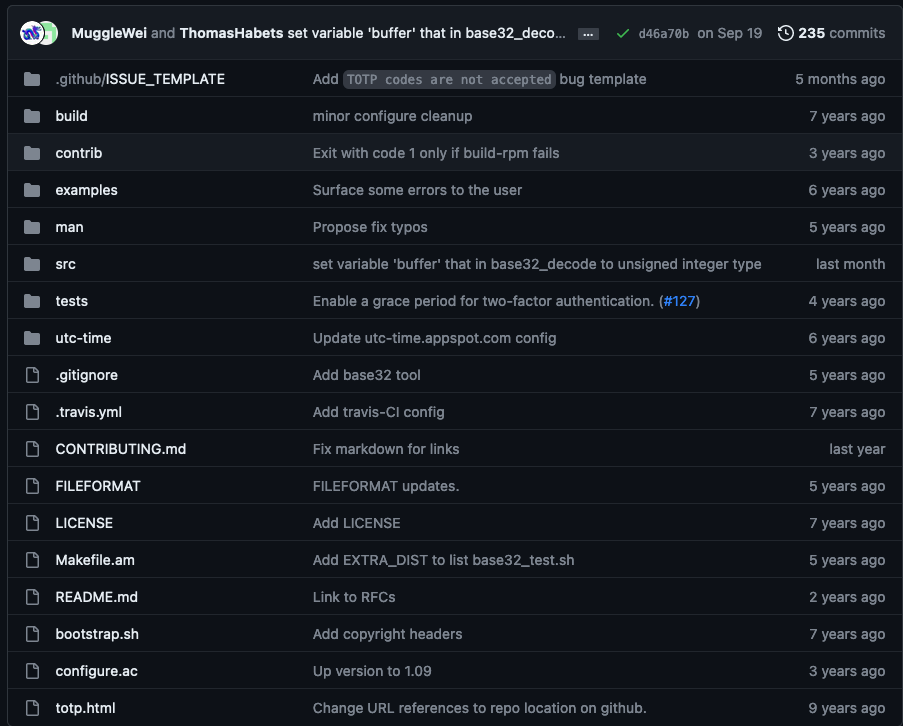

The sad part is this is unstoppable. eBooks are too easy to make with LLMs and no reliable detection systems exist to screen them before they're uploaded to the market. Amazon has no interest in setting realistic limits to how many books users can upload to the Kindle Store, still letting people upload a laughable three books a day. Google Play Store seems to have no limit, same with Apple Books. It's depressing that another market will become so crowded with trash, but nobody in a position to change it seems to care.

The Future

So where does that leave us? Well kind of back to where we started. If you are excellent at marketing and can get the name of your eBook out there, then people can go directly to it. But similar to how the App Store and Play Store are ruined for new app discoverability, it's a lopsided system which favors existing players and stacks the deck against anyone new. Publishers will still be able to get the authors to do the free market research through the eBook market and then snap up proven winners.

Since readers pay the price for this system by investing money and time into fake books, it both increases the amount of terrible out there and further incentivizes the push down in eBook price. If there are 600,000 "free" eBooks on Kindle Unlimited and you are trying to compete with a book that took a fraction of the time to produce, you are going to struggle to justify more than the $1.99-$2.99 price point. So not only are you selling a year (or years) of your life for the cost of a large soda, the probability of someone organically finding your book went from "bad" to "grain of sand in the ocean".

Even if there are laws, there is no chance they'll be able to make a meaningful difference unless the laws mandate that AI-produced text is watermarked in some distinct way, which everyone will immediately remove. So what was a "hard but possible" dream turns into an "attempting to become a professional athlete" level of statistical improbability. The end result will be fewer people trying, so we get fewer good stories and instead just endlessly retread the writing of the past.