I've been working with git now full-time for around a decade now. I use it every day, relying on the command-line version primarily. I've read a book, watched talks, practiced with it and in general use it effectively to get my job done. I even have a custom collection of hooks I install in new repos to help me stay on the happy path. I should like it, based on mere exposure effect alone. I don't.

I don't feel like I can always "control" what git is going to do, with commands sometimes resulting in unexpected behavior that is consistent with the way git works but doesn't track with how I think it should work. Instead, I need to keep a lot in my mind to get it to do what I want. "Alright, I want to move unstaged edits to a new branch. If the branch doesn't exist, I want to use checkout, but if it does exist I need to stash, checkout and then stash pop." "Now if the problem is that I made changes on the wrong branch, I want stash apply and not stash pop." "I need to bring in some cross-repo dependencies. Do I want submodules or subtree?"

I need to always deeply understand the difference between reset, revert, checkout, clone, pull, fetch, cherrypick when I'm working even though some of those words mean the same thing in English. You need to remember that push and pull aren't opposites despite the name. When it comes to merging, you need to think through the logic of when you want rebase vs merge vs merge --squash. What is the direction of the merge? Shit, I accidentally deleted a file awhile ago. I need to remember git rev-list -n 1 HEAD – filename. Maybe I deleted a file and immediately realized it but accidentally committed it. git reset --hard HEAD~1 will fix my mistake but I need to remember what specifically --hard does when you use it and make sure it's the right flag to pass.

Nobody is saying this is impossible and clearly git works for millions of people around the world, but can we be honest for a second and acknowledge that this is massive overkill for the workflow I use at almost every job which looks as follows:

- Make a branch

- Push branch to remote

- Do work on branch and then make a Pull Request

- Merge PR, typically with a squash and merge cause it is easier to read

- Let CI/CD do its thing.

I've never emailed a patch or restored a repo from my local copy. I don't spend weeks working offline only to attempt to merge a giant branch. We don't let repos get larger than 1-2 GB because then they become difficult to work with when I just need to change like three files and make a PR. None of the typical workflow benefits from the complexity of git.

More specifically, it doesn't work offline. It relies on merge controls that aren't even a part of git with Pull Requests. Most of that distributed history gets thrown away when I do a squash. I don't gain anything with my local disk being cluttered up with out-of-date repos I need to update before I start working anyway.

Now someone saying "I don't like how git works" is sort of like complaining about PHP in terms of being a new and novel perspective. Let me lay out what I think would be the perfect VCS and explore if I can get anywhere close to there with anything on the market.

Gitlite

What do I think a VCS needs (and doesn't need) to replace git for 95% of usecases.

- Dump the decentralized model. I work with tons of repos, everyone works with tons of repos, I need to hit the server all the time to do my work anyway. The complexity of decentralized doesn't pay off and I'd rather be able to do the next section and lose it. If GitHub is down today I can't deploy anyway so I might as well embrace the server requirement as a perk.

- Move a lot of the work server-side and on-demand. I wanna search for something in a repo. Instead of copying everything from the repo, running the search locally and then just accepting that it might be out of date, run it on the server and tell me what files I want. Then let me ask for just those files on-demand instead of copying everything.

- I want big repos and I don't want to copy the entire thing to my disk. Just give me the stuff I want when I request it and then leave the rest of it up there. Why am I constantly pulling down hundreds of files when I work with like 3 of them.

- Pull Request as a first-class citizen. We have the concept of branches and we've all adopted the idea of checks that a branch must pass before it can be merged. Let's make that a part of the CLI flow. How great would be it to be able to, inside the same tool, ask the server to "dry-run" a PR check and see if my branch passes? Imagine taking the functionality of the

ghCLI and not making it platform specific alakubectlwith different hosted Kubernetes providers. - Endorsing and simplifying the idea of cross-repo dependencies.

submodulesdon't work the way anybody wants them to.subtreedoes but taking work and pushing it back to the upstream dependency is confusing and painful to explain to people. Instead I want something like: https://gitmodules.com/- My server keeps it in sync with the remote server if I'm pulling from a remote server but I can pin the version in my repo.

- My changes in my repo go to the remote dependency if I have permission

- If there are conflicts they are resolved through a PR.

- Build in better visualization tools. Let me kick out to a browser or whatever to more graphically explore what I'm looking at here. A lot of people use the CLI + a GUI tool to do this with

gitand it seems like something we could roll into one step. - Easier centralized commit message and other etiquette enforcement. Yes I can distribute a bunch of git hooks but it would be nice if when you cloned the repo you got all the checks to make sure that you are doing things the right way before you wasted a bunch of time only to get caught by the CI linter or commit message format checker. I'd also love some prompts like "hey this branch is getting pretty big" or "every commit must be of a type fix/feat/docs/style/test/ci whatever".

- Read replica concept. I'd love to be able to point my CI/CD systems at a read replica box and preserve my primary VCS box for actual users. Primary server fires a webhook that triggers a build with a tag, hits the read replica which knows to pull from the primary if it doesn't have that tag. Be even more amazing if we could do some sort of primary/secondary model where I can set both in the config and if primary (cloud provider) is down I can keep pushing stuff up to somewhere that is backed up.

So I tried out a few competitors to see "is there any system moving more towards this direction".

SVN in 2024

My first introduction to version control was SVN (Subversion), which was pitched to me as "don't try to make a branch until you've worked here a year". However getting SVN to work as a newbie was extremely easy because it doesn't do much. Add, delete, copy, move, mkdir, status, diff, update, commit, log, revert, update -r, co -r were pretty much all the commands you needed to get rolling. Subversion has a very simple mental model of how it works which also assists with getting you started. It's effectively "we copied stuff to a file server and back to your laptop when you ask us to".

I have to say though, svn is a much nicer experience than I remember. A lot of the rough edges seem to have been sanded down and I didn't hit any of the old issues I used to. Huge props to the Subversion team for delivering great work.

Subversion Basics

Effectively your Subversion client commits all your files as a single atomic transaction to the central server as the basic function. Whenever that happens, it creates a new version of the whole project, called a revision. This isn't a hash, it's just a number starting at zero, so there's no confusion as a new user what is the "newer" or "older" thing. These are global numbers, not tied to a file, so it's the state of the world. For each individual file there are 4 states it can be in:

- Unchanged locally + current remote: leave it alone

- Locally changed + current remote: to publish the change you need to commit it, an update will do nothing

- Unchanged locally + out of date remotely:

svn updatewill merge the latest copy into your working copy - Locally changed + out of date remotely:

svn commitwon't work,svn updatewill try to resolve the problem but if it can't then the user will need to figure out what to do.

It's nearly impossible to "break" SVN because pushing up doesn't mean you are pulling down. This means different files and directories can be set to different revisions, but only when you run svn update does the whole world true itself up to the latest revision.

Working with SVN looks as follows:

- Ensure you are on the network

- Run

svn updateto get your working copy up to latest - Make the changes you need, remembering not to use OS tooling to move or delete files and instead use

svn copyandsvn moveso it knows about the changes. - Run

svn diffto make sure you want to do what you are talking about doing - Run

svn updateagain, resolve conflicts withsvn resolve - Feeling good? Hit

svn commitand you are done.

Why did SVN get dumped then? One word: branches.

SVN Branches

In SVN a branch is really just a directory that you stick into where you are working. Typically you do it as a remote copy and then start working with it, so it looks more like you are copying the URL path to a new URL path. But to users they just look like normal directories in the repository that you've made. Before SVN 1.4 merging a branch required a masters degree and a steady hand, but they added an svn merge which made it a bit easier.

Practically you are using svn merge against the main to keep your branch in sync and then when you are ready to go, you run svn merge --reintegrate to push the branch to master. Then you can delete the branch, but if you need to read the log the URL of the branch will always work to read the log of. This was particularly nice with ticket systems where the URL was just the ticket number. But you don't need to clutter things up forever with random directories.

In short a lot of the things that used to be wrong with svn branches aren't anymore.

What's wrong with it?

So SVN breaks down IME when it comes to automation. You need to make it all yourself. While you do you have nuanced access control over different parts of a repo, in practice this wasn't often valuable. What you don't have is the ability to block someone from merging in a branch without some sort of additional controls or check. It also can place a lot of burden on the SVN server since nobody seems to ever update them even when you add a lot more employees.

Also the UIs are dated and the entire tooling ecosystem has started to rot from users leaving. I don't know if I could realistically recommend someone jump from git to svn right now, but I do think it has a lot of good ideas that moves us closer to what I want. It would just need a tremendous amount of UI/UX investment in terms of web to get it to where I would like using it over git. But I think if someone was interested in that work, the fundamental "bones" of Subversion are good.

Sapling

One thing I've heard from every former Meta engineer I've worked with is how much they miss their VCS. Sapling is that team letting us play around with a lot of those pieces, adopted for a more GitHub-centric world. I've been using it for my own personal stuff for a few months and have really come to fall in love with it. It feels like Sapling is specifically designed to be easy to understand, which is a delightful change.

A lot of the stuff is the same. You clone with sl clone, you check the status with sl status and you commit with sl commit. The differences that immediately stick out are the concept of stacks and the concept of the smartlog. So stacks are "collections of commits" and the idea is that from the command line I can issue PRs for those changes with sl pr submit with each GitHub PR being one of the commits. This view (obviously) is cluttered and annoying, so there's another tool that helps you see the changes correctly which is ReviewStack.

None of this makes a lot of sense unless I show you what I'm talking about. I made a new repo and I'm adding files to it. First I check the status:

❯ sl st

? Dockerfile

? all_functions.py

? get-roles.sh

? gunicorn.sh

? main.py

? requirements.in

? requirements.txtThen I add the files:

sl add .

adding Dockerfile

adding all_functions.py

adding get-roles.sh

adding gunicorn.sh

adding main.py

adding requirements.in

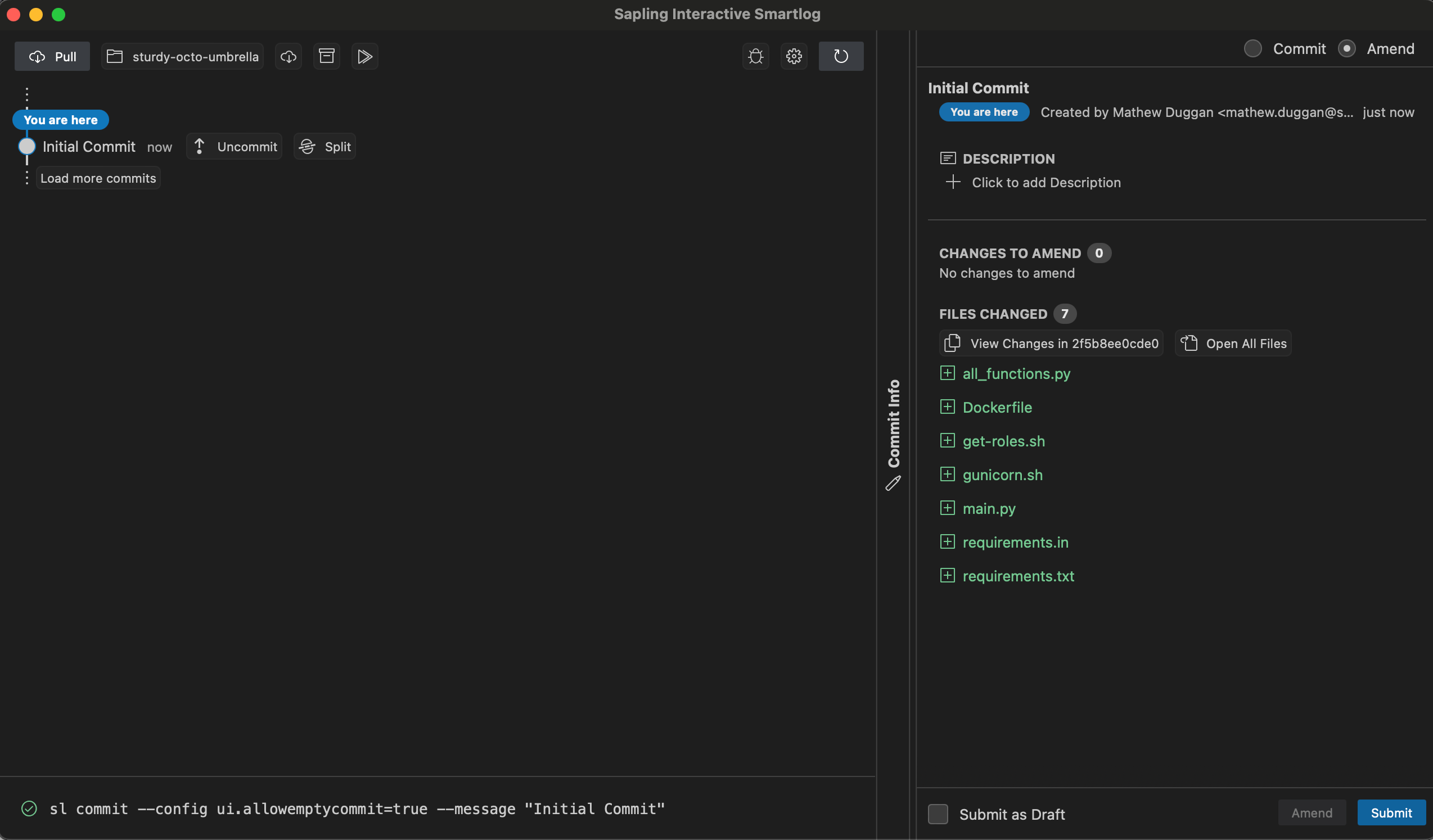

adding requirements.txtIf I want a nicer web UI running locally, I run sl web and get this:

So I added all those files in as one Initial Commit. Great, let's add some more.

❯ sl

@ 5a23c603a 4 seconds ago mathew.duggan

│ feat: adding the exceptions handler

│

o 2652cf416 17 seconds ago mathew.duggan

│ feat: adding auth

│

o 2f5b8ee0c 9 minutes ago mathew.duggan

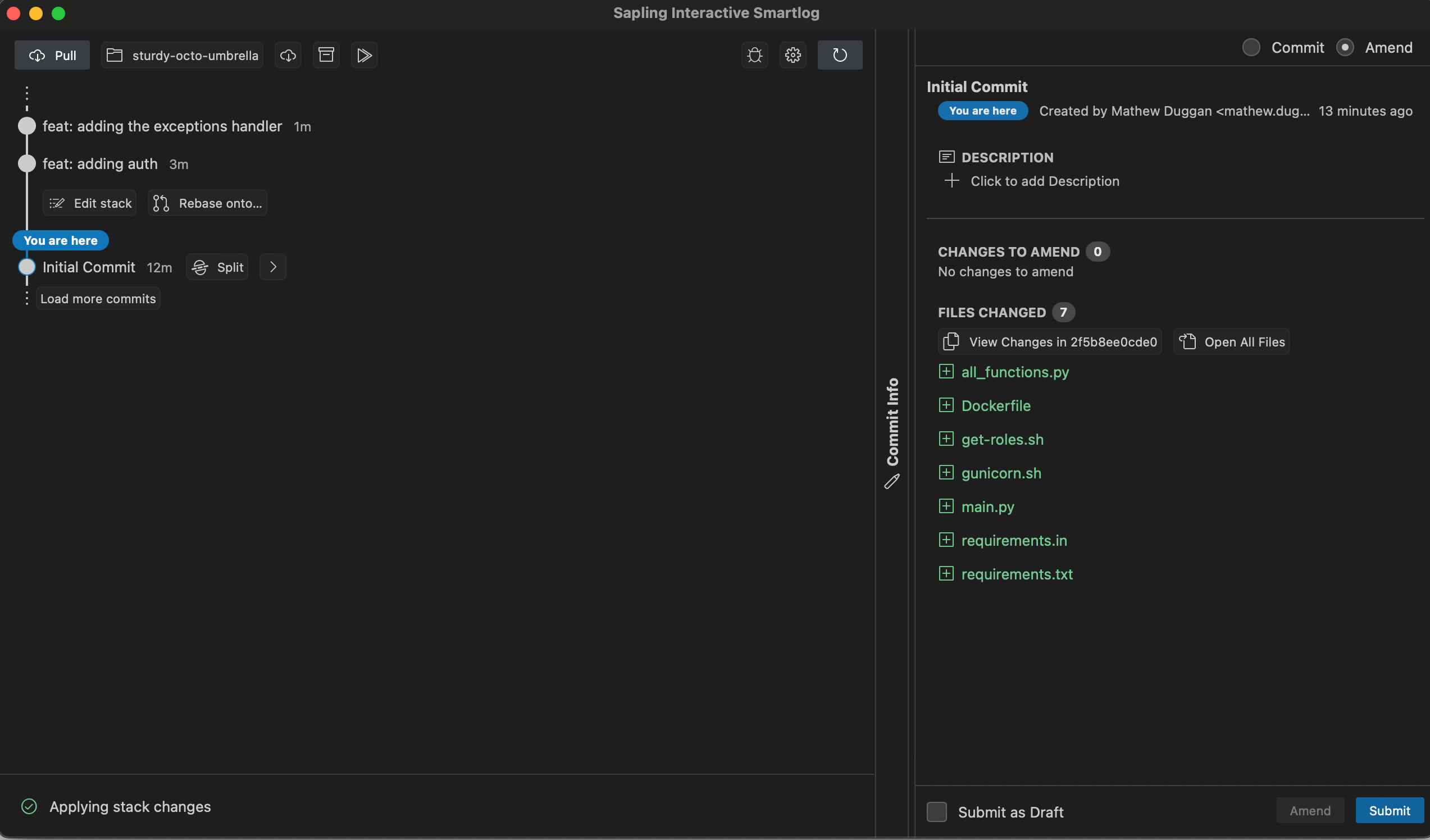

Initial CommitNow if I want to navigate this stack, I can just use sl prev which moves me up and down the stack:

sl prev 1

0 files updated, 0 files merged, 1 files removed, 0 files unresolved

[2f5b8e] Initial CommitAnd that is also represented in my sl output

❯ sl

o 5a23c603a 108 seconds ago mathew.duggan

│ feat: adding the exceptions handler

│

o 2652cf416 2 minutes ago mathew.duggan

│ feat: adding auth

│

@ 2f5b8ee0c 11 minutes ago mathew.duggan

Initial CommitThis also shows up in my local web UI

Finally the flow ends with sl pr to create Pull Requests. They are GitHub Pull Requests but they don't look like normal GitHub pull requests and you don't want to review them the same way. The tool you want to use for this is ReviewStack.

Why I like it

Sapling lines up with what I expect a VCS to do. It's easier to see what is going on, it's designed to work with a large team and it surfaces the information I want in a way that makes more sense. The commands make more sense to me and I've never found myself unable to do something I needed to do.

More specifically I like throwing away the idea of branches. What I have is a collection of commits that fork off from the main line of development, but I don't have a distinct thing I want named that I'm asking you to add. I want to take the main line of work and add a stack of commits to it and then I want someone to look at that collection of commits and make sure it makes sense and then run automated checks against it. The "branch" concept doesn't do anything for me and ends up being something I delete anyway.

I also like that it's much easier to undo work. This is something where I feel like git makes it really difficult to handle and uncommit, unamend, unhide, and undo in Sapling just work better for me and always seem to result in the behavior that I expected. Losing the staging area and focusing more on easy to use commands is a more logical design.

Why you shouldn't switch

If I love Sapling so much, what's the problem? So to get Sapling to the place I actually want it to be, I need more of the Meta special sauce running. Sapling works pretty well on top of GitHub, but what I'd love is to get:

- Mononoke, which is the server-side component of Sapling (https://github.com/facebook/sapling/blob/main/eden/mononoke/README.md)

- EdenFS (https://github.com/facebook/sapling/blob/main/eden/fs/docs/Overview.md)

These seem to be the pieces to get all the goodness of this system as outlined below

- On-demand historical file fetching (remotefilelog, 2013)

- File system monitor for faster working copy status (watchman, 2014)

- In-repo sparse profile to shrink working copy (2015)

- Limit references to exchange (selective pull, 2016)

- On-demand historical tree fetching (2017)

- Incremental updates to working copy state (treestate, 2017)

- New server infrastructure for push throughput and faster indexes (Mononoke, 2017)

- Virtualized working copy for on-demand currently checked out file or tree fetching (EdenFS, 2018)

- Faster commit graph algorithms (segmented changelog, 2020)

- On-demand commit fetching (2021)

I'd love to try all of this together (and since there is source code for a lot of it, I am working on trying to get it started) but so far I don't think I've been able to see the full Sapling experience. All these pieces together would provide a really interesting argument for transitioning to Sapling but without them I'm really tacking a lot of custom workflow on top of GitHub. I think I could pitch migrating wholesale from GitHub to something else, but Meta would need to release more of these pieces in an easier to consume fashion.

Scalar

Alright so until Facebook decided to release the entire package end to end, Sapling exists as a great stack on top of GitHub but not something I could (realistically) see migrating a team to. Can I make git work more the way I want to? Or at least can I make it less of a pain to manage all the individual files?

Microsoft has a tool that does this, VFS for Git, but it's Windows only so that does nothing for me. However they also offer a cross-platform tool called Scalar that is designed to "enable working with large repos at scale". It was originally a Microsoft technology and was eventually moved to git proper, so maybe it'll do what I want.

What scalar does is effectively set all the most modern git options for working with a large repo. So this is the built-in file-system monitor, multi-pack index, commit graphs, scheduled background maintenance, partial cloning, and clone mode sparse-checkout.

So what are these things?

- The file system monitor is FSMonitor, a daemon that tracks changes to files and directories from the OS and adds them to a queue. That means

git statusdoesn't need to query every file in the repo to find changes. - Take the

gitpack directory with a pack file and break it into multiples. - Commit graphs which from the docs:

- " The commit-graph file stores the commit graph structure along with some extra metadata to speed up graph walks. By listing commit OIDs in lexicographic order, we can identify an integer position for each commit and refer to the parents of a commit using those integer positions. We use binary search to find initial commits and then use the integer positions for fast lookups during the walk."

- Finally clone mode sparse-checkout. This allows people to limit their working directory to specific files

The purpose of this tool is to create an easy-mode for dealing with large monorepos, with an eye towards monorepos that are actually a collection of microservices. Ok but does it do what I want?

Why I like it

Well it's already built into git which is great and it is incredibly easy to use and get started with. Also it does some of what I want. Taking a bunch of existing repos and creating one giant monorepo, the performance was surprisingly good. The sparse-checkout means I get to designate what I care about and what I don't and also solves the problem of "what if I have a giant directory of binaries that I don't want people to worry about" since it follows the same pattern matching as .gitignore

Now what it doesn't do is radically change what git is. You could grow a repo to much much larger with these defaults set, but it's still handling a lot of things locally and requiring me to do the work. However I will say it makes a lot of my complaints go away. Combined with the gh CLI tool for PRs and I can cobble together a reasonably good workflow that I really like.

So while this is definitely the pattern I'm going to be adopting from now on (monorepo full of microservices where I manage scale with scalar), I think it represents how far you can modify git as an existing platform. This is the best possible option today but it still doesn't get me to where I want to be. It is closer though.

You can try it out yourself: https://git-scm.com/docs/scalar

Conclusion

So where does this leave us? Honestly, I could write another 5000 words on this stuff. It feels like as a field we get maddeningly close to cracking this code and then give up because we hit upon a solution that is mostly good enough. As workflows have continued to evolve, we haven't come back to touch this third rail of application design.

Why? I think the people not satisfied with git are told that is a result of them not understanding it. It creates a feeling that if you aren't clicking with the tool, then the deficiency is with you and not with the tool. I also think programmers love decentralized designs because it encourages the (somewhat) false hope of portability. Yes I am entirely reliant on GitHub actions, Pull Requests, GitHub access control, SSO, secrets and releases but in a pinch I could move the actual repo itself to a different provider.

Hopefully someone decides to take another run at this problem. I don't feel like we're close to done and it seems like, from playing around with all these, that there is a lot of low-hanging optimization fruit that anyone could grab. I think the primary blocker would be you'd need to leave git behind and migrate to a totally different structure, which might be too much for us. I'll keep hoping it's not though.

Corrections/suggestions: https://c.im/@matdevdug