So a while ago I purchased a TP-Link AX3000 wireless router as a temporary, same-day fix for a dying AP. Of course, like all temporary fixes, this one ended up being super permanent. It's a fine wireless router with nothing interesting to report, but one feature I stumbled on while clicking around the web UI seemed like a great place to stick random files.

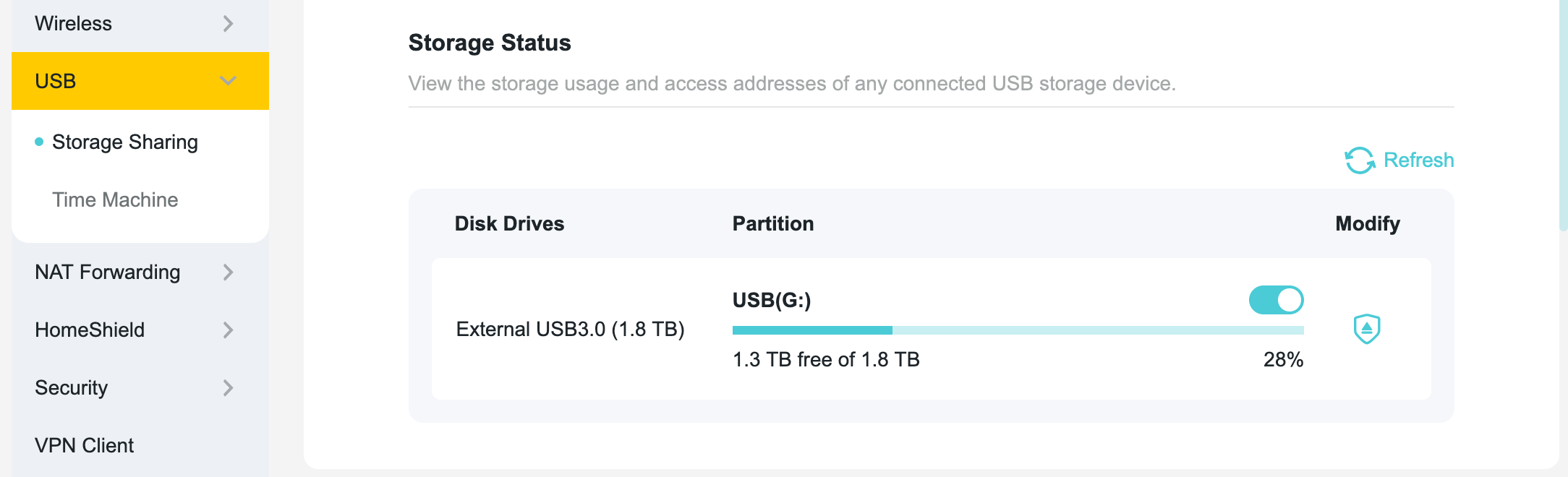

Inside of Advanced Settings, you'll see this pane:

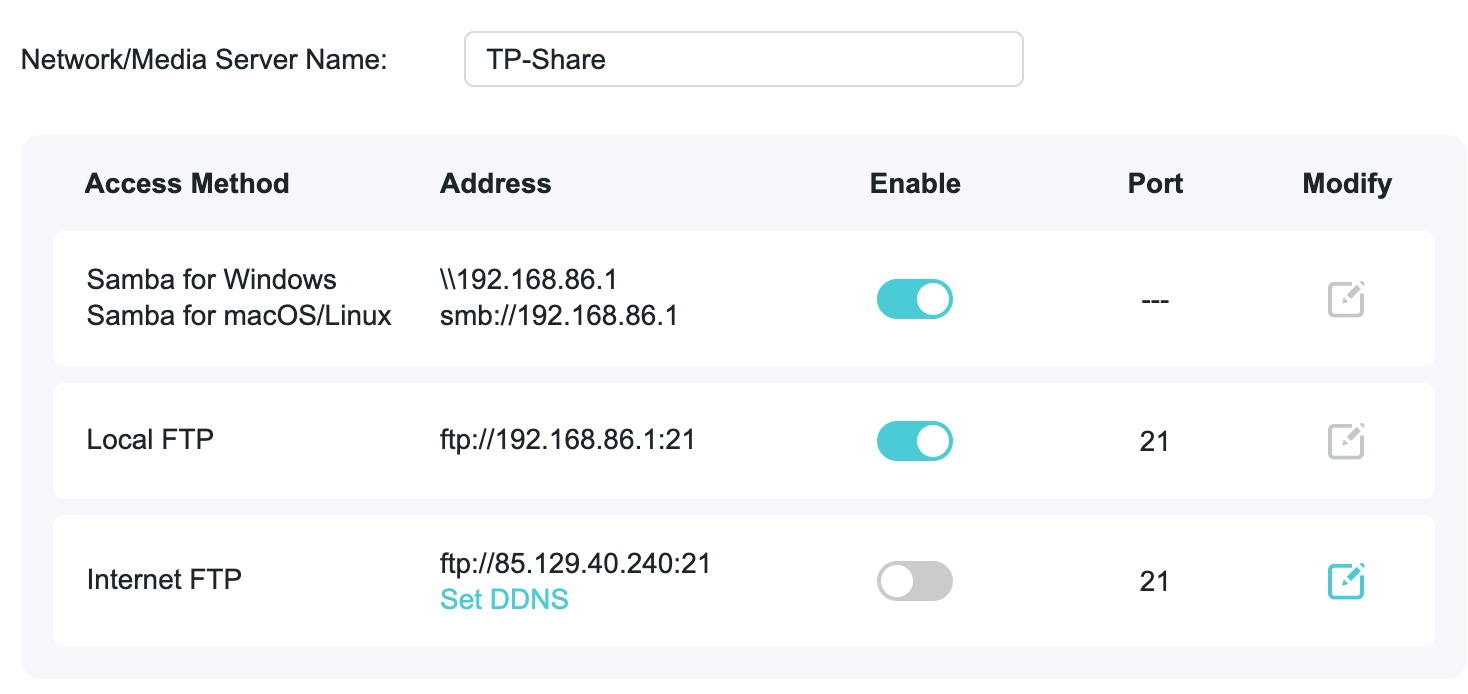

You have a few options for how to expose this USB drive, including SMB and FTP.

In my testing SMB didn't work that well; it seemed to disconnect all the time. FTP works pretty well, so that's what I ended up using. That was fine, except that some files seemed to get corrupted at random when I moved them over.

FAT32 will never die

Looking at the failing files, I realized they were all over 4 GB and thought "there's no way in 2025 they are formatting this external drive in FAT32, right?" To be clear, I didn't partition this drive. The router offered to wipe it when I plugged it in and I said sure.

However, that is exactly what they're doing, which means a maximum file size of 4 GiB minus one byte (4,294,967,295 bytes) per file. That explained the transfer problems and, while still annoying, it isn't a complicated thing to work around.
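Since FAT32 stores each file's size in a 32-bit field, the quickest sanity check before copying anything is to look for files above 2^32 - 1 bytes. A minimal sketch of that check, assuming you just want a list of offending paths (the function name `find_oversized_files` is hypothetical, not part of the script that follows):

```python
import os

# Largest file FAT32 can store: 2**32 - 1 bytes (4 GiB minus one byte).
FAT32_MAX_FILE_SIZE = 2**32 - 1


def find_oversized_files(directory, limit=FAT32_MAX_FILE_SIZE):
    """Return a sorted list of paths under `directory` larger than `limit` bytes."""
    oversized = []
    for root, _, files in os.walk(directory):
        for name in files:
            path = os.path.join(root, name)
            try:
                if os.path.getsize(path) > limit:
                    oversized.append(path)
            except OSError:
                # Broken symlinks or permission errors: skip the file.
                continue
    return sorted(oversized)
```

For example, `find_oversized_files("/mnt/usb/test")` would list the files a FAT32-formatted drive cannot hold. The `limit` parameter exists mainly so the function can be exercised with tiny files.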

Script to transfer stuff to the local FTP

Notes:

- `LOCAL_DIR` will obviously need to be changed.

- `FTP_HOST` is in a different IP range than the router's default because of my particular network setup. You'll need to check yours.

- `FTP_PASS` has to be in email address format. I'm not sure why, though it may be the old anonymous-FTP convention of sending your email address as the password.

- The "G" directory was assigned to me by the router, so I assume this is a common convention on these routers. I don't know why it creates a directory on the drive instead of writing to the drive's root; presumably some Windows drive-letter convention.
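Because several of these values differ from network to network, one option is to read them from environment variables instead of editing the constants each time. A minimal sketch, assuming hypothetical `FTP_OFFLOAD_*` variable names and falling back to the values hard-coded in the script:

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class TransferConfig:
    local_dir: str
    ftp_host: str
    ftp_user: str
    ftp_pass: str
    ftp_target_dir: str


def load_config(env=os.environ):
    """Build a TransferConfig from environment variables.

    The FTP_OFFLOAD_* names are hypothetical; rename them to suit your setup.
    Unset variables fall back to the script's hard-coded defaults.
    """
    return TransferConfig(
        local_dir=env.get("FTP_OFFLOAD_LOCAL_DIR", "/mnt/usb/test"),
        ftp_host=env.get("FTP_OFFLOAD_HOST", "192.168.86.1"),
        ftp_user=env.get("FTP_OFFLOAD_USER", "anonymous"),
        # Placeholder value: the router required an email-format password.
        ftp_pass=env.get("FTP_OFFLOAD_PASS", "anonymous@example.com"),
        ftp_target_dir=env.get("FTP_OFFLOAD_TARGET_DIR", "G"),
    )
```

The script's configuration constants could then be filled from `cfg = load_config()`, leaving the file itself untouched between machines.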

```python
#!/usr/bin/env python3

import ftplib
import hashlib
import os
import posixpath
import sys

# --- Configuration ---
# Adjust these settings to match your environment
LOCAL_DIR = "/mnt/usb/test"
FTP_HOST = "192.168.86.1"
FTP_USER = "anonymous"
FTP_PASS = "anonymous@example.com"  # Placeholder: any email-format string
FTP_TARGET_DIR = "G"

# Do not transfer files larger than this size (4 GiB - 1 byte, the FAT32 limit).
MAX_FILE_SIZE = 4294967295

# The size of chunks to use for reading and hashing files.
CHUNK_SIZE = 8192


# --- Helper Functions ---

def get_file_hash(file_path):
    """Returns the SHA-256 hex digest of a local file, or None on read errors."""
    sha256 = hashlib.sha256()
    try:
        with open(file_path, "rb") as f:
            # Read the file in chunks to handle large files efficiently
            for byte_block in iter(lambda: f.read(CHUNK_SIZE), b""):
                sha256.update(byte_block)
        return sha256.hexdigest()
    except IOError as e:
        print(f" - Error reading file for hashing: {e}")
        return None


def ftp_makedirs(ftp, path):
    """Recursively creates a directory structure on the FTP server."""
    parts = path.strip('/').split('/')
    current_dir = ''
    for part in parts:
        current_dir += '/' + part
        try:
            ftp.mkd(current_dir)
            print(f" - Created remote directory: {current_dir}")
        except ftplib.error_perm as e:
            # Error 550 often means the directory already exists.
            if "550" not in str(e):
                print(f" - FTP error while creating directory {current_dir}: {e}")
                raise


def upload_and_verify(ftp, local_path, remote_filename):
    """
    Uploads a file, verifies its integrity via hashing, and deletes
    the local file upon successful verification.
    """
    # 1. Hash the local file
    print(f" - Calculating hash for local file: {local_path}")
    local_hash = get_file_hash(local_path)
    if not local_hash:
        return False

    # 2. Upload it
    print(f" - Uploading to '{ftp.pwd()}/{remote_filename}'...")
    try:
        with open(local_path, 'rb') as f:
            ftp.storbinary(f'STOR {remote_filename}', f, CHUNK_SIZE)
        print(" - Upload complete.")
    except ftplib.all_errors as e:
        print(f" - !!! Upload failed: {e}")
        return False

    # 3. Download it again and hash what the server actually stored
    print(" - Verifying remote file integrity...")
    try:
        sha256_remote = hashlib.sha256()
        ftp.retrbinary(f'RETR {remote_filename}', sha256_remote.update, CHUNK_SIZE)
        remote_hash = sha256_remote.hexdigest()
    except ftplib.all_errors as e:
        print(f" - !!! Verification failed. Could not download remote file: {e}")
        return False

    # 4. Compare hashes and delete the local file if they match
    print(f" - Local Hash: {local_hash}")
    print(f" - Remote Hash: {remote_hash}")
    if local_hash == remote_hash:
        print(" - ✅ Integrity check PASSED. Hashes match.")
        try:
            os.remove(local_path)
            print(f" - Successfully deleted local file: {local_path}")
            return True
        except OSError as e:
            print(f" - !!! Error deleting local file: {e}")
            return False
    else:
        print(" - ❌ Integrity check FAILED. Hashes do not match.")
        print(" - Deleting corrupt file from FTP server...")
        try:
            ftp.delete(remote_filename)
            print(f" - Remote file '{remote_filename}' deleted.")
        except ftplib.all_errors as e:
            print(f" - !!! Could not delete corrupt remote file: {e}")
        return False


def is_within(path, parent):
    """True if path is parent itself or somewhere beneath it."""
    return path == parent or path.startswith(parent.rstrip(os.sep) + os.sep)


def main():
    print("Starting FTP transfer process...")
    if not os.path.isdir(LOCAL_DIR):
        print(f"Error: Local directory '{LOCAL_DIR}' does not exist.")
        sys.exit(1)

    # --- Pass 1: Find directories containing files too large for FAT32 ---
    print("\n--- Pass 1: Scanning for oversized files ---")
    dirs_to_skip = set()
    for root, _, files in os.walk(LOCAL_DIR):
        for filename in files:
            file_path = os.path.join(root, filename)
            try:
                if os.path.getsize(file_path) > MAX_FILE_SIZE:
                    print(f"Oversized file found: {file_path}")
                    print(f" -> Marking directory for skipping: {root}")
                    dirs_to_skip.add(root)
                    break
            except OSError as e:
                print(f"Could not access {file_path}: {e}")
    print("--- Pass 1 Complete ---")

    # --- Pass 2: Connect to FTP and transfer valid files ---
    print("\n--- Pass 2: Connecting to FTP and transferring files ---")
    try:
        with ftplib.FTP(FTP_HOST, timeout=30) as ftp:
            ftp.login(FTP_USER, FTP_PASS)
            print(f"Successfully connected to {FTP_HOST}.")

            # Create and change to the base target directory
            ftp_makedirs(ftp, FTP_TARGET_DIR)
            ftp.cwd(FTP_TARGET_DIR)
            # Remember the base once: ftp.pwd() changes as we cwd into subdirectories.
            remote_base = ftp.pwd()
            print(f"Changed to remote base directory: {remote_base}")

            for root, dirs, files in os.walk(LOCAL_DIR, topdown=True):
                # Skip directories in (or under) the skip list, matching whole path components
                if any(is_within(root, skip_dir) for skip_dir in dirs_to_skip):
                    print(f"\n⏭️ Skipping directory and its contents: {root}")
                    dirs[:] = []  # Prune subdirectories from the walk
                    continue

                print(f"\nProcessing directory: {root}")

                # Mirror the local directory structure on the FTP server
                remote_subdir = os.path.relpath(root, LOCAL_DIR)
                remote_path = remote_base
                if remote_subdir != '.':
                    remote_path = posixpath.join(remote_base, *remote_subdir.split(os.sep))
                    ftp_makedirs(ftp, remote_path)
                ftp.cwd(remote_path)

                # Process each file in the current valid directory
                for filename in files:
                    local_file_path = os.path.join(root, filename)
                    if os.path.getsize(local_file_path) > MAX_FILE_SIZE:
                        continue
                    print(f"\n📂 Processing file: {filename}")
                    upload_and_verify(ftp, local_file_path, filename)

    except ftplib.all_errors as e:
        print(f"\nFTP Error: {e}")
    except Exception as e:
        print(f"\nAn unexpected error occurred: {e}")

    print("\nProcess complete.")


if __name__ == "__main__":
    main()
```

Now I have an easy script I can use to periodically offload directories full of files that I'm not ready to delete but don't want on my laptop.
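Since the script deletes each local file once its hash checks out, it can be worth previewing a run first. The following is a minimal dry-run sketch that mirrors the two passes without opening an FTP connection: any directory holding a file over the FAT32 limit is skipped along with its subtree, and every other file is mapped to a remote directory. The name `plan_transfer` and the default `remote_base="/G"` are assumptions; the real script uses whatever `ftp.pwd()` reports after changing into `FTP_TARGET_DIR`.

```python
import os
import posixpath

MAX_FILE_SIZE = 2**32 - 1  # FAT32 per-file limit


def _is_within(path, parent):
    """True if `path` is `parent` itself or lies underneath it."""
    return path == parent or path.startswith(parent.rstrip(os.sep) + os.sep)


def plan_transfer(local_dir, remote_base="/G", limit=MAX_FILE_SIZE):
    """Dry run: return (uploads, skipped_dirs) without touching any server.

    `uploads` is a list of (local_path, remote_dir) pairs.
    """
    # Pass 1: any directory holding an oversized file is skipped with its subtree.
    skipped = set()
    for root, _, files in os.walk(local_dir):
        for name in files:
            try:
                if os.path.getsize(os.path.join(root, name)) > limit:
                    skipped.add(root)
                    break
            except OSError:
                continue

    # Pass 2: map each remaining file to a remote directory under remote_base.
    uploads = []
    for root, dirs, files in os.walk(local_dir):
        if any(_is_within(root, s) for s in skipped):
            dirs[:] = []  # prune the subtree from the walk
            continue
        rel = os.path.relpath(root, local_dir)
        if rel == ".":
            remote_dir = remote_base
        else:
            remote_dir = posixpath.join(remote_base, *rel.split(os.sep))
        for name in sorted(files):
            uploads.append((os.path.join(root, name), remote_dir))
    return uploads, sorted(skipped)
```

Matching skipped directories by whole path components means a skipped `big/` does not also swallow a sibling named `bigger/`, and building remote paths from a fixed `remote_base` keeps them independent of the server's current working directory.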