One interesting thing about the contrast between infrastructure and security is the expectation of open-source software. When a common problem arises that we all experience, a company will launch a product to solve this problem. In infrastructure, typically the core tool is open-source and free to use, with some value-add services or hosting put behind licensing and paid support contracts. On the security side, the expectation seems to be that the base technology will be open-source but any refinement is not. If I find a great tool to manage SSH certificates, I have to pay for it and I can't see how it works. If I rely on a company to handle my login, I can ask for their security audits (sometimes) but the actual nuts and bolts of "how they solved this problem" are obscured from me.

Instead of "building on the shoulders of giants", it's more like "You've never made a car before. So you make your first car, load it full of passengers, send it down the road until it hits a pothole and detonates." Then someone will wander by and explain how what you did was wrong. People working on their first car to send down the road become scared because they have another example of how to make the car incorrectly, but are not that much closer to a correct one given the nearly endless complexity. They may have many examples of "car" but they don't know if this blueprint is a good car or a bad car (or an old car that was good and is now bad).

In order to be good at security, one has to see good security first. I can understand in the abstract how SSH certificates should work, but to implement it I would have to go through the work of someone with a deep understanding of the problem to grasp the specifics. I may understand in the abstract how OAuth works, but the low level "how do I get this value/store it correctly/validate it correctly" is different. You can tell me until you are blue in the face how to do logins wrong, but I have very few criteria by which I can tell if I am doing it right.

To be clear there is no shortage of PDFs and checklists telling me how my security should look at an abstract level. Good developers will look at those checklists, look at their code, squint and say "yeah I think that makes sense". They don't necessarily have the mindset of "how do I think like someone attempting to break this code", in part because they may have no idea how the code works. Their code presents the user a screen, they receive a token, that token is used for other things and they got an email address in the process. The massive number of moving parts they just used is obscured from them, code they'll never see.

Just to do session cookies correctly, you need to know about and check the following things:

- Is the expiration good and are you checking it on the server?

- Have you checked that you never send the Cookie header back to the client and break the security model? Can you write a test for this? How time consuming will that test be?

- Have you set the Secure flag? Did you set the SameSite flag? Can you use the HttpOnly flag? Did you set it?

- Did you scope the domain and path?

- Did you write checks to ensure you aren't logging or storing the cookies wrong?

That is so many places to get just one thing wrong.
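To make the checklist concrete, here is a minimal sketch of what "right" might look like using only the Python standard library. The cookie name `session`, the one-hour lifetime and the helper names are assumptions for illustration, not a recommendation for any particular framework.

```python
from http.cookies import SimpleCookie
import secrets

SESSION_MAX_AGE_SECONDS = 3600  # assumed policy: sessions live for one hour


def build_session_cookie_header(session_id: str) -> str:
    """Return a Set-Cookie header value with the flags from the checklist."""
    cookie = SimpleCookie()
    cookie["session"] = session_id
    morsel = cookie["session"]
    morsel["secure"] = True      # only sent over HTTPS
    morsel["httponly"] = True    # not readable from page JavaScript
    morsel["samesite"] = "Lax"   # restricts cross-site sending
    morsel["path"] = "/"         # scope the path explicitly
    morsel["max-age"] = SESSION_MAX_AGE_SECONDS
    # No Domain attribute is set, so the cookie stays bound to the exact
    # host that issued it instead of being shared with subdomains.
    return morsel.OutputString()


def session_is_expired(issued_at: float, now: float) -> bool:
    """Server-side expiry check; the browser's Max-Age is only a hint."""
    return now - issued_at >= SESSION_MAX_AGE_SECONDS


header = build_session_cookie_header(secrets.token_urlsafe(32))
print("Set-Cookie:", header)
```

Even this small sketch leaves the logging and "never echo the Cookie header" checks untouched, which is sort of the point.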

We have to come up with a better way of throwing flares up in people's way. More aggressive deprecation, more frequent spec bumps, some way of communicating to people "the way you have done things is legacy and you should look at something else". On the other side we need a way to say "this is a good way to do it" and "that is a bad way to do it" with code I can see. Pen-testing, scanners, these are all fine, but without some concept of "blessed good examples" it can feel like patching a ship in the dark. I closed that hole, but I don't know how many more there are until a tool or attacker finds it.

I'm gonna go through four examples of critical load-bearing security-related tooling or technology that is set up wrong by default or very difficult to do correctly. This is stuff everyone gets nervous when they touch because it doesn't help you set it up right. If we want people to do this stuff right, the spec needs to be more opinionated about right and wrong and we need to show people what right looks like on a code level.

SSH Keys

This entire field of modern programming is built on the back of SSH keys. Starting in 1995 and continuing now with OpenSSH, the protocol uses an asymmetric encryption process with the Diffie-Hellman (DH) key exchange algorithm to form a shared secret key for the SSH connection. SFTP, deploying code from CI/CD systems, accessing servers, using git, all of this happens largely on the back of SSH keys. Now you might be thinking "wait, SSH keys are great".

At a small scale SSH is easy and effortless. ssh-keygen -t rsa, select where to store it and if you want a passphrase. ssh-copy-id username@remoteserverip to move it to the remote box, assuming you set up the remote box with cloud-init or ansible or whatever. At the end of every ssh tutorial there is a paragraph that reads something like the following: "please ensure you rotate, audit and check all SSH keys for permissions". This is where things get impossible.

SSH keys don't help administrators do the right thing. Here's all the things I don't know about the SSH key I would need to know to do it correctly:

- When was the key made? Is this a new SSH key or are they reusing a personal one or one from another job? I have no idea.

- Was this key secured with a passphrase? Again like such a basic thing, can I ensure all the keys on my server were set up with a passphrase? Like just include some flag on the public key that says "yeah the private key has a passphrase". I understand you could fake it but the massive gain in security for everyone outweighs the possibility that someone manipulates a public key to say "this has a passphrase".

- Expiration. I need a value that I can statelessly query to say "is this public key expired or not" and also to check when enrolling public keys "does this key live too long".
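To illustrate how little a public key line carries, here is a rough sketch that pulls out everything it can. The key is a dummy built in code (32 zero bytes, not a real key), the helper names are made up for this example, and it assumes a plain `type blob comment` line with no leading options.

```python
import base64
import hashlib
import struct


def _ssh_string(data: bytes) -> bytes:
    """Length-prefixed string as used inside the public key blob."""
    return struct.pack(">I", len(data)) + data


def make_dummy_public_key_line(comment: str) -> str:
    """Build a syntactically valid ssh-ed25519 line from 32 zero bytes."""
    blob = _ssh_string(b"ssh-ed25519") + _ssh_string(bytes(32))
    return f"ssh-ed25519 {base64.b64encode(blob).decode()} {comment}"


def describe_public_key(line: str) -> dict:
    """Return every fact we can recover from one public key line."""
    key_type, b64_blob, *rest = line.split(None, 2)
    digest = hashlib.sha256(base64.b64decode(b64_blob)).digest()
    fingerprint = "SHA256:" + base64.b64encode(digest).decode().rstrip("=")
    return {
        "type": key_type,
        "comment": rest[0] if rest else "",  # free text, set by whoever made it
        "fingerprint": fingerprint,
    }


info = describe_public_key(make_dummy_public_key_line("alice@laptop"))
print(info)
# Nothing here says when the key was created, whether the private half
# has a passphrase, or when the key should stop working.
```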

This isn't just a "what-if" conversation. I've seen this and I bet you have too, or would if you looked at your servers.

- Many keys on servers are unused and represent access that was never properly terminated or shouldn't have been granted. I find across most jobs it's like 10% of the keys that ever get used.

- Nobody knows who has the corresponding private keys. We understand the user who made them, but we don't know where they are now.

- Alright so we use certificates! Well except they're special to OpenSSH, make auditing SSH key based access impossible since you don't know what keys the server will accept by looking at it and all the granting and revoking tooling is on you to build.

OpenSSH Certificates solve almost all these problems. You get expiration, limiting commands, limiting IP addresses etc. It's a step forward but we're not using them in small and medium orgs due to the complexity of setup, and we need to port some of these security concerns down the chain. It's exactly what I was talking about in the beginning. The default experience is terrible because of backwards compatibility, and the 1% who know of the existence of SSH Certificates and can operationally support the creation of this mission-critical tooling reap the benefits.
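For a sense of what the certificate path involves, here is a small sketch that assembles an `ssh-keygen` signing command with an expiry, a source address restriction and a forced command. The file paths, key ID and principal are hypothetical; only the command line is built here, nothing is executed.

```python
import shlex
from typing import Optional


def build_signing_command(
    ca_key_path: str,
    user_pubkey_path: str,
    key_id: str,
    principal: str,
    validity: str = "+1d",
    source_address: Optional[str] = None,
    force_command: Optional[str] = None,
) -> list:
    """Assemble the argv for signing a user key with a CA key."""
    argv = [
        "ssh-keygen",
        "-s", ca_key_path,   # CA private key used to sign
        "-I", key_id,        # identity recorded in the certificate
        "-n", principal,     # login name(s) the certificate is valid for
        "-V", validity,      # validity interval, here "one day from now"
    ]
    if source_address:
        argv += ["-O", f"source-address={source_address}"]
    if force_command:
        argv += ["-O", f"force-command={force_command}"]
    argv.append(user_pubkey_path)
    return argv


cmd = build_signing_command(
    "/etc/ssh/ca/user_ca",            # hypothetical CA key location
    "/tmp/alice_id_ed25519.pub",      # hypothetical user public key
    key_id="alice@example.com",
    principal="alice",
    source_address="10.0.0.0/8",
)
print(shlex.join(cmd))
```

Running the command is the easy part. The server also has to be told to trust the CA (the `TrustedUserCAKeys` setting in `sshd_config`), and someone has to build the issuing, renewal and revocation workflow around it.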

So sure if I set up all of the infrastructure to do all the pieces, I can enforce ssh key rotation. I'll check the public key into object storage, sync it with all my servers, check the date the key was entered and remove it after a certain date. But seriously? We can't make a new version of the SSH key with some metadata? The entire internet operates off SSH keys and they're a half-done idea, fixed through the addition of certificates nobody uses cause writing the tooling to handle the user certificate process is a major project where if you break it, you can't get into the box.
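The homemade rotation described above might look roughly like this. It is only a sketch: the 90-day policy, the inventory shape (public key line mapped to the date it was enrolled) and the function name are all assumptions, and the part that actually syncs `authorized_keys` files is left out.

```python
from datetime import date, timedelta

MAX_KEY_AGE = timedelta(days=90)  # assumed rotation policy


def split_keys_by_age(enrolled: dict, today: date) -> tuple:
    """Return (keep, expired) lists of key lines based on enrollment date.

    The date has to come from our own inventory because the key itself
    carries no creation or expiry metadata.
    """
    keep, expired = [], []
    for key_line, enrolled_on in enrolled.items():
        if today - enrolled_on > MAX_KEY_AGE:
            expired.append(key_line)
        else:
            keep.append(key_line)
    return keep, expired


inventory = {
    "ssh-ed25519 AAAA...dummy1 alice@laptop": date(2024, 1, 10),
    "ssh-ed25519 AAAA...dummy2 bob@desktop": date(2024, 5, 1),
}
keep, expired = split_keys_by_age(inventory, today=date(2024, 6, 1))
print("keep:", keep)
print("remove:", expired)
```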

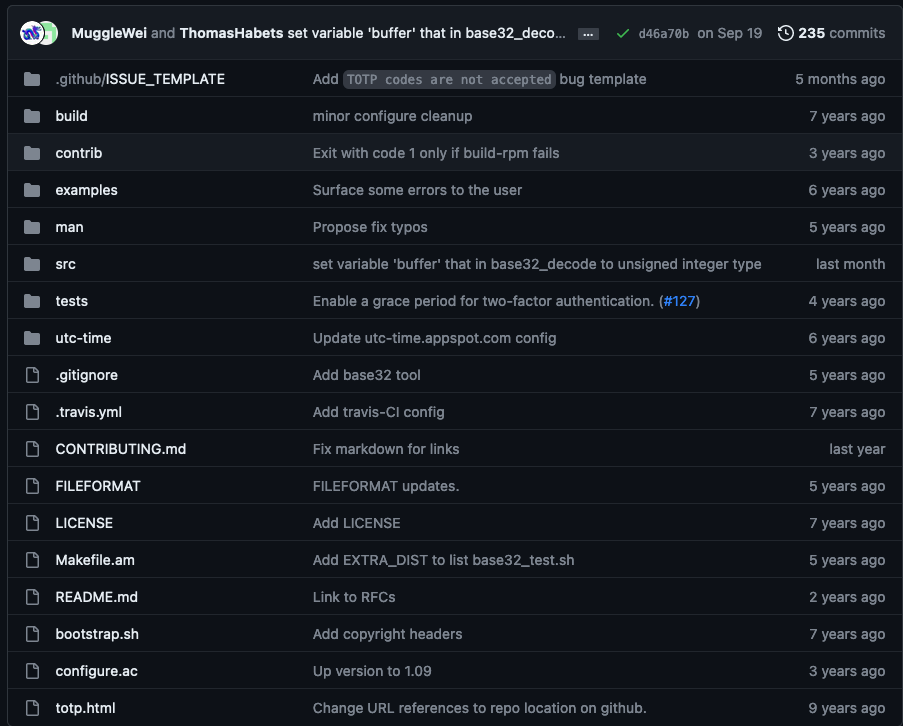

This is a crazy state of affairs. We know SSH keys live in infrastructure forever, we know they're used for way too long all over the place and we know the only way to enforce rotation patterns is through the use of expiration. We also know that passphrases are absolutely essential for the use of keys. Effectively to use SSH keys you need to stick a PAM in there to enforce 2FA like libpam-google-authenticator. BTW, talking about "critical infrastructure not getting a ton of time", this is the repo of the package every tutorial recommends. Maybe nothing substantial has happened in 3 years but feels a little unlikely.

Mobile Device Management/Device Scanning/Network MITM Scanning

Nothing screams "security theater" to me like the absolutely excessive MDM that has come to plague major companies. I have had the "joy" of working for 3 large companies that went all-in on this stuff and each time have gotten the pleasure of rip your hair out levels of frustration. I'm not an admin on my laptop, so now someone who has no idea what my job is or what I need to do it gets to decide what software I get to install. All my network traffic gets scanned, so forget privacy on the device. At random intervals my laptop becomes unusable because every file on the device needs to "get scanned" for something.

Now in theory the way this stuff is supposed to work is a back and forth between security, IT and the users. In practice it's a one-way street that once the stupid shit gets bought and turned on, it never gets turned off. All of the organizational incentives are there to keep piling this worthless crap on previously functional machines and then almost dare the employee to get any actual work done. It just doesn't make any sense to take this heavy of a hand with this stuff.

What about stuff exploiting employee devices?

I mean if you have a well-researched paper which shows that this stuff actually makes a difference, I'd love to see it. Mostly it seems from my reading like vendors repeating sales talking points to IT departments until they accept it as gospel truth, mixed with various audits requiring the tooling be on. Also we know from recent security exploits that social engineering against IT Helpdesk is a new strategy that is paying off, so assuming your IT pros will catch the problems that normal users won't is clearly a flawed strategy.

The current design is so user-hostile and so aggressively invasive that there is just no way to think of it other than "my employer thinks I'm an idiot". So often in these companies you are told the strategies to work around stuff. I once worked with a team where everybody used a decommissioned desktop tucked away in a closet connected to an Ethernet port with normal internet access to do actual work. They were SSHing into it from their locked-down work computers because they didn't have to open a ticket every time they needed to do anything, and they hid the desktop's existence from IT.

I'm not blaming the people turning it on

The incentives here are all wrong. There's no reward in security for not turning on the annoying or invasive feature so rank and file people are happy. On the off chance that is the vector by which you are attacked, you will be held responsible for that decision. So why not turn it all on? I totally understand it, especially when we all know every company has a VIP list of people for whom this shit isn't turned on, so the people who make the decisions about this aren't actually bearing the cost of it being on.

"Don't use your work laptop for personal stuff": hey before you hit me up with this gem, save it. I spend too many hours of my life at work to never have the two overlap. I need to write emails, look up stuff, schedule appointments, so just take this horrible know-it-all attitude and throw it away. People use work devices for personal stuff and telling them not to is a waste of oxygen.

JWTs

You have users and you have services. The users need to access the things they are allowed to access, the services need to be able to talk to each other and share information in a way where you know the information wasn't tampered with. It's JSON, but special limited edition JSON. You have a header, which says what it is (a JWT) and the signing algorithm being used.

    {
      "alg": "HS256",
      "typ": "JWT"
    }

You have a payload with claims. There are predefined (still optional) claims and then public and private claims. So here are some common ones:

- "iss" (Issuer) Claim: identifies the principal that issued the JWT

- "sub" (Subject) Claim: The "sub" (subject) claim identifies the principal that is the subject of the JWT.

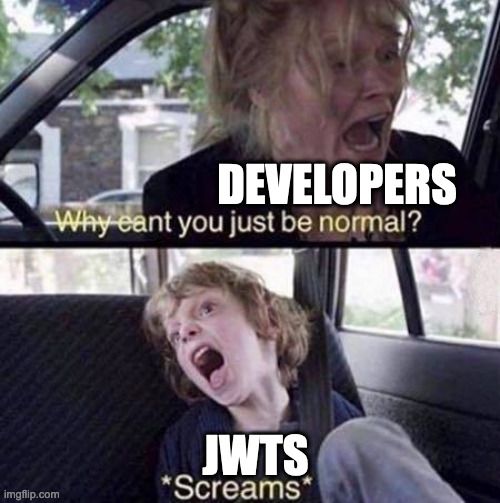

You can see them all here. The diagram below shows the design source
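As a quick illustration of the structure described above, this sketch splits a token into its parts and reads the header and payload without any key. The sample claims and the placeholder signature are made up; the point is only that the first two segments are base64url-encoded JSON that anyone can read.

```python
import base64
import json


def b64url_encode(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode()


def b64url_decode(segment: str) -> bytes:
    # Restore the padding that the JWT encoding strips off.
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def peek(token: str) -> tuple:
    """Return (header, payload) WITHOUT verifying the signature."""
    header_b64, payload_b64, _signature = token.split(".")
    return json.loads(b64url_decode(header_b64)), json.loads(b64url_decode(payload_b64))


# Hypothetical token: real header and payload, placeholder signature.
sample = ".".join([
    b64url_encode(json.dumps({"alg": "HS256", "typ": "JWT"}).encode()),
    b64url_encode(json.dumps({"iss": "auth.example.com", "sub": "user-123"}).encode()),
    "signature-not-checked",
])

header, payload = peek(sample)
print(header)
print(payload)
```

Reading the payload this way is fine for debugging, but nothing returned by `peek` should be trusted until the signature has been verified.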

Seems great. What's the problem?

See that middle part where both things need access to the same secret key? That's the problem. The service that makes the JWT and the service that verifies the JWT are both reading and using the same key, so there's nothing stopping me from making my own JWT with new insane permissions on application 2 and having it get verified. That's only the beginning of the issues with JWTs. This isn't called out to people, so when you are dealing with micro-services or multiple APIs where you pass around JWTs, often there is an assumption of security where one doesn't exist.
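Here is a small sketch of that problem using nothing but the standard library. The secret, the claim names and the two "applications" are hypothetical; the HS256 signing itself is just HMAC-SHA256 over the first two segments.

```python
import base64
import hashlib
import hmac
import json

SHARED_SECRET = b"same-secret-on-every-service"  # hypothetical shared key


def _b64url(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode()


def _b64url_decode(segment: str) -> bytes:
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def sign_hs256(claims: dict, secret: bytes) -> str:
    header = {"alg": "HS256", "typ": "JWT"}
    signing_input = (
        _b64url(json.dumps(header, separators=(",", ":")).encode())
        + "."
        + _b64url(json.dumps(claims, separators=(",", ":")).encode())
    )
    signature = hmac.new(secret, signing_input.encode(), hashlib.sha256).digest()
    return signing_input + "." + _b64url(signature)


def verify_hs256(token: str, secret: bytes) -> dict:
    signing_input, _, signature_b64 = token.rpartition(".")
    expected = hmac.new(secret, signing_input.encode(), hashlib.sha256).digest()
    if not hmac.compare_digest(expected, _b64url_decode(signature_b64)):
        raise ValueError("bad signature")
    return json.loads(_b64url_decode(signing_input.split(".")[1]))


# Application 1 is the "real" issuer.
legit = sign_hs256({"sub": "alice", "role": "viewer"}, SHARED_SECRET)

# Application 2 only needs to verify, but it holds the same key,
# so it (or anyone who compromises it) can mint whatever it wants.
forged = sign_hs256({"sub": "alice", "role": "admin"}, SHARED_SECRET)

print(verify_hs256(legit, SHARED_SECRET))
print(verify_hs256(forged, SHARED_SECRET))  # verifies just as happily
```

With an asymmetric algorithm the verifier would hold only a public key, so the second half of this example would not be possible.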

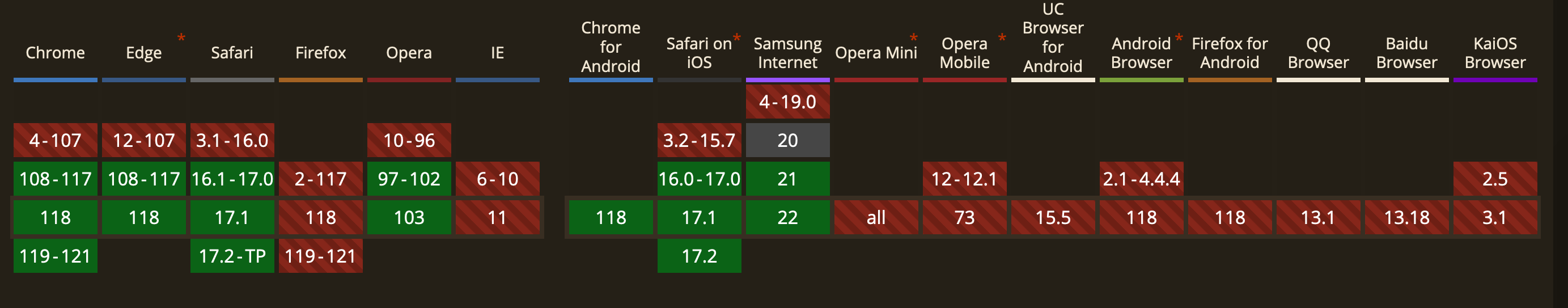

Asymmetric JWT implementations exist and work well, but so often people do not think about it or realize such an option exists. There is no reason to keep on-boarding people with this default dangerous design assuming they will "figure out" the correct way to do things later. We see this all over the place with JWTs though.

- Looking at the alg claim in the header and using it rather than hardcoding the algorithm that your application uses. Easy mistake to make, I've seen it a lot.

- Encryption vs signatures. So often with JWTs people think the payload is encrypted. Can we warn them to use JWEs? This is such a common misunderstanding among people starting with JWTs it seems insane to me to not warn people somehow.

- Should I use a JWT? Or a JWE? Should I sign AND encrypt the thing where the JWS (the signed version of the JWT) is the encrypted payload of the JWE? Are normal people supposed to make this decision?

- Who in the hell said none should be a supported algorithm? Are you drunk? Just don't let me use a bad one. ("Well it is the right decision for my app because the encryption channel means the JWT doesn't matter" "Well then don't check the signature and move on if you don't care.")

- "several Javascript Object Signing and Encryption (JOSE) libraries fail to validate their inputs correctly when performing elliptic curve key agreement (the 'ECDH-ES' algorithm). An attacker that is able to send JWEs of its choosing that use invalid curve points and observe the cleartext outputs resulting from decryption with the invalid curve points can use this vulnerability to recover the recipient's private key." Oh sure that's a problem I can check for. Thanks for the help.

- Don't let the super important claims like expiration be optional. Come on folks, why let people pick and choose like that? It's just gonna cause problems. OpenID Connect went through great lengths to improve the security properties of a JWT. For example, the protocol mandates the use of the exp, iss and aud claims. To do it right, I need those claims, so don't make them optional.
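A sketch of what the stricter behavior asked for above could look like: the algorithm is pinned in code instead of read from the header, `none` can never pass, and `exp`, `iss` and `aud` are mandatory. It is standard-library only and deliberately simplified (HS256 only, `aud` treated as a single string); `make_token` and `verify_strict` are names invented for this example, not any library's API.

```python
import base64
import hashlib
import hmac
import json

EXPECTED_ALG = "HS256"                   # pinned in code, never taken from the token
REQUIRED_CLAIMS = ("exp", "iss", "aud")  # treated as mandatory here


def _b64url(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode()


def _b64url_decode(segment: str) -> bytes:
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def make_token(header: dict, claims: dict, secret: bytes) -> str:
    """Demo helper that signs with HMAC-SHA256 whatever the header claims."""
    signing_input = _b64url(json.dumps(header).encode()) + "." + _b64url(json.dumps(claims).encode())
    signature = hmac.new(secret, signing_input.encode(), hashlib.sha256).digest()
    return signing_input + "." + _b64url(signature)


def verify_strict(token: str, secret: bytes, issuer: str, audience: str, now: float) -> dict:
    header_b64, payload_b64, signature_b64 = token.split(".")
    header = json.loads(_b64url_decode(header_b64))
    if header.get("alg") != EXPECTED_ALG:
        raise ValueError("unexpected algorithm in header")
    expected = hmac.new(secret, f"{header_b64}.{payload_b64}".encode(), hashlib.sha256).digest()
    if not hmac.compare_digest(expected, _b64url_decode(signature_b64)):
        raise ValueError("bad signature")
    claims = json.loads(_b64url_decode(payload_b64))
    missing = [name for name in REQUIRED_CLAIMS if name not in claims]
    if missing:
        raise ValueError(f"missing required claims: {missing}")
    if claims["exp"] <= now:
        raise ValueError("token expired")
    if claims["iss"] != issuer or claims["aud"] != audience:
        raise ValueError("wrong issuer or audience")
    return claims


secret = b"demo-secret"  # hypothetical key for the example
token = make_token(
    {"alg": "HS256", "typ": "JWT"},
    {"iss": "auth.example.com", "aud": "billing-api", "exp": 2_000},
    secret,
)
print(verify_strict(token, secret, "auth.example.com", "billing-api", now=1_000))
```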

Quick, what's the right choice?

- HS256 - HMAC using SHA-256 hash algorithm

- HS384 - HMAC using SHA-384 hash algorithm

- HS512 - HMAC using SHA-512 hash algorithm

- ES256 - ECDSA signature algorithm using SHA-256 hash algorithm

- ES256K - ECDSA signature algorithm with secp256k1 curve using SHA-256 hash algorithm

- ES384 - ECDSA signature algorithm using SHA-384 hash algorithm

- ES512 - ECDSA signature algorithm using SHA-512 hash algorithm

- RS256 - RSASSA-PKCS1-v1_5 signature algorithm using SHA-256 hash algorithm

- RS384 - RSASSA-PKCS1-v1_5 signature algorithm using SHA-384 hash algorithm

- RS512 - RSASSA-PKCS1-v1_5 signature algorithm using SHA-512 hash algorithm

- PS256 - RSASSA-PSS signature using SHA-256 and MGF1 padding with SHA-256

- PS384 - RSASSA-PSS signature using SHA-384 and MGF1 padding with SHA-384

- PS512 - RSASSA-PSS signature using SHA-512 and MGF1 padding with SHA-512

- EdDSA - Both Ed25519 signature using SHA-512 and Ed448 signature using SHA-3 are supported. Ed25519 and Ed448 provide 128-bit and 224-bit security respectively.

You are holding it wrong. Don't tell me to issue and use x509 certificates. Trying that for micro-services cut years off my life.

But have you tried XML DSIG?

I need to both give something to the user that I can verify that tells me what they're supposed to be able to do and I need some way of having services pass the auth back and forth. So many places have adopted JWTs because JSON = easy to handle. If there is a right (or wrong) algorithm, guide me there. It is fine to say "this is now deprecated". That's a totally normal thing to tell developers and it happens all the time. But please help us all do the right thing.

Login

Alright I am making a very basic application. It will provide many useful features for users around the world. I just need them to be able to log into the thing. I guess username and password right? I want users to have a nice, understood experience.

No you stupid idiot passwords are fundamentally broken

Well you decide to try anyway. You find this helpful cheat sheet.

- Use Argon2id with a minimum configuration of 19 MiB of memory, an iteration count of 2, and 1 degree of parallelism.

- If Argon2id is not available, use scrypt with a minimum CPU/memory cost parameter of (2^17), a minimum block size of 8 (1024 bytes), and a parallelization parameter of 1.

- For legacy systems using bcrypt, use a work factor of 10 or more and with a password limit of 72 bytes.

- If FIPS-140 compliance is required, use PBKDF2 with a work factor of 600,000 or more and set with an internal hash function of HMAC-SHA-256.

- Consider using a pepper to provide additional defense in depth (though alone, it provides no additional secure characteristics).

None of these mean anything to you but that's fine. It looks pretty straightforward at first.

    >>> from argon2 import PasswordHasher
    >>> ph = PasswordHasher()
    >>> hash = ph.hash("correct horse battery staple")
    >>> hash  # doctest: +SKIP
    '$argon2id$v=19$m=65536,t=3,p=4$MIIRqgvgQbgj220jfp0MPA$YfwJSVjtjSU0zzV/P3S9nnQ/USre2wvJMjfCIjrTQbg'
    >>> ph.verify(hash, "correct horse battery staple")
    True
    >>> ph.check_needs_rehash(hash)
    False
    >>> ph.verify(hash, "Tr0ub4dor&3")
    Traceback (most recent call last):
      ...
    argon2.exceptions.VerifyMismatchError: The password does not match the supplied hash

Got it. But then you see this.

Rather than a simple work factor like other algorithms, Argon2id has three different parameters that can be configured. Argon2id should use one of the following configuration settings as a base minimum which includes the minimum memory size (m), the minimum number of iterations (t) and the degree of parallelism (p).

m=47104 (46 MiB), t=1, p=1 (Do not use with Argon2i)

m=19456 (19 MiB), t=2, p=1 (Do not use with Argon2i)

m=12288 (12 MiB), t=3, p=1

m=9216 (9 MiB), t=4, p=1

m=7168 (7 MiB), t=5, p=1

What the fuck does that mean. Do I want more memory and fewer iterations? That doesn't sound right. Then you end up here: https://www.rfc-editor.org/rfc/rfc9106.html which says I should be using argon2.profiles.RFC_9106_HIGH_MEMORY. Ok but it warns me that it requires 2 GiB, which seems like a lot? How does that scale with a lot of users? Does it change? Should I do low_memory?
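One way to get a feel for the scaling question is plain arithmetic. This sketch assumes the memory parameter `m` is in KiB (as in the list above), that each in-flight hash holds its full memory for its duration, and that the only thing multiplying it is how many logins are being hashed at the same moment. The figure of 100 simultaneous logins is picked purely for illustration.

```python
# (memory in KiB, iterations, parallelism) from the list quoted above
OWASP_MINIMUMS = [
    (47104, 1, 1),
    (19456, 2, 1),
    (12288, 3, 1),
    (9216, 4, 1),
    (7168, 5, 1),
]

RFC_9106_HIGH_MEMORY_KIB = 2 * 1024 * 1024  # the 2 GiB profile, expressed in KiB


def peak_memory_mib(memory_cost_kib: int, concurrent_hashes: int) -> float:
    """Rough upper bound on memory used by hashing alone, in MiB."""
    return memory_cost_kib * concurrent_hashes / 1024


CONCURRENT_LOGINS = 100  # assumed burst size, not a measurement

for m, t, p in OWASP_MINIMUMS:
    total = peak_memory_mib(m, CONCURRENT_LOGINS)
    print(f"m={m} KiB, t={t}, p={p}: about {total:,.0f} MiB for {CONCURRENT_LOGINS} logins at once")

high = peak_memory_mib(RFC_9106_HIGH_MEMORY_KIB, CONCURRENT_LOGINS)
print(f"2 GiB profile: about {high / 1024:,.0f} GiB for {CONCURRENT_LOGINS} logins at once")
```

So the cost does not grow with the number of registered users, only with how many hashes run concurrently, which is something you can cap with a queue or rate limit. Whether trading memory for iterations is the right call for your hardware is exactly the sort of decision the cheat sheet leaves to you.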

Alright I'm sufficiently scared off. I'll use something else.

I've heard about passkeys and they seem easy enough. I'll do that.

Alright well that's ok. I got....most of the big ones.

If you have Windows 10 or up, you can use passkeys. To store passkeys, you must set up Windows Hello. Windows Hello doesn’t currently support synchronization or backup, so passkeys are only saved to your computer. If your computer is lost or the operating system is reinstalled, you can’t recover your passkeys.

Nevermind I can't use passkeys. Good to know.

Well if you put the passkeys in 1password then it works

Great so passkeys cost $5 a month per user and they get to pay for the privilege of using my site. Sounds totally workable.

OpenID Connect/OAuth

Ok so first I need to figure out what kind of this thing I need. I'll just read through all the initial information I need to make this decision.

- Proof Key for Code Exchange by OAuth Public Clients

- OAuth 2.0 Threat Model and Security Considerations

- OAuth 2.0 Security Best Current Practice

- JSON Web Token (JWT) Profile for OAuth 2.0 Access Tokens

- OAuth 2.0 for Native Apps

- OAuth 2.0 for Browser-Based Apps

- OAuth 2.0 Device Authorization Grant

- OAuth 2.0 Authorization Server Metadata

- OAuth 2.0 Dynamic Client Registration Protocol

- OAuth 2.0 Dynamic Client Registration Management Protocol

- OAuth 2.0 Pushed Authorization Requests

- OAuth 2.0 Mutual-TLS Client Authentication and Certificate-Bound Access Tokens

- The OAuth 2.0 Authorization Framework: JWT-Secured Authorization Request (JAR)

- Assertion Framework for OAuth 2.0 Client Authentication and Authorization Grants

- JSON Web Token (JWT) Profile for OAuth 2.0 Client Authentication and Authorization Grants

- Security Assertion Markup Language (SAML) 2.0 Profile for OAuth 2.0 Client Authentication and Authorization Grants

- The OAuth 2.0 Authorization Framework: Bearer Token Usage

- OAuth 2.0 Token Revocation

- OAuth 2.0 Token Introspection

- OpenID Connect Session Management

- OpenID Connect Front-Channel Logout

- OpenID Connect Back-Channel Logout

- OpenID Connect Federation

- OpenID Connect SSE

- OpenID Connect CAEP

Now that I've completed a master's degree in login, it's time for me to begin.

Apple

Sign in with Apple is only supported with paid developer accounts. I don't really wanna pay $100 a year for login.

Facebook/Google/Microsoft

So each one of these requires me to create an account, set up their tokens and embed the button. Not a huge deal, but I can never get rid of any of these and if one was to get deactivated, it would be a problem. See when Login with Twitter stopped being a thing people could use. Plus with Google and Microsoft they also offer email services, so presumably a lot of people will be using their email address, then I've gotta create a flow on the backend where I can associate the same user with multiple email addresses. Fine, no big deal.

I'm also loading Javascript from these companies on my page and telling them who my customers are. This is (of course) necessary, but seems overkill for the problem I'm trying to solve. I need to know that the user is who they say they are, but I don't need to know what the user can do inside of their Google account.

I don't really want this data

Here's the default data I get with Login with Facebook after the user goes through a scary authorization page.

- id

- first_name

- last_name

- middle_name

- name

- name_format

- picture

- short_name

- email

I don't need that. Same with Google

- BasicProfile.getId()

- BasicProfile.getName()

- BasicProfile.getGivenName()

- BasicProfile.getFamilyName()

- BasicProfile.getImageUrl()

- BasicProfile.getEmail()

I'm not trying to say this is bad. These are great tools and I think the Google one especially is well made. I just don't want to prompt users to give me access to data if I don't want the data and I especially don't want the data if I have no idea if it's the data you intended to give me. Who hasn't hit the "Login with Facebook" button and wondered "what email is this company going to send to?" My Microsoft account is back from when I bought an Xbox OG. I have no idea where it sends messages now.
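For what "ask for as little as possible" might look like at the protocol level, here is a sketch of an OpenID Connect authorization request that asks only for the `openid` scope and uses PKCE. The endpoint, client ID and redirect URI are placeholders, and whether a given provider will actually return less profile data for a bare `openid` scope is provider-specific, so treat this as an illustration of the request shape rather than a guarantee.

```python
import base64
import hashlib
import secrets
from urllib.parse import parse_qs, urlencode, urlsplit

AUTHORIZATION_ENDPOINT = "https://idp.example.com/authorize"  # hypothetical provider
CLIENT_ID = "my-client-id"                                    # hypothetical
REDIRECT_URI = "https://app.example.com/callback"             # hypothetical


def make_pkce_pair() -> tuple:
    """Return (code_verifier, code_challenge) using the S256 method."""
    verifier = secrets.token_urlsafe(64)  # 86 characters, inside the 43-128 range
    digest = hashlib.sha256(verifier.encode("ascii")).digest()
    challenge = base64.urlsafe_b64encode(digest).rstrip(b"=").decode()
    return verifier, challenge


def build_authorization_url(state: str, nonce: str, code_challenge: str) -> str:
    params = {
        "response_type": "code",
        "client_id": CLIENT_ID,
        "redirect_uri": REDIRECT_URI,
        "scope": "openid",  # identity only: no "profile", no "email"
        "state": state,     # ties the callback to this browser session
        "nonce": nonce,     # echoed in the ID token to detect replay
        "code_challenge": code_challenge,
        "code_challenge_method": "S256",
    }
    return f"{AUTHORIZATION_ENDPOINT}?{urlencode(params)}"


verifier, challenge = make_pkce_pair()
url = build_authorization_url(secrets.token_urlsafe(16), secrets.token_urlsafe(16), challenge)
print(url)
print(parse_qs(urlsplit(url).query)["scope"])
```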

Fine, Magic Links

I don't know how to hash stuff correctly in such a way that I am confident I won't mess it up. Passkeys don't work yet. I can use OpenID Connect but really it is overkill for this use case since I don't want to operate as the user on the third-party and I don't want access to all the user's information since I intend to ask them how they want me to contact them. The remaining option is "magic links".

How do we set up magic links securely?

- Short lifespan for the password. The one-time password issued will be valid for 5 minutes before it expires

- The user's email is specified alongside login tokens to stop URLs being brute-forced

- Each login token will be at least 20 digits

- The initial request and its response must take place from the same IP address

- The initial request and its response must take place in the same browser

- Each one-time link can only be used once

- Only the last one-time link issued will be accepted. Once the latest one is issued, any others are invalidated.
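A few of the rules above (short lifespan, email bound to the token, single use, only the latest link valid) can be sketched in a handful of lines. This is an in-memory toy with invented names; a real version would need persistent storage, rate limiting and the actual email sending, and it skips the IP address and browser checks entirely.

```python
import hashlib
import hmac
import secrets

TOKEN_TTL_SECONDS = 300  # the 5 minute lifespan from the list above


def _hash(token: str) -> str:
    # Store only a hash so a leaked table does not leak usable links.
    return hashlib.sha256(token.encode()).hexdigest()


class MagicLinkStore:
    def __init__(self) -> None:
        self._pending = {}  # email -> (token_hash, expires_at)

    def issue(self, email: str, now: float) -> str:
        """Create a token for this email, replacing any earlier one."""
        token = secrets.token_urlsafe(32)
        self._pending[email] = (_hash(token), now + TOKEN_TTL_SECONDS)
        return token

    def redeem(self, email: str, token: str, now: float) -> bool:
        """Return True once for a matching, unexpired, latest token."""
        entry = self._pending.get(email)
        if entry is None:
            return False
        token_hash, expires_at = entry
        if not hmac.compare_digest(token_hash, _hash(token)):
            return False
        del self._pending[email]  # single use, even if it turns out expired
        return now < expires_at


store = MagicLinkStore()
first = store.issue("user@example.com", now=0)
second = store.issue("user@example.com", now=10)
print(store.redeem("user@example.com", first, now=20))   # older link was replaced
print(store.redeem("user@example.com", second, now=20))  # latest link works once
print(store.redeem("user@example.com", second, now=21))  # and only once
```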

The fundamental problem here is that email isn't a reliable system of delivery. It's a best-effort system. So if something goes wrong, takes a long time, etc, there isn't much I can really do to troubleshoot that. My advice to the user would be like "I guess you need to try a different email address".

So in order to do this for actual normal people to use, I have to turn off a lot of those security settings. I can't guarantee people don't sign up on their phones and then go to their laptops (so no IP address or browser check). I can't guarantee when they'll get the email (so no 5 minute check). I also don't know the order in which they're gonna get these emails, so it will be super frustrating for people if I send them 3 emails and the second one is actually the most "recent".

I also have no idea how secure this email account is. Effectively I'm just punting on security because it is hard and saying "well this is your problem now".

I could go on and on and on and on

I could write 20,000 words on this topic and still not be at the end. The word miserable barely does justice to how badly this stuff is designed for people to use. Complexity is an unavoidable side effect of flexibility in software. If your thing can do many things, it is harder to use.

We rely on expertise as a species to assist us with areas outside of our normal functions. I don't know anything about medicine, I go to a doctor. I have no idea how one drives a semi truck or flies a plane or digs a mine. Our ability to let people specialize is a key component to our ability to advance. So it is not reasonable to say "if you do anything with security at all you must become an expert in security".

Part of that is you need to use your skill and intelligence to push me along the right path. Don't say "this is the most recommended and this is less recommended and this one is third recommended". Show me what you want people to build and I bet most teams will jump at the chance to say "oh thank God, I can copy and paste a good example".

Corrections/notes/"I think you are stupid": https://c.im/@matdevdug