A climate change what-if

Over a few beers...

Growing up, I was obsessed with space. Like many tech workers, I consumed everything I could find about space and space travel. Young me was convinced we were on the verge of it, imagining I would live to see colonies on Mars and beyond. The first serious blow to my dreams of teleporters and warp travel came from the disappointing results of the SETI program. Where was everyone?

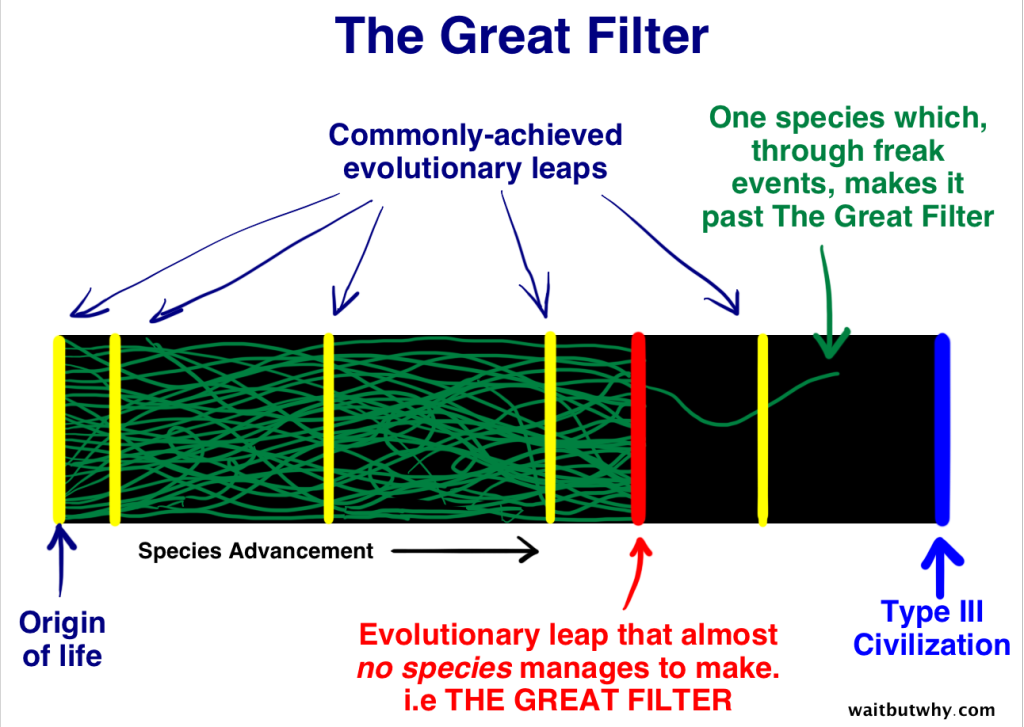

In college I was introduced to the Great Filter, which was basically a bucket of cold water dumped on my childhood obsession with the Drake equation.

When I heard about global warming, it felt like we had plenty of time. It was the '90s: anything was possible, and we still had time to get ahead of this. Certainly this wouldn't be the thing that did our global civilization in. We had so much warning! Fusion reactors were 5-7 years away, according to my Newsweek for kids in 1999. That all changed when I met someone who worked on wind farm design in Chicago and had spent years studying climate change.

She broke it down in a way I'll never forget. "People talk about global warming in terms of how it makes them feel. They'll discuss being nervous or unsure about it. We should talk about it like we talk about nuclear weapons. The ICBM is on the launch pad, it's fueled and going to take off. We probably can't stop it at this point, so we need to start planning for what life looks like afterwards."

The more I read about global warming, the more the scope of what we were facing sank in. Everything was going to change: religion, politics, economics and even the kinds of food we eat. For some odd reason, the one that stuck with me was saffron. The idea that my daughter might never get to try saffron was so strange. It exists; I can see it, buy it and describe what it tastes like. How could it, and so many other things, just disappear? Source

To be clear, I understand why people aren't asking the hard questions. There is no way we can start addressing a burning planet and the loss of so much plant and animal life without impacting the other parts of our lives. You cannot consume your way out of this problem; we have to accept immense hits to the global economy and the poverty and suffering that result. The scope of the change required is staggering. We've never done anything like this before.

However, let's take a step back. I don't want to focus on every element of this problem. What about just programming? What do this field and the infrastructure and community around it look like in a world being transformed as dramatically as it was when electric light was introduced?

In the park a few nights ago (drinking in public is legal in Denmark), two fellow tech workers and I discussed just that. Our backgrounds are different enough that I thought the conversation would interest the community at large and might spark some good discussion: the group was a Dane, a Hungarian and me, an American. We ended up debating for hours, which suggests to me that folks online might do the same.

The year is 2050

The year we targeted for these conversations was 2050. While we all acknowledged that some magical new technology might come along and solve our problems, it felt extremely unlikely. With China and India not yet even starting to draw down coal, it doesn't appear we'll be able to avoid the scenario being outlined. The ICBM is going to launch, as it were.

Language Choice

We started with a basic question: "What languages are people using in 2050 to do their jobs?" Some answers came pretty quickly, with all of us agreeing that C, C# and PHP were likely to survive to 2050. There are too many systems out there that rely on those languages, and we all felt that wide-scale replacement of existing systems was likely to slow or possibly stop by 2050. In a world dealing with food insecurity and constant power issues, the appetite to swap out existing working systems will, we suspect, be low.

These graphs and others like them suggest a very different workflow from today's. Due to heat and power issues, disruptions to home and office internet are likely to be much more common. As flooding and sea-level rise disrupt commuting, working asynchronously is going to be the new norm. So we think the priorities in 2050 might look like this:

- dependency management has to be easy and ideally not something you need to touch a lot, with caching similar to pip.

- reproducibility without bandwidth-heavy technology like containers will be a high priority as we shift away from remote testing and go back to working primarily on local machines. Alternatively, you could be more careful about container size and reuse layers between services.

- test coverage will be more important than ever, as it becomes less likely that you can simply ping someone to ask what they meant or how something is supposed to work. TDD will likely be the only way the person deploying the software knows whether it is ready to go.
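To make the "reproducible without heavy bandwidth" priority a little more concrete, here is a minimal Python sketch, assuming dependencies were already downloaded into a local cache directory and their expected SHA-256 hashes were pinned while the network was still available. The `verify_cache` and `sha256_of` helpers and the artifact names are hypothetical, not part of any packaging tool; the point is that the check runs entirely offline.

```python
import hashlib
from pathlib import Path
from typing import Dict, List


def sha256_of(path: Path) -> str:
    """Hash a file in chunks so large artifacts don't need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_cache(cache_dir: Path, pinned: Dict[str, str]) -> List[str]:
    """Report pinned artifacts that are missing or whose hash differs.

    Nothing is fetched: only files already in cache_dir are read.
    """
    problems = []
    for name, expected in sorted(pinned.items()):
        artifact = cache_dir / name
        if not artifact.is_file():
            problems.append(f"missing: {name}")
        elif sha256_of(artifact) != expected:
            problems.append(f"hash mismatch: {name}")
    return problems
```

An empty result means the local cache matches what was pinned. pip's hash-checking mode (`pip install --require-hashes`) applies the same idea to requirements files, and `pip download` followed by `pip install --no-index --find-links <dir>` is one existing way to install from a pre-filled local directory.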

Winners

Based on this, I felt some of the big winners in 2050 would be Rust, Clojure and Go. They mostly meet these criteria and don't rely on containers or similar technology for basic testing and feature implementation. They are also (reasonably) tolerant of spotty internet, presuming the environment has been set up ahead of time. TypeScript also seemed like a clear winner, both because of the current enthusiasm for it and because of the capability it adds to JavaScript development.

There was a bit of disagreement about whether languages like Java would thrive or decline. We went back and forth, pointing to its stability and well-understood runtime behavior. I felt that in a world where servers wouldn't keep getting faster and you couldn't just throw more resources at a problem, Clojure or something like it would be a better horse. We did agree that ecosystems with lots of constantly updated packages, like Node with NPM, would be a real logistical struggle.

This all applies to new applications, which we all agreed probably won't be what most people are working on. In a world flooded with software, just keeping the existing stuff working will consume a lot of the available talent. I suspect folks won't have "one or two" languages, but will work wherever the contracts are.

Financial Support

I think we're going to see the field of actively maintained programming languages shrink. If we're all struggling to pay our rent and fill our cabinets with food, we aren't going to have a ton of time to donate to extremely demanding after-hours volunteer jobs, which is effectively what maintaining most programming languages is. Critical languages, or languages which give certain companies advantages, will likely have actual employees allocated to their maintenance, while others will languish.

While the above isn't the most scientific study, it does suggest that the companies who are "good" OSS citizens will be able to greatly steer the direction of which languages succeed or fail. While I'm not suggesting anyone is going to lock these languages down, their employees will be the ones actually doing the work and will be able to make substantive decisions about their future.

Infrastructure

In a 2016 paper in Global Environmental Change, Becker and four colleagues concluded that raising 221 of the world’s most active seaports by 2 meters (6.5 feet) would require 436 million cubic meters of construction materials, an amount large enough to create global shortages of some commodities. The estimated amount of cement — 49 million metric tons — alone would cost $60 billion in 2022 dollars. Another study that Becker co-authored in 2017 found that elevating the infrastructure of the 100 biggest U.S. seaports by 2 meters would cost $57 billion to $78 billion in 2012 dollars (equivalent to $69 billion to $103 billion in current dollars), and would require “704 million cubic meters of dredged fill … four times more than all material dredged by the Army Corps of Engineers in 2012.”

“We’re a rich country,” Becker said, “and we’re not going to have nearly enough resources to make all the required investments. So among ports there’s going to be winners and losers. I don’t know that we’re well-equipped for that.”

Running a datacenter requires a lot of parts. You need replacement parts for a variety of machines from different manufacturers and eras, the ability to get new parts quickly and a lot of wires, connectors and adapters. As the world is rocked by a new natural disaster every day and seaports around the world become unusable, it will simply be impossible to run a datacenter without a considerable logistics arm. It will not be possible to call up a supplier and have a new part delivered in hours or even days.

It isn't just the servers. The availability of data centers depends on the reliability and maintainability of many sub-systems (power supply, HVAC, safety systems, communications, etc.). We currently live in a world where many cloud services have SLAs with numbers like 99.999% availability, along with heavy penalties for failing to reach the promised availability. For the datacenters themselves, we have standards like TIA-942 and GR-3160 which determine what a correctly run datacenter looks like and how we define reliable.
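For a sense of scale, an availability percentage translates directly into a yearly downtime budget. A minimal Python sketch, assuming an average 365.25-day year; the helper name is illustrative:

```python
# Average year length in minutes, counting leap years (365.25 days).
MINUTES_PER_YEAR = 365.25 * 24 * 60


def downtime_budget_minutes(availability_percent: float) -> float:
    """Minutes of downtime per year permitted by an availability target."""
    return MINUTES_PER_YEAR * (1 - availability_percent / 100)


for target in (99.0, 99.9, 99.99, 99.999):
    print(f"{target}% -> {downtime_budget_minutes(target):.1f} min/year")
```

Five nines works out to roughly 5.3 minutes of downtime a year, which is the entire margin a provider has for floods, fires and parts shortages.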

COVID-related factory shutdowns in Vietnam and other key production hubs made it difficult to acquire fans, circuit breakers and IGBTs (semiconductors for power switching), according to Johnson. In some cases, the best option was to look outside of the usual network of suppliers and be open to paying higher prices.

“We’ve experienced some commodity and freight inflation,” said Johnson. “We made spot buys, so that we can meet the delivery commitments we’ve made to our customers, and we have implemented strategies to recapture those costs.”

But there are limits, said Johnson.

“The market is tight for everybody,” said Johnson. “There’s been instances where somebody had to have something, and maybe a competitor willing to jump the line and knock another customer out. We’ve been very honorable with what we’ve told our customers, and we’ve not played that game.” Source

We already know what kills datacenters now and the list doesn't look promising for the world of 2050.

A 2013 report from the Ponemon Institute lists the top root causes of data center failures: UPS system failure; accidental/human error; cybercrime; weather-related events; water, heat or CRAC failure; generator failure; and IT equipment failure.

To keep a service up and running, you are going to need to keep more parts in stock, standardize machine models and expect to deal with many more power outages. This will put additional strain on diesel backup generators, which today have a failure rate of 14.09 failures per million hours (IEEE Std 493, the Gold Book) and an average repair time of 1257 hours. In a world where parts take weeks or months to arrive, those repair times could look very different.
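One way to see why repair time matters is the standard steady-state availability formula for a repairable unit with a constant failure rate, A = MTBF / (MTBF + MTTR). The Python sketch below plugs in the generator figures quoted above. The 2x and 4x repair slowdowns are hypothetical, and it models a single generator with no redundancy.

```python
HOURS_PER_YEAR = 365.25 * 24  # average year, counting leap years


def steady_state_availability(failures_per_million_hours: float, mttr_hours: float) -> float:
    """A = MTBF / (MTBF + MTTR), assuming a constant failure rate."""
    mtbf_hours = 1_000_000 / failures_per_million_hours
    return mtbf_hours / (mtbf_hours + mttr_hours)


FAILURE_RATE = 14.09  # failures per million hours, figure quoted above
BASELINE_MTTR = 1257  # mean repair time in hours, figure quoted above

# Hypothetical: repairs take 2x or 4x longer when parts are scarce.
for slowdown in (1, 2, 4):
    a = steady_state_availability(FAILURE_RATE, BASELINE_MTTR * slowdown)
    hours_down = (1 - a) * HOURS_PER_YEAR
    print(f"repair x{slowdown}: availability {a:.4f}, ~{hours_down:.0f} h/year not ready")
```

With the quoted figures, a single generator comes out at roughly 98.3% availability, about 150 hours a year when it isn't ready to run; doubling the repair time roughly doubles that gap.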

So I would expect the cloud to be the only realistic way to run anything, but I would also expect just keeping basic cloud services up and running to be a massive effort for whoever owns the datacenters. Networking is going to be a real struggle as floods, fires and power outages make the internet more brittle than it has been since it became widely adopted. Prices will rise because providers' costs will rise.

Expectations

- You will need to be multi-AZ and expect to cut over often.

- Prices will be insane compared to now.

- Expect CPU power, memory, storage, etc. to mostly stay static or drop over time as the focus shifts to just keeping the lights on, as it were.

- Downtime will become the new normal. We simply aren't going to be able to weather this level of global disruption with anything close to the level of average performance and uptime we have now.

- Costs for the uptime we take for granted now won't be a little higher; they'll be exponentially higher, even adjusted for inflation. I suspect contractual uptime will go from being "mostly an afterthought" to an extremely expensive conversation taking place at the highest levels between customer and supplier.

- Out-of-the-box managed services like Lambda, Fargate, Lightsail, App Engine and the like will be the default, since the cloud provider manages which servers are up and running.
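A rough way to reason about the multi-AZ expectation: if zones failed independently, the chance that at least one is up is 1 - (1 - a)^n. The Python sketch below computes that and shows a naive cutover that picks the first healthy zone in preference order. The zone names and the health-check callable are hypothetical, and the independence assumption is exactly what a regional flood, fire or grid failure would undermine.

```python
from typing import Callable, Optional, Sequence


def combined_availability(zone_availability: float, zones: int) -> float:
    """Probability that at least one of `zones` zones is up.

    Assumes zone failures are independent and cutover is instant;
    correlated failures (one disaster taking out a region) break this.
    """
    return 1 - (1 - zone_availability) ** zones


def pick_zone(zones: Sequence[str], is_healthy: Callable[[str], bool]) -> Optional[str]:
    """Return the first zone, in preference order, that passes its health check."""
    for zone in zones:
        if is_healthy(zone):
            return zone
    return None  # nothing healthy: time for the degraded/offline mode


# Hypothetical: two zones at 99% each look like ~99.99% on paper.
print(combined_availability(0.99, 2))
```

The on-paper gain from adding zones is large, which is why cutting over often is attractive; the catch is that climate-driven outages tend to be correlated across nearby zones.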

For a long time we treated uptime as a free benefit of modern infrastructure. I suspect the conversation around uptime will soon be very different, as it becomes much harder to reliably have staff around the world get online and fix a problem within minutes.

Mobile

By 2050, under an RCP 8.5 scenario, the number of people living in areas with a nonzero chance of lethal heat waves would rise from zero today to between 700 million and 1.2 billion (not factoring in air conditioner penetration). Urban areas in India and Pakistan may be the first places in the world to experience such lethal heatwaves (Exhibit 6). For the people living in these regions, the average annual likelihood of experiencing such a heat wave is projected to rise to 14 percent by 2050. The average share of effective annual outdoor working hours lost due to extreme heat in exposed regions globally could increase from 10 percent today to 10 to 15 percent by 2030 and 15 to 20 percent by 2050.

We are going to live in a world where the current refugee crisis is completely eclipsed. Hundreds of millions of people are going to be roving around the world trying to escape extreme weather. For many of them, we suspect mobile devices will be the only way to interact with government, social services, family and friends. Mobile networks will be under extreme strain, as even well-stocked telecoms struggle with floods of users attempting to escape the heat and weather disasters.

Mobile telecommunications, already essential to life in the smartphone era, is going to be as critical as power or water, not just for users but for the many ICT devices embedded in the world around us. 5G has already greatly increased our ability to serve many users, with some of that work happening in the transition from FDD to TDD (explanation). So in many ways we're already preparing for a much higher level of dependence on mobile networks through the migration to 5G. Breaking the service into "network slices" allows effective abstraction away from the physical hardware, but obviously doesn't eliminate the physical element.

Mobile in 2050 Predictions

- Phones are going to be in service for a lot longer. We all felt that both Android, with its aggressive backwards compatibility, and Apple, with its long support for software updates, were already laying the groundwork for this.

- Even among users who have the financial means to buy a new phone, it might be difficult for companies to manage their logistics chain. Expect long waits for new devices unless there is domestic production of the phone in question.

- The trick to hardware launches and maintenance is going to be securing multiple independent supply chains, and less about innovation.

- For many people, mobile will be the only computing they have access to, and they'll need to be more aware of data pricing. There's no reason to think telecoms won't take advantage of their position to increase data prices under the guise of tower maintenance.

Products like smartphones possess hundreds of components whose raw materials are transported from all over the world; the cumulative mileage traveled by all those parts would “probably reach to the moon,” Mims said. These supply chains are so complicated and opaque that smartphone manufacturers don’t even know the identity of all their suppliers — getting all of them to adapt to climate change would mark a colossal achievement. Yet each node is a point of vulnerability whose breakdown could send damaging ripples up and down the chain and beyond it. source

Developers will be expected to make applications that can go longer between updates, tolerate network disruptions better and in general depend less on server-side components. Games and entertainment will be more important as people attempt to escape this incredibly difficult century of human life. Mobile platforms will likely have backdoors forced in by various governments, and any concept of truly encrypted communication seems unlikely.
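Tolerating network disruption usually starts with retrying failed requests without hammering an already struggling network. A minimal Python sketch of capped exponential backoff with "full jitter", assuming the network call signals failure by raising `ConnectionError`; the function and parameter names are illustrative, not from any particular library.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    operation: Callable[[], T],
    attempts: int = 5,
    base_delay: float = 0.5,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `operation`, retrying on ConnectionError with capped, jittered backoff."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts - 1):
        try:
            return operation()
        except ConnectionError:
            # Full jitter: wait a random time between 0 and the capped exponential delay,
            # so many clients recovering at once don't retry in lockstep.
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, delay))
    # Final attempt: any error propagates so the caller can fall back to cached data.
    return operation()
```

Injecting `sleep` keeps the helper testable and lets an app swap in a scheduler instead of blocking. Once the last attempt fails, the app should fall back to whatever it already has locally rather than show an error screen.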

It is my belief (not the belief of the group) that this will strongly encourage asynchronous communication. Email seems like the perfect tool to me for writing responses and sending attachments in a world with shaky internet. It also isn't encrypted and can be run on very old hardware for a long time. The other folks felt this was a bit delusional and that all messaging would happen inside siloed applications, since it would be easier to add offline sync to already popular applications.
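Whether the channel ends up being email or a siloed app, the offline behavior both sides described boils down to store-and-forward: accept the message locally, deliver it when the network comes back. A minimal in-memory Python sketch; the `Outbox` class and the `send` callable are hypothetical, and a real client would persist the queue to disk.

```python
from collections import deque
from typing import Callable, Deque


class Outbox:
    """Queue outgoing messages locally and flush them when the network allows."""

    def __init__(self, send: Callable[[str], None]) -> None:
        self._send = send  # transport; assumed to raise ConnectionError when offline
        self._pending: Deque[str] = deque()

    def post(self, message: str) -> None:
        """Accept the message immediately; delivery happens on a later flush."""
        self._pending.append(message)

    def flush(self) -> int:
        """Send queued messages in order, stopping at the first failure.

        Returns how many messages were sent during this flush.
        """
        sent = 0
        while self._pending:
            try:
                self._send(self._pending[0])
            except ConnectionError:
                break  # still offline: keep the rest, order preserved
            self._pending.popleft()
            sent += 1
        return sent

    def __len__(self) -> int:
        return len(self._pending)
```

Because a message is only removed after `send` returns, a send whose acknowledgement gets lost will be retried, so delivery is at-least-once and the receiving side should deduplicate.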

Work

Hey, Kiana,

Just wanted to follow up on my note from a few days ago in case it got buried under all of those e-mails about the flood. I’m concerned about how the Eastern Seaboard being swallowed by the Atlantic Ocean is going to affect our Q4 numbers, so I’d like to get your eyes on the latest earnings figures ASAP. On the bright side, the Asheville branch is just a five-minute drive from the beach now, so the all-hands meeting should be a lot more fun this year. Silver linings!

Regards,

Kevin

Offices in 2050 will have been a thing of the past for programmers for a while. It isn't going to make sense to force people to commute to a single location, not out of love for employees but as a way to decentralize the business's risk from fires, floods and famines. Programmers, like everyone, will be focused on ensuring their families have enough to eat and somewhere safe to sleep.

Open Source Survival?

Every job I've ever had has relied on a large collection of Open Source software, whose survival depends on a small number of programmers having the free time to maintain it and add features. We all worried about who, in the face of reduced free time and increased economic pressure, was going to put in the many hours of unpaid labor needed to maintain this absolutely essential software. This is true for Linux, programming languages, etc.

To be clear, this isn't just a problem for 2050; it is a problem today. In a world where programmers need to work harder just to provide for their families and face the same intense pressure as everyone else, it seems unreasonable to expect them to keep donating that time. My hope is that we figure out an easy way to financially support OSS developers, but I suspect it won't be a top priority.

Stability

We're all worried about what happens to programming as a realistic career when we hit an actual economic collapse, not just a recession. As stated above, it seems reasonable to predict that just keeping the massive amount of software in the world running will employ a fair number of us. This isn't just a programming issue, but I would expect full-time roles to be few and far between. There will be a lot of bullshit contracting and temp gigs.

The group felt that automation would likely have gotten rid of most ordinary jobs by 2050 and that we would be looking at a world where wage labor wasn't the norm anymore. I think that's optimistic and underestimates how flexible a human worker is. You can pull someone aside and effectively retrain them for a lot of tasks in less than 100 hours. Robots cannot do that, and changing their position or function even slightly requires a ton of expensive work.

Daily Work

Expect a lot more work that involves less minute-to-minute communication with other developers: open a PR, discuss the PR, show the test results and then merge the branch, without requiring teams to share a time zone. Full-time employees will serve mostly as reviewers, ensuring the work they receive is up to spec and meets standards. Corporate loyalty and perks will be gone; you'll just swap freelance gigs whenever you need to or someone offers more money. It wouldn't surprise me if serious security problems became more common as OSS frameworks and packages go longer and longer without maintenance. If nothing else, that should provide a steady stream of work.

AI?

I don't think it's gonna happen. Maybe after this version of civilization burns to the ground and we make another one.

Conclusion

Ideally this sparks some good conversations. I don't have any magic solutions here, but I hope you don't leave this completely without hope. I believe we will survive and that the human race can use this opportunity to fundamentally redefine our relationship to concepts like "work" and economic opportunity. But blind hope doesn't count for much.

For maintainers looking to future-proof their OSS or software platforms, start planning for ease of maintenance and deployment. Start discussing, "What happens to our development teams if they can't get reliable internet for a day, a week, a month?" It is great to deploy an application 100 times a day, but how long can it sit unattended? How does on-call work?

A whole lot is going to change in every element of life and our little part is no different. The market isn't going to save us, a startup isn't going to fix everything and capture all the carbon.