IP addresses have been in the news a lot lately and not for good reasons. AWS has announced they are charging $.005 per IPv4 address per hour, joining other cloud providers in charging for the luxury of a public IPv4 address. GCP charges $.004, as does Azure, and Hetzner charges €0.001/h. Clearly the era of cloud providers going out and purchasing more IPv4 space is coming to an end. As time goes on, the addresses are just more valuable and it makes less sense to give them out for free.

So the writing is on the wall. We need to switch to IPv6. Now I was first told that we were going to need to switch to IPv6 when I was in high school in my first Cisco class and I'm 36 now, to give you some perspective on how long this has been "coming down the pipe". Up to this point I haven't done much at all with IPv6. There has been almost no market demand for those skills and I've never had a job where anybody seemed all that interested in doing it. So I skipped learning about it, which is a shame because it's actually a great advancement in networking.

Now is the second best time to learn though, so I decided to migrate this blog to IPv6 only. We'll stick it behind a CDN to handle the IPv4 traffic, but let's join the cool kids club. What I found was horrifying: almost nothing works out of the box. Major dependencies cease functioning right away and workarounds cannot be described as production ready. The migration process for teams to IPv6 is going to be very rocky, mostly because almost nobody has done the work. We all skipped it for years and now we'll need to pay the price.

Why is IPv6 worth the work?

I'm not gonna do a thing about what is IPv4 vs IPv6. There are plenty of great articles on the internet about that. Let's just quickly recap though "why would anyone want to make the jump to IPv6".

- Address space (obviously)

- Smaller number of header fields (8 vs 13 on v4)

- Faster processing: No more checksum, so routers don't have to do a recalculation for every packet.

- Faster routing: More summary routes and hierarchical routes. (Don't know what that is? No stress. Summary route = combining multiple IPs so you don't need all the addresses, just the general direction based on the first part of the address. Ditto with routes, since IPv6 is globally unique you can have small and efficient backbone routing.)

- QoS: Traffic Class and Flow Label fields make QoS easier.

- Auto-addressing. This allows IPv6 hosts on a LAN to connect without a router or DHCP server.

- You can add IPsec to IPv6 with the Authentication Header and Encapsulating Security Payload.

Finally the biggest one: because IPv6 addresses are free and IPv4 ones are not.

Setting up an IPv6-Only Server

The actual setup process was simple. I provisioned a Debian box and selected "IPv6". Then I got my first surprise. My box didn't get an IPv6 address. I was given a /64 of addresses, which is 18,446,744,073,709,551,616. It is good to know that my small ARM server could scale to run all the network infrastructure for every company I've ever worked for on all public addresses.
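If you want to check that number yourself, Python's standard `ipaddress` module will do the arithmetic. This is only a quick sketch; the prefix is the example one used later in this post, picked purely for illustration, and `addresses_in` is a helper name I made up.

```python
import ipaddress


def addresses_in(prefix: str) -> int:
    """Count the addresses covered by a CIDR prefix (IPv4 or IPv6)."""
    return ipaddress.ip_network(prefix).num_addresses


# One /64 versus the whole IPv4 address space.
single_subnet = addresses_in("2402:9400:10::/64")
entire_ipv4_internet = addresses_in("0.0.0.0/0")

print(f"{single_subnet:,}")                    # 18,446,744,073,709,551,616
print(single_subnet // entire_ipv4_internet)   # 4294967296
```

So a single /64 holds as many addresses as 2^32 copies of the entire IPv4 internet.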

Now this sounds wasteful but when you look at how IPv6 works, it really isn't. Since IPv6 is much less "chatty" than IPv4, even if I had 10,000 hosts on this network it doesn't matter. As discussed here it actually makes sense to keep all the IPv6 space, even if at first it comes across as insanely wasteful. So just don't think about how many addresses are getting sent to each device.

Important: resist the urge to optimize address utilization. Talking to more experienced networking folks, this seems to be a common trap people fall into. We've all spent so much time worrying about how much space we have remaining in an IPv4 block and designing around that problem. That issue doesn't exist anymore. A /64 prefix is the smallest you should configure on an interface.

Attempting to stick a longer prefix (that is, a smaller subnet) on an interface, like a /68 or a /96, which is something I've heard people try, can break stateless address auto-configuration. Your mentality should be a /48 per site. That's what the Regional Internet Registries hand out when allocating IPv6. When thinking about network organization, you need to think about the nibble boundary. (I know, it sounds like I'm making shit up now). A nibble is 4 bits, or one hex digit, so keeping prefix lengths at multiples of 4 means every subnet lines up with whole hex digits. It's basically a way to make IPv6 easier to read.

Let's say you have 2402:9400:10::/48. You would divide it up as follows if you wanted only /64 for each box as a flat network.

| Subnet # | Subnet Address |
|---|---|
| 0 | 2402:9400:10::/64 |
| 1 | 2402:9400:10:1::/64 |
| 2 | 2402:9400:10:2::/64 |
| 3 | 2402:9400:10:3::/64 |
| 4 | 2402:9400:10:4::/64 |
| 5 | 2402:9400:10:5::/64 |

A /52 works a similar way.

| Subnet # | Subnet Address |
|---|---|
| 0 | 2402:9400:10::/52 |
| 1 | 2402:9400:10:1000::/52 |
| 2 | 2402:9400:10:2000::/52 |
| 3 | 2402:9400:10:3000::/52 |
| 4 | 2402:9400:10:4000::/52 |
| 5 | 2402:9400:10:5000::/52 |

You can still at a glance know which subnet you are looking at.
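The tables above can be reproduced with Python's standard `ipaddress` module. This is a small sketch rather than a planning tool: `first_subnets` and `on_nibble_boundary` are names I made up, and the /50 case is only there to show what happens when you leave the nibble boundary.

```python
import ipaddress
from itertools import islice


def first_subnets(site: str, new_prefix: int, count: int) -> list[str]:
    """Return the first `count` subnets of `site` at the given prefix length."""
    network = ipaddress.ip_network(site)
    return [str(s) for s in islice(network.subnets(new_prefix=new_prefix), count)]


def on_nibble_boundary(prefix_length: int) -> bool:
    """A nibble is 4 bits (one hex digit), so aligned prefixes are multiples of 4."""
    return prefix_length % 4 == 0


SITE = "2402:9400:10::/48"
for length in (64, 52, 50):
    print(length, on_nibble_boundary(length), first_subnets(SITE, length, 3))
```

With /64 and /52 each subnet changes exactly one hex digit. With /50 the subnets start at `::`, `4000::` and `8000::`, so the boundary falls in the middle of a hex digit and you can no longer read the subnet number straight off the address.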

Alright I've got my box ready to go. Let's try to set it up like a normal server.

Problem 1 - I can't SSH in

This was a predictable problem. Neither my work nor home ISP supports IPv6. So it's great that I have this box set up, but now I can't really do anything with it. Fine, I attach an IPv4 address for now, SSH in and I'll set up cloudflared to run a tunnel. Presumably they'll handle the conversion on their side.

Except that isn't how Cloudflare rolls. Imagine my surprise when the tunnel collapses when I remove the IPv4 address. By default the cloudflared utility assumes IPv4 and you need to go in and edit the systemd service file to add: --edge-ip-version 6. After this, the tunnel is up and I'm able to SSH in.

Problem 2 - I can't use GitHub

Alright so I'm on the box. Now it's time to start setting up stuff. I run my server setup script and it immediately fails. It's trying to access the installation script for hishtory, a great shell history utility I use on all my personal stuff. It's trying to pull the install file from GitHub and failing. "Certainly that can't be right. GitHub must support IPv6?"

Nope. Alright fine, seems REALLY bad that the service the entire internet uses to release software doesn't work with IPv6, but you know Microsoft is broke and also only cares about fake AI now, so whatever. I ended up using the TransIP Github Proxy which worked fine. Now I have access to GitHub. But then Python fails with urllib.error.URLError: <urlopen error [Errno 101] Network is unreachable>. Alright I give up on this. My guess is the version of Python 3 in Debian doesn't like IPv6, but I'm not in the mood to troubleshoot it right now.
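A quick way to tell ahead of time whether a dependency is going to blow up like this is to check which address families its hostname resolves to. Here is a minimal sketch using only the standard `socket` module. The function names are my own, and the `resolver` parameter exists only so the lookup can be swapped out for testing. One caveat: if the box already uses a DNS64 resolver, it will report synthesized IPv6 addresses even for IPv4-only hosts.

```python
import socket


def address_families(host, port=443, resolver=socket.getaddrinfo):
    """Return the set of address families that `host` resolves to."""
    try:
        infos = resolver(host, port, proto=socket.IPPROTO_TCP)
    except socket.gaierror:
        # Name did not resolve at all.
        return set()
    # Each getaddrinfo entry is (family, type, proto, canonname, sockaddr).
    return {info[0] for info in infos}


def reachable_without_ipv4(host, **kwargs):
    """True if the host publishes at least one IPv6 address."""
    return socket.AF_INET6 in address_families(host, **kwargs)
```

Running `reachable_without_ipv4("github.com")` from an IPv6-only box before kicking off a setup script would at least turn a confusing mid-install failure into an obvious one.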

Problem 3 - Can't set up Datadog

Let's do something more basic. Certainly I can set up Datadog to keep an eye on this box. I don't need a lot of metrics, just a few historical load numbers. Go to Datadog, log in and start to walk through the process. Immediately collapses. The simple setup has you run curl -L https://s3.amazonaws.com/dd-agent/scripts/install_script_agent7.sh. Now S3 supports IPv6, so what the fuck?

curl -v https://s3.amazonaws.com/dd-agent/scripts/install_script_agent7.sh

* Trying [64:ff9b::34d9:8430]:443...

* Trying 52.216.133.245:443...

* Immediate connect fail for 52.216.133.245: Network is unreachable

* Trying 54.231.138.48:443...

* Immediate connect fail for 54.231.138.48: Network is unreachable

* Trying 52.217.96.222:443...

* Immediate connect fail for 52.217.96.222: Network is unreachable

* Trying 52.216.152.62:443...

* Immediate connect fail for 52.216.152.62: Network is unreachable

* Trying 54.231.229.16:443...

* Immediate connect fail for 54.231.229.16: Network is unreachable

* Trying 52.216.210.200:443...

* Immediate connect fail for 52.216.210.200: Network is unreachable

* Trying 52.217.89.94:443...

* Immediate connect fail for 52.217.89.94: Network is unreachable

* Trying 52.216.205.173:443...

* Immediate connect fail for 52.216.205.173: Network is unreachable

It's not S3 or the box, because I can connect to the test S3 bucket AWS provides just fine.

curl -v http://s3.dualstack.us-west-2.amazonaws.com/

* Trying [2600:1fa0:40bf:a809:345c:d3f8::]:80...

* Connected to s3.dualstack.us-west-2.amazonaws.com (2600:1fa0:40bf:a809:345c:d3f8::) port 80 (#0)

> GET / HTTP/1.1

> Host: s3.dualstack.us-west-2.amazonaws.com

> User-Agent: curl/7.88.1

> Accept: */*

>

< HTTP/1.1 307 Temporary Redirect

< x-amz-id-2: r1WAG/NYpaggrPl3Oja4SG1CrcBZ+1RIpYKivAiIhiICtfwiItTgLfm6McPXXJpKWeM848YWvOQ=

< x-amz-request-id: BPCVA8T6SZMTB3EF

< Date: Tue, 01 Aug 2023 10:31:27 GMT

< Location: https://aws.amazon.com/s3/

< Server: AmazonS3

< Content-Length: 0

<

* Connection #0 to host s3.dualstack.us-west-2.amazonaws.com left intact

Fine, I'll do it the manual way through apt.

0% [Connecting to apt.datadoghq.com (18.66.192.22)]

Goddamnit. Alright Datadog is out. It's at this point I realize the experiment of trying to go IPv6 only isn't going to work. Almost nothing seems to work right without proxies and hacks. I'll try to stick as much as I can on IPv6 but going exclusive isn't an option at this point.

NAT64

So in order to access IPv4 resources from IPv6 you need to go through a NAT64 service. I ended up using this one: https://nat64.net/. Immediately all my problems stopped and I was able to access resources normally. I am a little nervous about relying exclusively on what appears to be a hobby project for accessing critical internet resources, but since nobody seems to care upstream of me about IPv6 I don't think I have a lot of choice.
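If you are wondering what NAT64 and DNS64 actually do to an address, the trick is that the 32-bit IPv4 address gets embedded in the low bits of an IPv6 prefix. The sketch below uses the well-known prefix `64:ff9b::/96` from RFC 6052, which is the shape of the `64:ff9b::34d9:8430` address in the curl output earlier. Public services can use their own network-specific prefix instead, so the prefix is a parameter here, and the function names are my own invention. Only /96 prefixes are handled.

```python
import ipaddress

# RFC 6052 well-known prefix; a NAT64 provider may use a different /96.
WELL_KNOWN_PREFIX = ipaddress.ip_network("64:ff9b::/96")


def synthesize(ipv4: str, prefix=WELL_KNOWN_PREFIX) -> ipaddress.IPv6Address:
    """Embed an IPv4 address in the last 32 bits of a /96 NAT64 prefix."""
    if prefix.prefixlen != 96:
        raise ValueError("this sketch only handles /96 prefixes")
    v4 = ipaddress.IPv4Address(ipv4)
    return ipaddress.IPv6Address(int(prefix.network_address) | int(v4))


def extract(ipv6: str, prefix=WELL_KNOWN_PREFIX) -> ipaddress.IPv4Address:
    """Recover the IPv4 address from a synthesized NAT64 address."""
    v6 = ipaddress.IPv6Address(ipv6)
    if v6 not in prefix:
        raise ValueError(f"{v6} is not inside {prefix}")
    return ipaddress.IPv4Address(int(v6) & 0xFFFFFFFF)


print(synthesize("52.217.132.48"))    # 64:ff9b::34d9:8430
print(extract("64:ff9b::34d9:8430"))  # 52.217.132.48
```

The DNS64 resolver hands out the synthesized address when a name has no real AAAA record, and the NAT64 gateway that owns the prefix translates the packets back to IPv4 on the way out.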

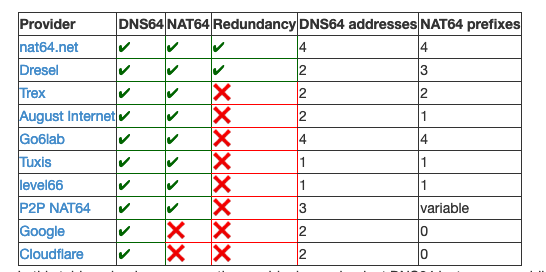

I am surprised there aren't more of these. This is the best list I was able to find:

Most of them seem to be gone now. Dresel's link doesn't work, Trex in my testing had problems, August Internet is gone, most of the Go6lab test devices are down, Tuxis worked but they launched the service in 2019 and seem to have no further interaction with it. Basically Kasper Dupont seems to be the only person on the internet with any sort of widespread interest in allowing IPv6 to actually work. Props to you Kasper.

Basically one person props up this entire part of the internet.

Kasper Dupont

So I was curious about Kasper and emailed him to ask a few questions. You can see that back and forth below.

Me: I found the Public NAT64 service super useful in the transition but would love to know a little bit more about why you do it.

Kasper: I do it primarily because I want to push IPv6 forward. For a few years I had the opportunity to have a native IPv6-only network at home with DNS64+NAT64, and I found that to be a pleasant experience which I wanted to give more people a chance to experience.

When I brought up the first NAT64 gateway it was just a proof of concept of a NAT64 extension I wanted to push. The NAT64 service took off, the extension - not so much.

A few months ago I finally got native IPv6 at my current home, so now I can use my own service in a fashion which much more resembles how my target users would use it.

Me: You seem to be one of the few remaining free public services like this on the internet and would love to know a bit more about what motivated you to do it, how much it costs to run, anything you would feel comfortable sharing.

Kasper: For my personal products I have a total of 7 VMs across different hosting providers. Some of them I purchase from Hetzner at 4.51 Euro per month: https://hetzner.cloud/?ref=fFum6YUDlpJz

The other VMs are a bit more expensive, but not a lot.

Out of those VMs the 4 are used for the NAT64 service and the others are used for other IPv6 transition related services. For example I also run this service on a single VM: http://v4-frontend.netiter.com/

I hope to eventually make arrangements with transit providers which will allow me to grow the capacity of the service and make it profitable such that I can work on IPv6 full time rather than as a side gig. The ideal outcome of that would be that IPv4-only content providers pay the cost through their transit bandwidth payments.

Me: Any technical details you would like to mention would also be great

Kasper: That's my kind of audience :-) I can get really really technical.

I think what primarily sets my service aside from other services is that each of my DNS64 servers is automatically updated with NAT64 prefixes based on health checks of all the gateways. That means the outage of any single NAT64 gateway will be mostly invisible to users. This also helps with maintenance. I think that makes my NAT64 service the one with the highest availability among the public NAT64 services.

The NAT64 code is developed entirely by myself and currently runs as a user mode daemon on Linux. I am considering porting the most performance critical part to a kernel module.

This site

Alright so I got the basics up and running. In order to pull docker containers over IPv6 you need to add: registry.ipv6.docker.com/library/ to the front of the image name. So for instance: image: mysql:8.0 becomes image: registry.ipv6.docker.com/library/mysql:8.0
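If you have a pile of compose files to convert, the rename is easy to script. This is a rough sketch: `ipv6_image` is a helper name I made up, and it only covers the official-image case described above. Anything with a slash in it (namespaced images, other registries) is passed through untouched, because I haven't tested how those should be mapped.

```python
IPV6_REGISTRY = "registry.ipv6.docker.com"


def ipv6_image(image: str) -> str:
    """Rewrite an official Docker Hub image name to pull via the IPv6 registry."""
    if "/" in image:
        # Namespaced or third-party registry images are left alone;
        # mapping those is an open question this sketch does not answer.
        return image
    return f"{IPV6_REGISTRY}/library/{image}"


print(ipv6_image("mysql:8.0"))  # registry.ipv6.docker.com/library/mysql:8.0
```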

Docker warns you this setup isn't production ready. I'm not really sure what that means for Docker. Presumably if it were to stop you should be able to just pull normally?

Once that was done, we set up the site as an AAAA DNS record and allowed Cloudflare to proxy, meaning they handle the advertisement of IPv6 and bring the traffic to me. One thing I did change: I was previously using the Caddy webserver, but since I now have a hard reliance on Cloudflare for most of my traffic, I switched to Nginx. One nice thing you can do now that you know all traffic is coming from Cloudflare is switch how SSL works.

Now I have an Origin Certificate from Cloudflare hard-loaded into Nginx with Authenticated Origin Pulls set up so that I know for sure all traffic is running through Cloudflare. The certificate is signed for 15 years, so I can feel pretty confident sticking it in my secrets management system and not thinking about it ever again. For those that are interested there is a tutorial here on how to do it: https://www.digitalocean.com/community/tutorials/how-to-host-a-website-using-cloudflare-and-nginx-on-ubuntu-22-04

Alright the site is back up and working fine. It's what you are reading right now, so if it's up then the system works.

Unsolved Problems

- My containers still can't communicate with IPv4 resources even though they're on an IPv6 network with an IPv6 bridge. The DNS64 resolution is working, and I've added fixed-cidr-v6 into Docker. I can talk to IPv6 resources just fine, but the NAT64 conversion process doesn't work. I'm going to keep plugging away at it.

- Before you ping me I did add NAT with ip6tables.

- SMTP server problems. I haven't been able to find a commercial SMTP service that has an AAAA record. Mailgun and SES were both duds as were a few of the smaller ones I tried. Even Fastmail didn't have anything that could help me. If you know of one please let me know: https://c.im/@matdevdug

Why not stick with IPv4?

Putting aside "because we're running out of addresses" for a minute. If we had adopted IPv6 earlier, the way we do infrastructure could be radically different. So often companies use technology like load balancers and tunnels not because they actually need anything that these things do, but because they need some sort of logical division between private IP ranges and a public IP address they can stick in a DNS A record.

If you break a load balancer into its basic parts, it is doing two things. It is distributing incoming packets onto the back-end servers and it's checking the health of those servers and taking unhealthy ones out of the rotation. Nowadays they often handle things like SSL termination and metrics, but it's not a requirement to be called a load balancer.

There are many ways to load balance, but the most common are as follows:

- Round-robin of connection requests.

- Weighted Round-Robin with different servers getting more or less.

- Least-Connection with servers that have the fewest connections getting more requests.

- Weighted Least-Connection, same thing but you can tilt it towards certain boxes.
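To make the point concrete, here are the four strategies as toy Python functions. This is a sketch of the selection logic only, with no health checks or networking, and the server names and weights are placeholders. Notice that nothing in here cares what kind of address a server has.

```python
import itertools


def round_robin(servers):
    """Hand out servers in order, forever."""
    return itertools.cycle(servers)


def weighted_round_robin(weights):
    """`weights` maps server -> integer weight; heavier servers appear more often."""
    expanded = [server for server, weight in weights.items() for _ in range(weight)]
    return itertools.cycle(expanded)


def least_connection(active):
    """`active` maps server -> open connection count; pick the least busy."""
    return min(active, key=active.get)


def weighted_least_connection(active, weights):
    """Like least_connection, but scaled so high-weight servers take more load."""
    return min(active, key=lambda server: active[server] / weights[server])


rr = round_robin(["a", "b", "c"])
print([next(rr) for _ in range(4)])                      # ['a', 'b', 'c', 'a']
print(least_connection({"a": 5, "b": 2, "c": 7}))        # b
print(weighted_least_connection({"a": 5, "b": 2}, {"a": 4, "b": 1}))  # a
```

In the last call server `a` has more open connections, but with a weight of 4 its load per unit of weight (1.25) is lower than `b`'s (2), so it still gets the next request.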

What you notice is there isn't anything there that requires, or really even benefits from a private IP address vs a public IP address. Configuring the hosts to accept traffic from only one source (the load balancer) is pretty simple and relatively cheap to do, computationally speaking. A lot of the infrastructure designs we've been forced into, things like VPCs, NAT gateways, public vs private subnets, all of these things could have been skipped or relied on less.

The other irony is that IP whitelisting, which currently is a broken security practice that is mostly a waste of time as we all use IP addresses owned by cloud providers, would actually be something that mattered. The process for companies to purchase a /44 for themselves would have gotten easier with demand and it would have been more common for people to go and buy a block of IPs from American Registry for Internet Numbers (ARIN), Réseaux IP Européens Network Coordination Centre (RIPE), or Asia-Pacific Network Information Centre (APNIC).
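Checking a source address against a block an organization actually owns is a few lines with the standard `ipaddress` module. A minimal sketch, assuming a hypothetical company block carved out of the `2001:db8::/32` documentation range; `is_allowed` is my own name for the helper.

```python
import ipaddress

# Hypothetical company-owned /44, taken from the documentation range.
COMPANY_BLOCKS = ["2001:db8:10::/44"]


def is_allowed(source: str, allowed_blocks=COMPANY_BLOCKS) -> bool:
    """True if `source` falls inside any of the allowed CIDR blocks."""
    address = ipaddress.ip_address(source)
    return any(address in ipaddress.ip_network(block) for block in allowed_blocks)


print(is_allowed("2001:db8:1a::1"))  # True, inside the /44
print(is_allowed("2001:db8:20::1"))  # False, just past the end of it
```

The same check works for the load balancer case: put the balancer's address or prefix in the list and reject everything else.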

You would never need to think "well is Google going to buy more IP addresses" or "I need to monitor GitHub support page to make sure they don't add more later". You'd have one block they'd use for their entire business until the end of time. Container systems wouldn't need to assign internal IP addresses on each host, it would be trivial to allocate chunks of public IPs for them to use and also advertise over standard public DNS as needed.

Obviously I'm not saying private networks serve no function. My point is a lot of the network design we've adopted isn't based on necessity but on forced design. I suspect we would have ended up designing applications with the knowledge that they sit on the open internet vs relying entirely on the security of a private VPC. Given how security exploits work this probably would have been a benefit to overall security and design.

So even if cost and availability aren't a concern for you, allowing your organization more ownership and control over how your network functions has real measurable value.

Is this gonna get better?

So this sucks. You either pay cloud providers more money or you get a broken internet. My hope is that the folks who don't want to pay push more IPv6 adoption, but it's also a shame that it has taken so long for us to get here. All these problems and issues could have been addressed gradually and instead it's going to be something where people freak out until the teams that own these resources make the required changes.

I'm hopeful the end result might be better. I think at the very least it might open up more opportunities for smaller companies looking to establish themselves permanently with an IP range that they'll own forever, plus as IPv6 gets more mainstream it will (hopefully) get easier for customers to live with. But I have to say right now this is so broken it's kind of amazing.

If you are a small company looking to not pay the extra IP tax, set aside a lot of time to solve a myriad of problems you are going to encounter.

Thoughts/corrections/objections: [email protected]