What if Kubernetes was idiot-proof?

Love/Hate Relationship

AWS and I have spent a frightening amount of time together. In that time I have come to love that weird web UI with bizarre application naming. It's like asking an alien not familiar with humans to name things. Why is Athena named Athena? Nothing else gets a deity name. CloudSearch, CloudFormation, CloudFront, Cloud9, CloudTrail, CloudWatch, CloudHSM, CloudShell are just lazy, we understand you are the cloud. Also Amazon if you are going to overuse a word that I'm going to search, use the second word so the right result comes up faster. All that said, I've come to find comfort in its primary color icons and "mobile phones don't exist" web UI.

Outside of AWS I've also done a fair amount of work with Azure, mostly in Kubernetes or k8s-adjacent spaces. All said, I've now worked with Kubernetes on bare metal in a datacenter, in a datacenter with VMs, on Raspberry Pis in a cluster with k3s, in AWS with EKS, in Azure with AKS, on DigitalOcean Kubernetes and finally with GKE in GCP. Me and the Kubernetes help documentation site are old friends at this point, a sea of purple links. I say all this to suggest that I have made virtually every mistake one can with this particular platform.

When being told I was going to be working in GCP (Google Cloud Platform) I was not enthused. I try to stay away from Google products in my personal life. I switched off Gmail for Fastmail, Search for DuckDuckGo, Android for iOS and Chrome for Firefox. It has nothing to do with privacy, I actually feel like I understand how Google uses my personal data pretty well and don't object to it on an ideological level. I'm fine with making an informed decision about using my personal data if the return to me in functionality is high enough.

I mostly move off Google services in my personal life because I don't understand how Google makes decisions. I'm not talking about killing Reader or any of the Google graveyard things. Companies try things and often they don't work out, that's life. It's that I don't even know how fundamental technology is perceived. Is Golang, which relies extensively on Google employees, doing well? Are they happy with it, or is it in danger? Is Flutter close to death or thriving? Do they like Gmail or has it lost favor with whatever executives are in charge of it this month? My inability to get a sense of whether something is doing well or poorly inside of Google makes me nervous about adopting their stack into my life.

I say all this to explain that, even though I was not excited to use GCP and learn a new platform, and even though there are parts of GCP that I find deeply frustrating compared to its peers... there is a gem here. If you are serious about using Kubernetes, GKE is the best product I've seen on the market. It isn't even close. GKE is so good that if you are all-in on Kubernetes, it's worth considering moving from AWS or Azure.

I know, bold statement.

TL;DR

- GKE is the best managed k8s product I've ever tried. It aggressively helps you do things correctly and is easy to set up and run.

- GKE Autopilot is all of that but they handle all the node/upgrade/security etc. It's like Heroku-levels of easy to get something deployed. If you are a small company who doesn't want to hire or assign someone to manage infrastructure, you could grow forever on GKE Autopilot and still be able to easily migrate to another provider or the datacenter later on.

- The rest of GCP is a bit of a mixed bag. Do your homework.

Disclaimer

I am not and have never been a Google employee/contractor/someone they know exists. I once bombed an interview when I was 23 for a job at Google. This interview stands out to me because, despite working with it every day for a year, my brain just forgot how RAID parity worked on a data transmission level. I got off the call and instantly all memory of how it worked returned to me. Needless to say, nobody at Google cares that I have written this and it is just my opinion.

Corrections are always appreciated. Let me know at: [email protected]

Traditional K8s Setup

One common complaint about k8s is you have to set up everything. Even "hosted" platforms often just provide the control plane, meaning almost everything else is some variation of your problem. Here's the typical collection of what you need to make decisions about, in no particular order:

- Secrets encryption: yes/no how

- Version of Kubernetes to start on

- What autoscaling technology are you going to use

- Managed/unmanaged nodes

- CSI drivers, do you need them, which ones

- Which CNI, what does it mean to select a CNI, how do they work behind the scenes. This one in particular throws new cluster users because it seems like a nothing decision, but it actually has a profound impact on how the cluster operates

- Can you provision load balancers from inside of the cluster?

- CoreDNS, do you want it to cache DNS requests?

- Vertical pod autoscaling vs horizontal pod autoscaling

- Monitoring, what collects the stats, what default data do you get, where does it get stored (node-exporter setup to Prometheus?)

- Are you gonna use an OIDC? You probably want it, how do you set it up?

- Helm, yes or no?

- How do service accounts work?

- How do you link IAM with the cluster?

- How do you audit the cluster for compliance purposes?

- Is the cluster deployed in the correct resilient way to guard against AZ outages?

- Service mesh, do you have one, how do you install it, how do you manage it?

- What OS is going to run on your nodes?

- How do you test upgrades? What checks to make sure you aren't relying on a removed API? When is the right time to upgrade?

- What is monitoring overall security posture? Do you have known issues with the cluster? What is telling you that?

- Backups! Do you want them? What controls them? Can you test them?

- Cost control. What tells you if you have a massively overprovisioned node group?

This isn't anywhere near all the questions you need to answer, but this is typically where you need to start. One frustration with a lot of k8s services I've tried in the past is they have multiple solutions to every problem and it's unclear which is the recommended path. I don't want to commit to the wrong CNI and then find out later that nobody has used that one in six months and I'm an idiot. (I'm often an idiot but I prefer to be caught for less dumb reasons).

Are these failings of Kubernetes?

I don't think so. K8s is everything to every org. You can't make a universal tool that attempts to cover every edge case that doesn't allow for a lot of customization. With customization comes some degree of risk that you'll make the wrong choice. It's the Mac vs Linux laptop debate in an infrastructure sphere. You can get exactly what you need with the Linux box but you need to understand if all the hardware is supported and what tradeoffs each decision involves. With a Mac I'm getting whatever Apple thinks is the correct combination of all of those pieces, for better or worse.

If you can get away with Cloud Run or ECS, don't let me stop you. Pick the level of customization you need for the job, not whatever is hot right now.

Enter GKE

Alright so when I was hired I was tasked with replacing an aging GKE cluster that was coming to end of life running Istio. After running some checks, we weren't using any of the features of Istio, so we decided to go with Linkerd since it's a much easier to maintain service mesh. I sat down and started my process for upgrading an old cluster.

- Check the node OS for upgrades, check the node k8s version

- Confirm API usage to see if we are using outdated APIs

- How do I install and manage the ancillary services and what are they? What installs CoreDNS, service mesh, redis, etc.

- Can I stand up a clean cluster from what I have or was critical stuff added by hand? It never should be but it often is.

- Map out the application dependencies and ensure they're put into place in the right order.

- What controls DNS/load balancing and how can I cut between cluster 1 and cluster 2

It's not a ton of work, but it's also not zero work. It's also a good introduction to how applications work and what dependencies they have. Now my experience with recreating old clusters in k8s has been, to be blunt, a fucking disaster in the past. It typically involves 1% trickle traffic, everything returning 500s, looking at logs, figuring out what is missing, adding it, turning 1% back on, errors everywhere, look at APM, oh that app's healthcheck is wrong, etc.

The process with GKE was so easy I was actually sweating a little bit when I cut over traffic, because I was sure this wasn't going to work. It took longer to map out the application dependencies and figure out the Istio -> Linkerd part than it did to actually recreate the cluster. That's a first and a lot of it has to do with how GKE holds your hand through every step.

How does GKE make your life easier?

Let's walk through my checklist and how GKE solves pretty much all of them.

1. Node OS and k8s version on the node.

GCP offers a wide variety of OSes that you can run but recommends one I have never heard of before.

Container-Optimized OS from Google is an operating system image for your Compute Engine VMs that is optimized for running containers. Container-Optimized OS is maintained by Google and based on the open source Chromium OS project. With Container-Optimized OS, you can bring up your containers on Google Cloud Platform quickly, efficiently, and securely.

I'll be honest, my first thought when I saw "server OS based on Chromium" was "someone at Google really needed to get an OKR win". However, after using it for a year, I've really come to like it. Now it's not a solution for everyone, but if you can operate within the limits it's a really nice solution. Here are the limits.

- No package manager. They do have something called the CoreOS Toolbox, which I've used a few times to debug problems, so you can still troubleshoot.

- No non-containerized applications

- No installing third-party kernel modules or drivers

- It is not supported outside of the GCP environment

I know, it's a bad list. But when I read some of the nice features I decided to make the switch. Here's what you get:

- The root filesystem is always mounted as read-only. Additionally, its checksum is computed at build time and verified by the kernel on each boot.

- Stateless, kinda. /etc/ is writable but stateless. So you can write configuration settings but those settings do not persist across reboots. (Certain data, such as users' home directories, logs, and Docker images, persist across reboots, as they are not part of the root filesystem.)

- A ton of other security stuff you get for free.

I love all this. Google tests the OS internally, they're scanning for CVEs, they're slowly rolling out updates and it's designed to just run containers correctly, which is all I need. This OS has been idiot-proof. In a year of running it I haven't had a single OS issue. Updates go out, they get patched, I don't ever notice. Troubleshooting works fine. This means I never need to talk about a Linux upgrade ever again AND the limitations of the OS mean my applications can't rely on stuff they shouldn't use. Truly set and forget.

I don't run software I can't build from source.

Go nuts: https://cloud.google.com/container-optimized-os/docs/how-to/building-from-open-source

2. Outdated APIs.

There are a lot of third-party tools that do this for you and they're all pretty good. However, GKE does it automatically in a really smart way.

Basically the web UI warns you if you are relying on outdated APIs and will not upgrade if you are. Super easy to check "do I have bad API calls hiding somewhere".
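To make "outdated API" concrete, here is a small sketch of the kind of manifest that warning is about. The `extensions/v1beta1` Ingress API was removed in Kubernetes 1.22 in favor of `networking.k8s.io/v1`. The names `my-app` and `my-app-svc` are made up for illustration and are not from my cluster.

```yaml
# Old form (removed in Kubernetes 1.22), shown as comments for comparison:
#   apiVersion: extensions/v1beta1
#   kind: Ingress
#   spec:
#     backend:
#       serviceName: my-app-svc
#       servicePort: 80
#
# Current form. All names below are hypothetical.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: my-app
spec:
  defaultBackend:
    service:
      name: my-app-svc
      port:
        number: 80
```

If something in your cluster is still submitting the commented-out form, that is the sort of call you want surfaced before an upgrade rather than after.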

3. How do I install and manage the ancillary services and what are they?

GKE comes batteries included. DNS is there and it's just a flag in Terraform to configure. Service accounts, same thing. Ingress and Gateway to GCP are also just in there working. Hooking up to your VPC is a toggle in Terraform, so your Pods are natively routable. They even reserve the Pod IPs before the Pods are created, which is nice and eliminates a source of problems.

They have their own CNI which also just works. One end of the Virtual Ethernet Device pair is attached to the Pod and the other is connected to the Linux bridge device cbr0. I've never encountered any problems with any of the GKE defaults, from the subnets it offers to generate for pods to the CNI it is using for networking. The DNS cache is nice to be able to turn on easily.

4. Can I stand up a clean cluster from what I have or was critical stuff added by hand?

Because everything you need to do happens in Terraform for GKE, it's very simple to see if you can stand up another cluster. Load balancing is happening inside of YAMLs, ditto for deployments, so standing up a test cluster and seeing if apps deploy correctly to it is very fast. You don't have to install a million Helm charts to get everything configured just right.
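As an example of load balancing living in YAML, a Service along the lines of the sketch below should be enough to get a load balancer provisioned on GKE. The `networking.gke.io/load-balancer-type` annotation asks for an internal one; leave it off for an external one. The name, labels, and ports are placeholders I picked for illustration.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-app-internal          # hypothetical name
  annotations:
    networking.gke.io/load-balancer-type: "Internal"
spec:
  type: LoadBalancer
  selector:
    app: my-app                  # must match the labels on your Pods
  ports:
    - port: 80                   # port exposed by the load balancer
      targetPort: 8080           # port your container listens on
      protocol: TCP
```

Since this lives next to the Deployment in the same repo, pointing it at a fresh test cluster is just another apply.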

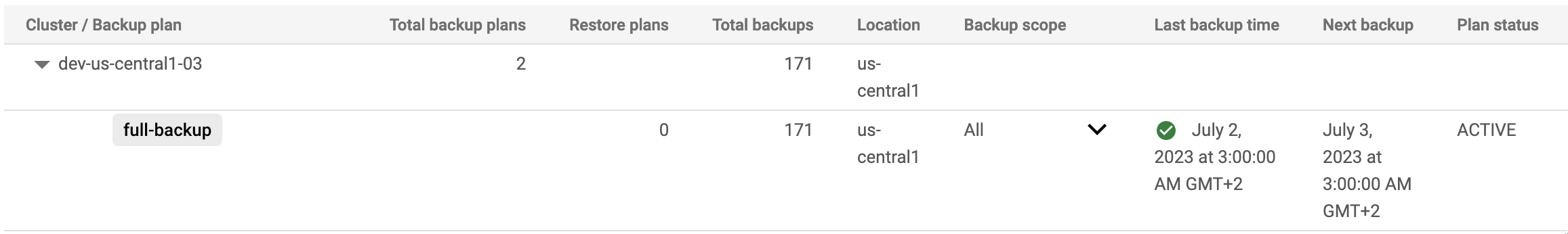

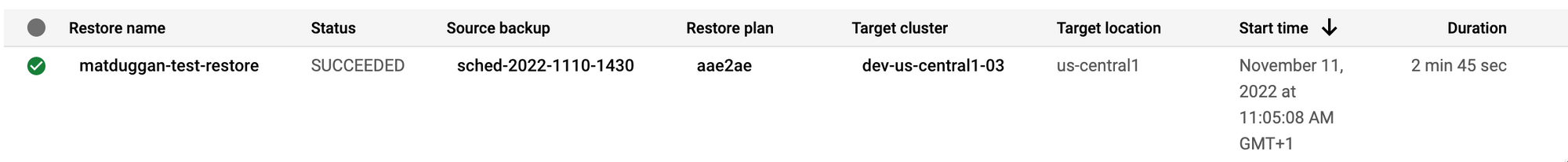

However, they ALSO have backup and restore built in!

Here is your backup running happily and restoring it is just as easy to do through the UI.

So if you have a cluster with a bunch of custom stuff in there and don't have time to sort it out, you don't have to.

5. Map out the application dependencies and ensure they're put into place in the right order.

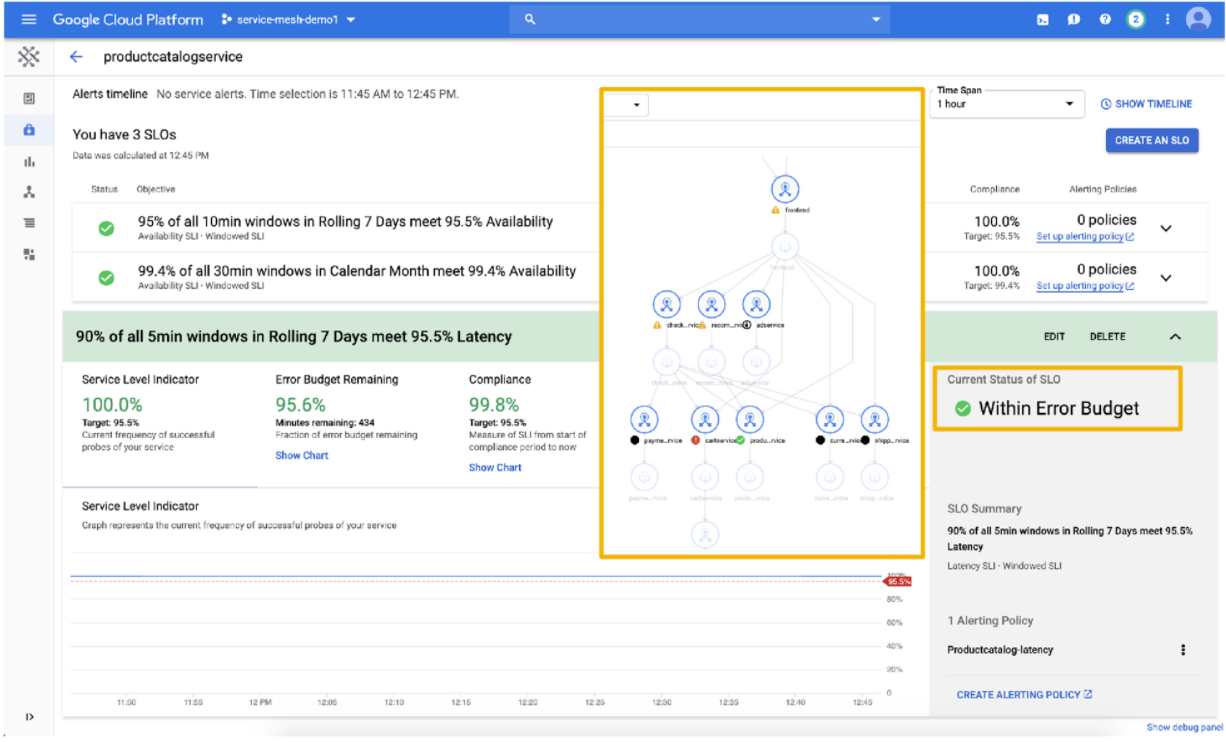

This obviously varies from place to place, but the web UI for GKE does make it very easy to inspect deployments and see what is going on with them. This helps a lot, but of course if you have a service mesh that's going to be the one-stop shop for figuring out what talks to what when. The Anthos service mesh provides this and is easy to add onto a cluster.

6. What controls DNS/load balancing and how can I cut between cluster 1 and cluster 2

Alright so this is the only bad part. GCP load balancers provide zero useful information. I don't know why, or who made the web UIs look like this. Again, making an internal or external load balancer as an Ingress or Gateway with GKE is stupid easy with annotations.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: my-ingress
  annotations:
    kubernetes.io/ingress.global-static-ip-name: my-static-address
    kubernetes.io/ingress.allow-http: "false"
    networking.gke.io/managed-certificates: managed-cert
    kubernetes.io/ingress.class: "gce"
```
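The `managed-cert` name in that annotation points at a separate ManagedCertificate object in the same namespace. A minimal sketch of what that object looks like is below; the domain is a placeholder, and `my-static-address` is assumed to be a global static IP you have already reserved outside the cluster.

```yaml
apiVersion: networking.gke.io/v1
kind: ManagedCertificate
metadata:
  name: managed-cert             # must match the annotation on the Ingress
spec:
  domains:
    - example.com                # placeholder domain, point its DNS at the static IP
```

The certificate only gets issued once DNS for the domain resolves to the load balancer, so expect a delay between applying this and HTTPS actually working.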

I don't know who this is for or why I would care what region of the world my traffic is coming from. It's also not showing correctly on Firefox, with the screen cut off on the right. For context, this is the correct information I want from a load balancer every single time:

The entire GCP load balancer thing is a tire-fire. The web UI to make load balancers breaks all the time. Adding an SSL certificate through the web UI almost never works. They give you a ton of great information about the backend of the load balancer, but adding things like a new TLS policy requires kind of a lot of custom stuff. I could go on and on.

Autopilot

Alright, so let's say all of that was still a bit much for you. You want a basic infrastructure where you don't need to think about nodes, or load balancers, or operating systems. You write your YAML, you deploy it to The Cloud and then things happen automagically. That is GKE Autopilot.

Here are all the docs on it. Let me give you the elevator pitch. It's a stupid easy way to run Kubernetes that is probably going to save you money. Why? Because selecting and adjusting the type and size of node you provision is something most starting companies mess up with Kubernetes and here you don't need to do that. You aren't billed for unused capacity on your nodes, because GKE manages the nodes. You also aren't charged for system Pods, operating system costs, or unscheduled workloads.
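In practice this means the resource requests on your Pods become the number that matters, since as I understand the pricing model that is what you pay for. A minimal sketch of a Deployment that would run on Autopilot is below. The name, image, and request sizes are placeholders, and Autopilot may adjust requests to fit its own minimums and CPU-to-memory ratios.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app                   # hypothetical name
spec:
  replicas: 2
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app
    spec:
      containers:
        - name: web
          image: nginx:1.25      # placeholder image
          ports:
            - containerPort: 80
          resources:
            requests:
              cpu: 250m          # what you ask for here drives the bill
              memory: 512Mi
```

Notice there is nothing about node pools or machine types anywhere in there, which is the whole point.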

Hardening Autopilot is also very easy. You can see all the options that exist and are already turned on here. If you are a person who is looking to deploy an application where maintaining it cannot be a big part of your week, this is a very flexible platform to do it on. You can move to standard GKE later if you'd like. Want off GCP? It is not that much work to convert your YAML to work with a different hosted provider or a datacenter.

I went in with low expectations and was very impressed.

Why shouldn't I use GKE?

I hinted at it above. As good as GKE is, the rest of GCP is crazy inconsistent. First the project structure for how things work is maddening. You have an organization and below that are projects (which are basically AWS accounts). They all have their own permission structure which can be inherited from folders that you put the projects in. However since GCP doesn't allow for the combination of IAM premade roles into custom roles, you end up needing to write hundreds of lines of Terraform for custom roles OR just find a premade role that is Pretty Close.
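To show why this balloons: a custom role is a flat list of individual permissions, not a list of other roles. Below is a sketch of a role definition file in the format `gcloud iam roles create --file` accepts. The title and the choice of permissions are made up for illustration.

```yaml
# Hypothetical custom role: read-only access to Pods and Deployments.
title: "Pod Viewer"
description: "Read-only access to Pods and Deployments"
stage: "GA"
includedPermissions:
  - container.pods.get
  - container.pods.list
  - container.deployments.get
  - container.deployments.list
```

Four permissions is tidy. Recreating the union of two or three premade roles this way is where the hundreds of lines come from.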

GCP excels at networking, data visualization (outside of load balancing), Kubernetes, serverless with Cloud Run and Cloud Functions, and big data work. A lot of the smaller services on the edge don't get a lot of love. If you are a heavy user of the following, proceed with caution.

GCP Secret Manager

For a long time GCP didn't have any secret manager, instead having customers encrypt objects in buckets. Their secret manager product is about as bare-bones as it gets. Secret rotation is basically a cron job that pushes to a Pub/Sub topic and then you do the rest of it. No metrics, no compliance check integrations, no help with rotation.

It'll work for most use cases, but there's just zero bells and whistles.

GCP SSL Certificates

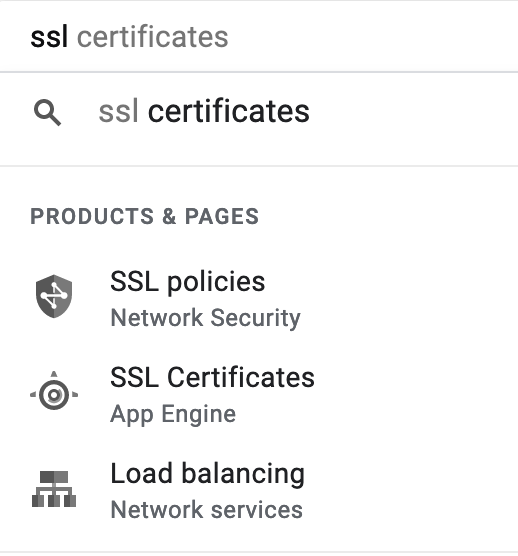

I don't know how Let's Encrypt, a free service, outperforms GCP's SSL certificate generation process. I've never seen a service that mangles SSL certificates as badly as this. Let's start with just trying to find them.

The first two aren't what I'm looking for. The third doesn't take me to anything that looks like an SSL certificate. SSL certificates actually live at Security -> Certificate Manager. If you try to go there even if you have SSL certificates you get this screen.

I'm baffled. I have Google SSL certificates with their load balancers. How is the API not enabled?

To issue the certs it does the same sort of DNS and backend checking as a lot of other services. To be honest I've had more problems with this service issuing SSL certificates than any in my entire life. It was easier to buy certificates from Verisign. If you rely a lot on generating a ton of these quickly, be warned.

IAM recommender

GCP has this great feature that audits what permissions a role has and then tells you, basically, "you gave them too many permissions". It looks like this:

Great, right? Now sometimes this service will recommend you modify the permissions to either a new premade role or a custom role. It's unclear when or how that happens, but when it does there is a little lightbulb next to it. You can click it to apply the new permissions, but since my (and most people's) permissions are managed in code somewhere, this obviously doesn't do anything long-term.

Now you can push these recommendations to BigQuery, but what I want is some sort of JSON or CSV that just says "switch these to use x premade IAM roles". My point is there is a lot of GCP stuff that is like 90% there. Engineers did the hard work of tracking IAM usage, generating the report, showing me the report, making a recommendation. I just need an easier way to act on that outside of the API or GCP web console.

These are just a few examples that immediately spring to mind. My point being when evaluating GCP please kick the tires on all the services, don't just see that one named what you are expecting exists. The user experience and quality varies wildly.

I'm interested, how do I get started?

GCP Terraform used to be bad, but now it is quite good. You can see the whole getting started guide here. I recommend trying Autopilot and seeing if it works for you, just because it's cheap.

Even if you've spent a lot of time running k8s, give GKE a try. It's really impressive, even if you don't intend to move over to it. The security posture auditing, workload metrics, backup, hosted Prometheus, etc. are all really nice. I don't love all the GCP products, but this one has super impressed me.