Until recently, the LLM tools I’ve tried have been, to be frank, worthless. Copilot was best at writing extremely verbose comments. Gemini would turn a 200-line script into a 700-line collection of gibberish. It was easy for me to, more or less, ignore LLMs as the same over-hyped nonsense as the Metaverse and NFTs.

This was great for me because I understand that LLMs represent a massive shift in power from an already weakened worker class to an increasingly monarch-level wealthy class. By stealing all human knowledge and paying nothing for it, then selling the output of that knowledge, LLMs are an impossibly unethical tool. So if the energy-wasting tool of the tech executive class is also a terrible tool, easy choice.

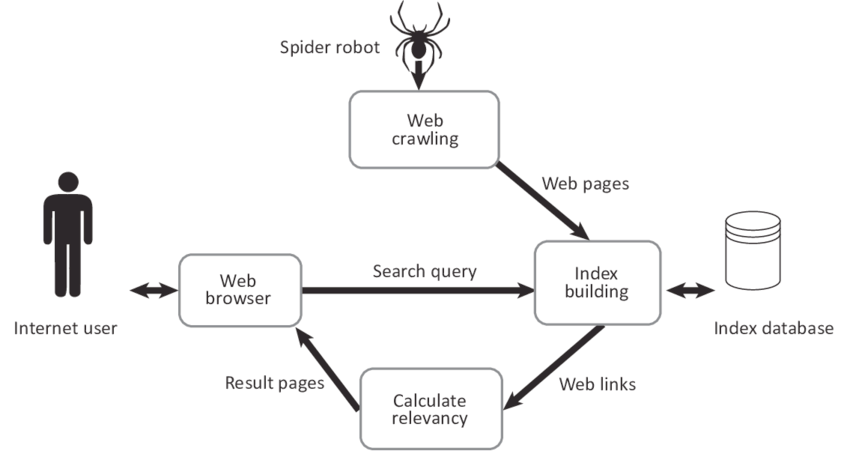

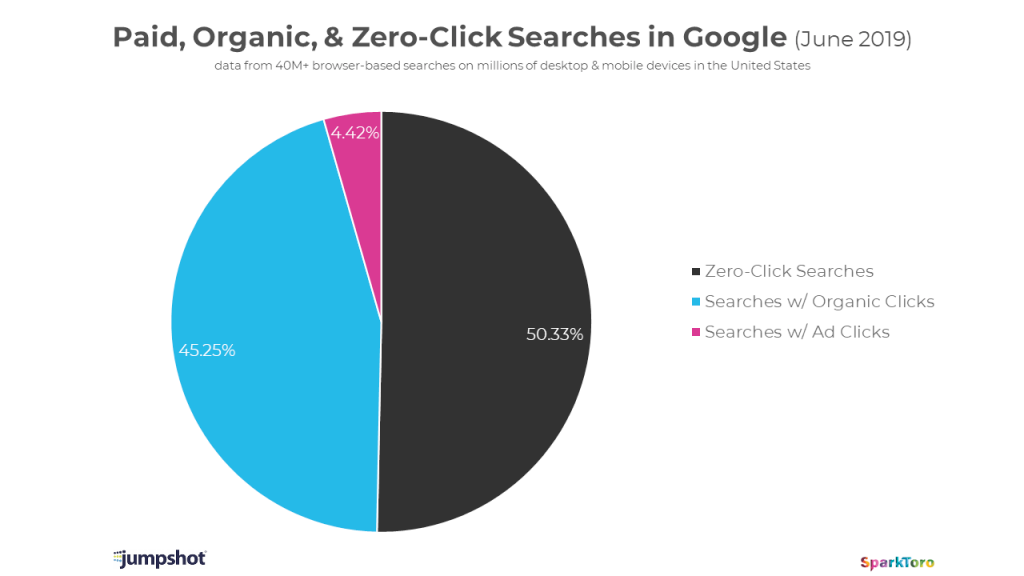

Like boycotting Tesla for being owned by an evil person and also making crappy overpriced cars, or not shopping at Hobby Lobby and just buying directly from their Chinese suppliers, the best boycotts are ones where you aren’t really losing much. Google can continue to choke out independent websites with their AI results that aren’t very good and I get to feel superior doing what I was going to do anyway by not using Google search.

This logic was all super straightforward right up until I tried Claude Code. Then it all got much more complicated.

Some Harsh Truths

Let’s just get this out of the way right off the bat. I didn't want to like Claude Code. I got a subscription with the purpose of writing a review on it where I would find that it was just as terrible as Gemini and Copilot. Except that's not what happened.

Instead it was like discovering the 2AM kebab place might actually make the best pizza in town. I kept asking Claude to do annoying tasks where it was easy for me to tell if it had made a mistake and it kept doing them correctly. It felt impossible but the proof was right in front of me.

I’ve written tens of thousands of lines of Terraform in my life. It is a miserable chore to endlessly flip back and forth between the provider documentation and Vim, adding all the required parameters. I don’t learn anything by doing it, it’s just a grind I have to push through to get back to the meaningful work.

The amount of my precious time on Earth that I have wasted importing all of a company's DNS records into Terraform, then taking the autogenerated names and organizing them so that they make sense for the business, is difficult to express. It's like if the only way I knew how to make a hamburger bun was to carefully put every sesame seed on top by hand, only to stumble upon an 8-pack of buns for $4 at the grocery store after years of using tiny tweezers to put the seeds in exactly the right spot.

I feel the same way about writing robust READMEs, k8s YAML and reorganizing the file structure of projects. Setting up more GitHub Actions is as much fun as doing my taxes. If I never had to write another regex for the rest of my life, that would be a better life by every conceivable measure.

These are tasks that sap my enthusiasm for this type of work, not feed it. I’m not sad to offload them to Claude and switch to mostly reviewing its PRs.

But the tool being useful doesn’t remove what’s bad about it. This is where a lot of pro-LLM people start to delude themselves.

Pro-LLM Arguments

In no particular order, here are the arguments I keep seeing from people who want to keep using LLMs for why their use is fine.

IP/Copyright Isn’t Real

This is the most common one I see and the worst. It can be condensed down to “because most things on the internet originally existed to find pornography and/or pirate movies, stealing all content on the internet is actually fine because programmers don’t care about copyright”.

You also can’t have it both ways. OpenAI can’t decide to enforce NDAs and trademarks and then also declare law is meaningless. If I don’t get to launch a webmail service named Gmail+ then Google doesn’t get to steal all the books in human existence.

The argument basically boils down to: because we all pirated music in 2004, intellectual property is a fiction when it stands in the way of technology. By this logic, because I shoplifted a Snickers bar when I was 12, property rights don't exist and I should be allowed to live in your house.

Code Quality Doesn't Matter (According to Someone Who Might Be Right)

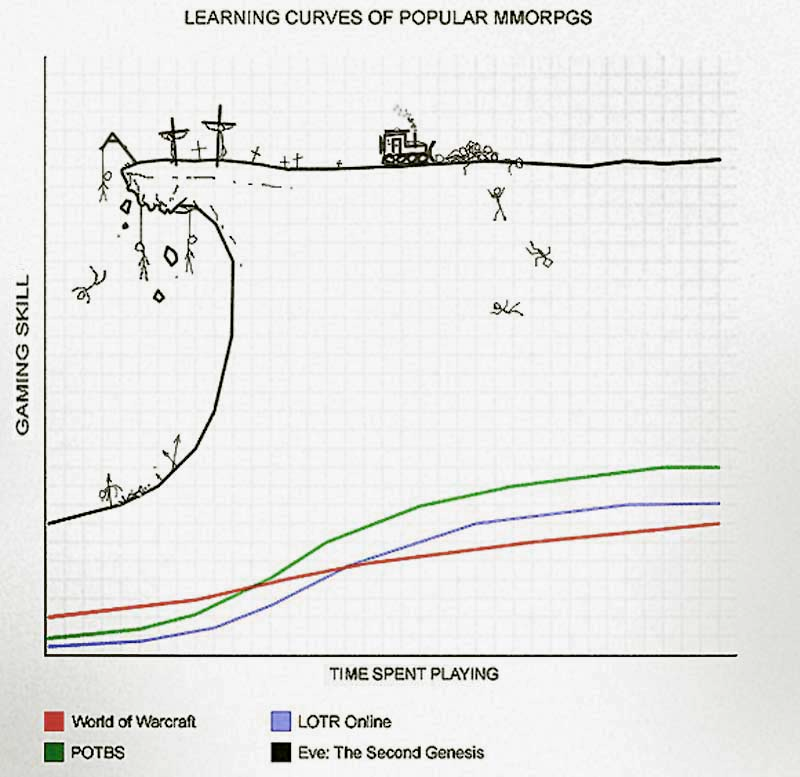

I have an internet friend I met years ago playing EVE Online who is a brutally pragmatic person. To someone like him, code craftsmanship is a joke. For those of you who are unaware, EVE Online is the spaceship videogame where sociopaths spend months plotting against each other.

His approach to development is 80% refining requirements and getting feedback. He doesn’t care at all about DRY, he uses Node because then he can focus on just JavaScript, he doesn’t invest a second into optimization until the application hits a hard wall that absolutely requires it. His biggest source of clients? Creating fast full stacks because internal teams are missing deadlines. And he is booked up for at least 12 months out all the time because he hits deadlines.

When he started freelancing I thought he was crazy. Who was going to hire this band of Eastern European programmers who chain smoke during calls and whose motto is basically "we never miss a deadline"? As it turns out, a lot of people.

Why doesn't he care?

Why doesn't he care about these things? He believes that programmers fundamentally don't understand the business they are in. "Code is perishable" is something he says a lot and he means it. Most of the things we all associate with quality (full test coverage, dependency management, etc.) are programmers not understanding the rate of churn a project undergoes over its lifespan. The job of a programmer, according to him, is delivering features that people will use. How pleasant and well-organized that code is to work with is not really a thing that matters in the long term.

He doesn't see LLM-generated code as a problem because he's not building software with a vision that it will still be used in 10 years. Most of the stuff typically associated with quality he, more or less, throws in the trash. He built a pretty large stack for an automotive company and my jaw must have hit the table when he revealed they're deploying m6g.4xlarge instances for a NodeJS full-stack application. "That seems large to me for that type of application" was my response.

He was like "yeah but all I care about is whether the user metrics show high success rate and high performance for the clients". It's $7000 a year for the servers, with two behind a load balancer. That's absolutely nothing compared with what it would cost to have a team of engineers tune it, and it means he can run laps around the internal teams who are, basically, his greatest competition.

To be clear, he is very technically competent. He simply rejects a lot of the conventional wisdom out there about what one has to do in order to make stuff. He focuses on features, then securing endpoints and more or less gives up on the rest of it. For someone like this, LLMs are a logical choice.

Why This Argument Doesn't Work for Me

The annoying thing about my friend is that his bank account suggests he's right. But I can't get there. If I'm writing a simple script or something as a one-off, it can sometimes feel like we're all wasting the company's time when we have a long back and forth on the PR discussing comments or the linting or whatever. So it's not that this idea is entirely wrong.

But the problem with programming is you never know what is going to be "the core" of your work life for the next 5 years. Sometimes I write a feature, we push it out, it explodes in popularity and then I'm a little bit in trouble because I built an MVP and now it's a load-bearing, revenue-generating thing that has to be retooled.

I also just have trouble with the idea that this is my career and the thing I spend my limited time on earth doing and the quality of it doesn't matter. I delight in craftsmanship when I encounter it in almost any discipline. I love it when you walk into an old house and see all the handcrafted details everywhere that don't make economic sense but still look beautiful. I adore when someone has carefully selected the perfect font to match something.

Every programmer has that library or tool that they aspire to. That code base where you delight at looking at it because it proves perfection is possible even if you have never come close to reaching that level. For me it's always been looking through the source code of SQLite that restores my confidence. I might not know what I'm doing but it's good to be reminded that someone out there does.

Not everything I make is that great, but the concept of "well great doesn't matter at all" effectively boils down to "don't take pride in your work" which is probably the better economic argument but feels super bad to me. In a world full of cheap crap, it feels bad to make more of it and then stick my name on it.

So Why Are People Still Using Them?

The best argument for why programmers should be using LLMs is that it's going to be increasingly difficult to compete for jobs and promotions against people who are using them. In my experience Claude Code allows me to do two tasks at once. That's a pretty hard advantage to overcome.

Last Tuesday I had Claude Code write a GitHub Action for me while I worked on something else. When it was done, I reviewed it, approved it, and merged it. It was fine. It was better than fine, actually — it was exactly what I would have written, minus the forty-five minutes of resentment. I sat there for a moment, staring at the merged PR, feeling the way I imagine people feel when they hire a cleaning service for the first time: relieved, and then immediately guilty about the relief, and then annoyed at myself for feeling guilty about something that is, by any rational measure, a completely reasonable thing to do. Except it isn't reasonable. Or maybe it is. I genuinely don't know anymore, and that's the part that bothers me the most — not that the tool works, but that I've lost the clean certainty that it shouldn't.

So now I'm paying $20 a month to a company that scraped the collective knowledge of humanity without asking so that I can avoid writing Kubernetes YAML. I know what that makes me. I just haven't figured out a word for it yet that I can live with.

When I asked my EVE friend about it on a recent TeamSpeak session, he was quiet for a while. I thought that maybe my moral dilemma had shocked him into silence. Then he said, "You know what the difference is between you and me? I know I'm a mercenary. You thought you were an artist. We're both guys who type for money."

I couldn't think of a clever response to that. I still can't.